How bats control their viruses

Lowering exam stakes could cut the gender grade gap in physics, finds study

Female university students do much better in introductory physics exams if they have the option of retaking the tests. That’s according to a new analysis of almost two decades of US exam results for more than 26,000 students. The study’s authors say it shows that female students benefit from lower-stakes assessments – and that the persistent “gender grade gap” in physics exam results does not reflect a gender difference in physics knowledge or ability.

The study has been carried out by David Webb from the University of California, Davis, and Cassandra Paul from San Jose State University. It builds on previous work they did in 2023, which showed that the gender gap disappears in introductory physics classes that offer the chance for all students to retake the exams. That study did not, however, explore why the offer of a retake has such an impact.

In the new study, the duo analysed exam results from 1997 to 2015 for a series of introductory physics classes at a public university in the US. The dataset included 26,783 students, mostly in biosciences, of whom about 60% were female. Some of the classes let students retake exams while others did not, thereby letting the researchers explore why retakes close the gender gap.

When Webb and Paul examined the data for classes that offered retakes, they found that in first-attempt exams female students slightly outperformed their male counterparts. But male students performed better than female students in retakes.

This, the researchers argue, discounts the notion that retakes close the gender gap by allowing female students to improve their grades. Instead, they suggest that the benefit of retakes is that they lower the stakes of the first exam.

The team then compared the classes that offered retakes with those that did not, which they called high-stakes courses. They found that the gender gap in exam results was much larger in the high-stakes classes than in the lower-stakes classes that allowed retakes.

“This suggests that high-stakes exams give a benefit to men, on average, [and] lowering the stakes of each exam can remove that bias,” Webb told Physics World. He thinks that as well as allowing students to retake exams, physics might benefit from not having comprehensive high-stakes final exams but instead “use final exam time to let students retake earlier exams”.

The post Lowering exam stakes could cut the gender grade gap in physics, finds study appeared first on Physics World.

Quantum steampunk: we explore the art and science

Earlier this year I met the Massachusetts-based steampunk artist Bruce Rosenbaum at the Global Physics Summit of the American Physical Society. He was exhibiting a beautiful sculpture of a “quantum engine” that was created in collaboration with physicists including NIST’s Nicole Yunger Halpern – who pioneered the scientific field of quantum steampunk.

I was so taken by the art and science of quantum steampunk that I promised Rosenbaum that I would chat with him and Yunger Halpern on the podcast – and here is that conversation. We begin by exploring the art of steampunk and how it is influenced by the technology of the 19th century. Then, we look at the physics of quantum steampunk, a field that weds modern concepts of quantum information with thermodynamics – which itself is a scientific triumph of the 19th century.

- Philip Ball reviews Yunger Halpern’s 2022 book Quantum Steampunk: the Physics of Yesterday’s Tomorrow

This podcast is supported by Atlas Technologies, specialists in custom aluminium and titanium vacuum chambers as well as bonded bimetal flanges and fittings used everywhere from physics labs to semiconductor fabs.

The post Quantum steampunk: we explore the art and science appeared first on Physics World.

Quantum fluids mix like oil and water

Researchers in the US have replicated a well-known fluid-dynamics process called the Rayleigh–Taylor instability on a quantum scale for the first time. The work opens the hydrodynamics of quantum gases to further exploration and could even create a new platform for understanding gravitational dynamics in the early universe.

If you’ve ever tried mixing oil with water, you’ll understand how the Rayleigh–Taylor instability (RTI) can develop. Due to their different molecular structures and the nature of the forces between their molecules, the two fluids do not mix well. After some time, they separate, forming a clear interface between oil and water.

Scientists have studied the dynamics of this interface upon perturbations – disturbances of the system – for nearly 150 years, with major work being done by the British physicists Lord Rayleigh in 1883 and Geoffrey Taylor in 1950. Under specific conditions related to the buoyant force of the fluid and the perturbative force causing the disturbance, they showed that this interface becomes unstable. Rather than simply oscillating, the system deviates from its initial state, leading to the formation of interesting geometric patterns such as mushroom clouds and filaments of gas in the Crab Nebula.

An interface of spins

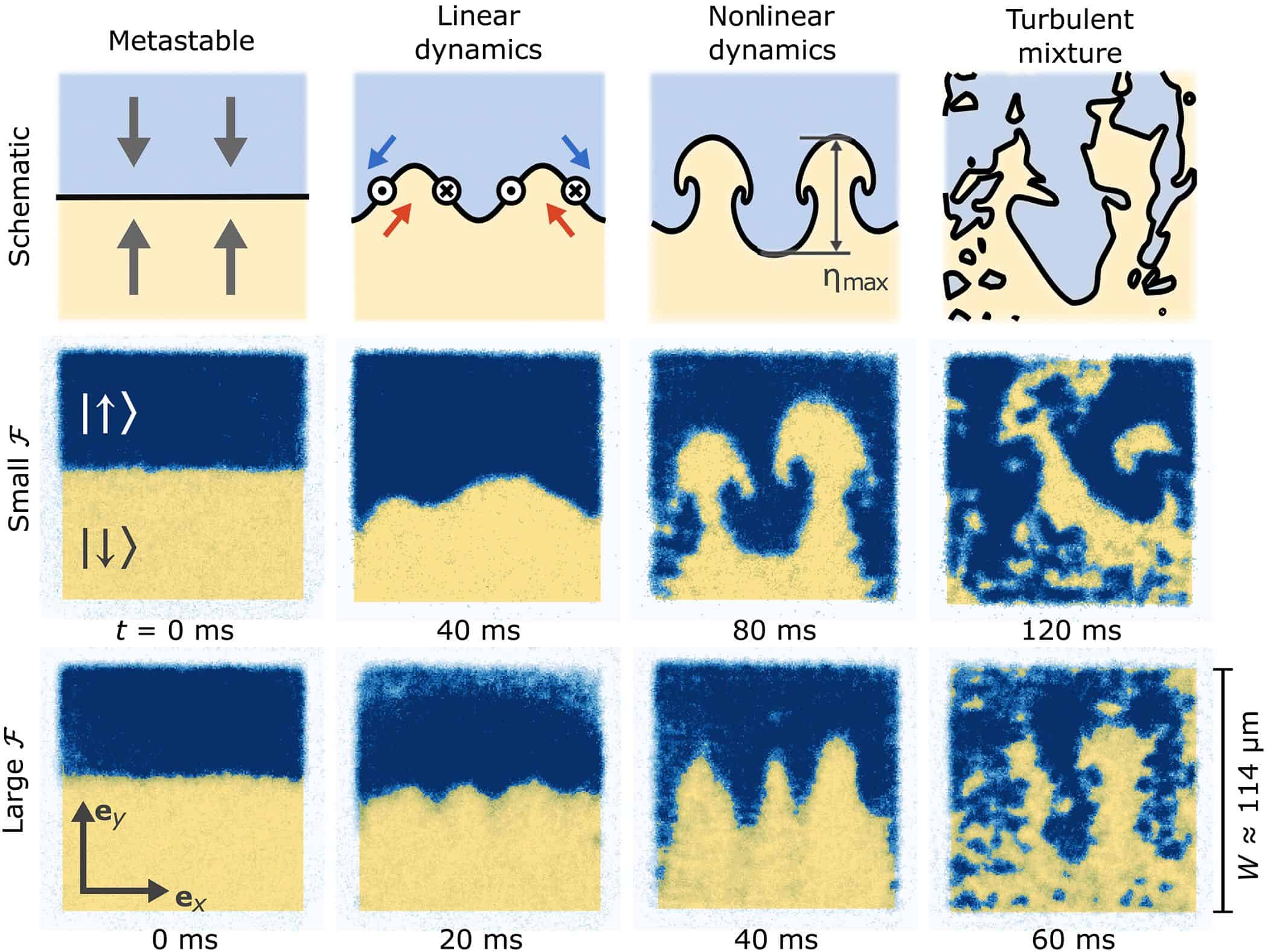

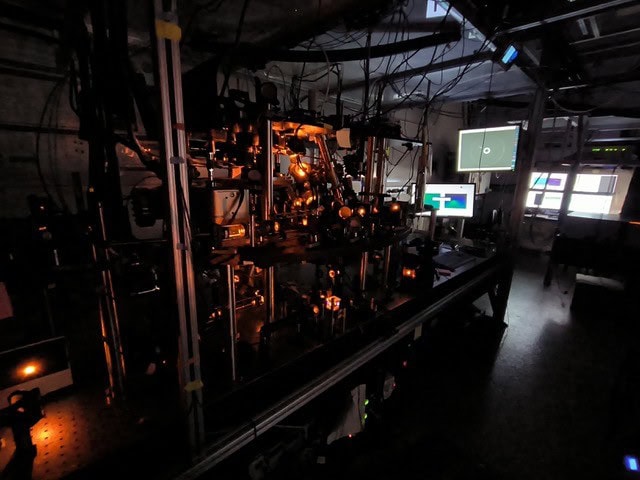

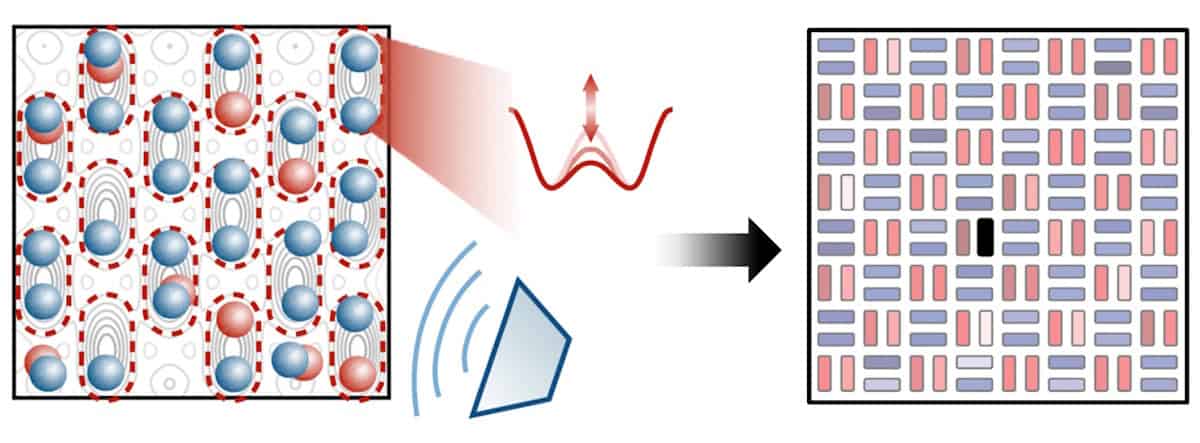

To show that such dynamics occur not only in macroscopic structures, but also at a quantum scale, scientists at the University of Maryland and the Joint Quantum Institute (JQI) created a two-state quantum system using a Bose–Einstein condensate (BEC) of sodium (²³Na) atoms. In this state of matter, the temperature is so low that the sodium atoms behave as a single coherent system, giving researchers precise control of their parameters.

The JQI team confine this BEC in a two-dimensional optical potential that essentially produces a 100 µm × 100 µm sheet of atoms in the horizontal plane. The scientists then apply a microwave pulse that excites half of the atoms from the spin-down to the spin-up state. By adding a small magnetic field gradient along one of the horizontal axes, they induce a force (the Stern–Gerlach force) that acts on the two spin components in opposite directions due to the differing signs of their magnetic moments. This creates a clear interface between the spin-up and the spin-down atoms.

Mushrooms and ripplons

To initiate the RTI, the scientists need to perturb this two-component BEC by reversing the magnetic field gradient, which consequently reverses the direction of the induced force. According to Ian Spielman, who led the work alongside co-principal investigator Gretchen Campbell, this wasn’t as easy as it sounds. “The most difficult part was preparing the initial state (horizontal interface) with high quality, and then reliably inverting the gradient rapidly and accurately,” Spielman says.

The researchers then investigated how the magnitude of this force difference, acting on the two sides of the interface, affected the dynamics of the two-component BEC. For a small differential force, they initially observed a sinusoidal modulation of the interface. After some time, the interface entered a nonlinear regime in which the RTI manifested through the formation of mushroom clouds. Finally, it became a turbulent mixture. The larger the differential force, the more rapidly the system evolved.

While RTI dynamics like these were expected to occur in quantum fluids, Spielman points out that proving it required a BEC with the right internal interactions. The BEC of sodium atoms in their experimental setup is one such system.

In general, Spielman says that cold atoms are a great tool for studying RTI because the numerical techniques used to describe them do not suffer from the same flaws as the Navier–Stokes equation used to model classical fluid dynamics. However, he notes that the transition to turbulence is “a tough problem that resides at the boundary between two conceptually different ways of thinking”, pushing the capabilities of both analytical and numerical techniques.

The scientists were also able to excite waves known as ripplon modes that travel along the interface of the two-component BEC. These are equivalent to classical capillary waves, the “ripples” seen when a droplet hits a water surface. Yanda Geng, a JQI PhD student working on this project, explains that every unstable RTI mode has a stable ripplon as a sibling. The difference is that ripplon modes only appear when a small sinusoidal modulation is added to the differential force. “Studying ripplon modes builds understanding of the underlying [RTI] mechanism,” Geng says.
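The sibling relationship between unstable and stable modes already appears in the textbook linear theory of a classical interface, and a short sketch may make it concrete. The code below assumes the standard inviscid dispersion relation s² = A·g·k − T·k³/(ρ_upper + ρ_lower), where A is the Atwood number; it is a classical analogue, not the model used by the JQI team, and the function name, parameters and numbers are illustrative.

```python
import math


def interface_mode(k, g, rho_upper, rho_lower, surface_tension=0.0):
    """Classify a small-amplitude mode of wavenumber k on a classical interface.

    Uses the textbook linear dispersion relation (an assumption of this sketch):
        s^2 = A * g * k - T * k^3 / (rho_upper + rho_lower)
    with Atwood number A = (rho_upper - rho_lower) / (rho_upper + rho_lower)
    and g > 0 meaning the force points from the upper fluid to the lower one.

    Returns ("unstable", growth_rate) if s^2 > 0, otherwise
    ("stable", angular_frequency) for an oscillating, ripple-like mode.
    """
    total_density = rho_upper + rho_lower
    atwood = (rho_upper - rho_lower) / total_density
    s_squared = atwood * g * k - surface_tension * k**3 / total_density
    if s_squared > 0:
        return "unstable", math.sqrt(s_squared)
    return "stable", math.sqrt(-s_squared)


# Heavy fluid on top: the mode grows exponentially.
print(interface_mode(k=1.0, g=9.8, rho_upper=2.0, rho_lower=1.0))
# Reverse the force: the same wavenumber now oscillates at the same rate.
print(interface_mode(k=1.0, g=-9.8, rho_upper=2.0, rho_lower=1.0))
```

Without surface tension, reversing the sign of the force turns a growth rate into an oscillation frequency of the same magnitude, which is the classical counterpart of each unstable mode having a stable partner.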

The flow of the spins

In a further experiment, the team studied a phenomenon that occurs as the RTI progresses and the spin components of the BEC flow in opposite directions along part of their shared interface. This is known as an interfacial counterflow. By transferring half the atoms into the other spin state after initializing the RTI process, the scientists were able to generate a chain of quantum mechanical whirlpools – a vortex chain – along the interface in regions where interfacial counterflow occurred.

Spielman, Campbell and their team are now working to create a cleaner interface in their two-component BEC, which would allow a wider range of experiments. “We are considering the thermal properties of this interface as a 1D quantum ‘string’,” says Spielman, adding that the height of such an interface is, in effect, an ultra-sensitive thermometer. Spielman also notes that interfacial waves in higher dimensions (such as a 2D surface) could be used for simulations of gravitational physics.

The research is described in Science Advances.

The post Quantum fluids mix like oil and water appeared first on Physics World.

Large-area triple-junction perovskite solar cell achieves record efficiency

Improving the efficiency of solar cells will likely be one of the key approaches to achieving net zero emissions in many parts of the world. Many types of solar cells will be required, with some of the better performances and efficiencies expected to come from multi-junction solar cells. Multi-junction solar cells comprise a vertical stack of semiconductor materials with distinct bandgaps, with each layer converting a different part of the solar spectrum to maximize conversion of the Sun’s energy to electricity.

When there are no constraints on the choice of materials, triple-junction solar cells can outperform double-junction and single-junction solar cells, with a power conversion efficiency (PCE) of up to 51% theoretically possible. But material constraints – due to fabrication complexity, cost or other technical challenges – mean that many such devices still perform far from the theoretical limits.

Perovskites are one of the most promising materials in the solar cell world today, but fabricating practical triple-junction solar cells beyond 1 cm² in area has remained a challenge. A research team from Australia, China, Germany and Slovenia set out to change this, recently publishing a paper in Nature Nanotechnology describing the largest and most efficient triple-junction perovskite–perovskite–silicon tandem solar cell to date.

When asked why this device architecture was chosen, Anita Ho-Baillie, one of the lead authors from The University of Sydney, states: “I am interested in triple-junction cells because of the larger headroom for efficiency gains”.

Addressing surface defects in perovskite solar cells

Solar cells formed from metal halide perovskites have potential to be commercially viable, due to their cost-effectiveness, efficiency, ease of fabrication and their ability to be paired with silicon in multi-junction devices. The ease of fabrication means that the junctions can be directly fabricated on top of each other through monolithic integration – which leads to only two terminal connections, instead of four or six. However, these junctions can still contain surface defects.

To enhance the performance and resilience of their triple-junction cell (top and middle perovskite junctions on a bottom silicon cell), the researchers optimized the chemistry of the perovskite material and the cell design. They addressed surface defects in the top perovskite junction by replacing traditional lithium fluoride materials with piperazine-1,4-diium chloride (PDCl). They also replaced methylammonium – which is commonly used in perovskite cells – with rubidium. “The rubidium incorporation in the bulk and the PDCl surface treatment improved the light stability of the cell,” explains Ho-Baillie.

To connect the two perovskite junctions, the team used gold nanoparticles on tin oxide. Because the gold was in a nanoparticle form, the junctions could be engineered to maximize the flow of electric charge and light absorption by the solar cell.

“Another interesting aspect of the study is the visualization of the gold nanoparticles [using transmission electron microscopy] and the critical point when they become a semi-continuous film, which is detrimental to the multi-junction cell performance due to its parasitic absorption,” says Ho-Baillie. “The optimization for achieving minimal particle coverage while achieving sufficient ohmic contact for vertical carrier flow are useful insights”.

Record performance for a large-scale perovskite triple-junction cell

Using these design strategies, Ho-Baillie and colleagues developed a 16 cm² triple-junction cell that achieved an independently certified steady-state PCE of 23.3% – the highest reported for a large-area device. While triple-junction perovskite solar cells have exhibited higher PCEs – with all-perovskite triple-junction cells reaching 28.7% and perovskite–perovskite–silicon devices reaching 27.1% – these were all achieved on a 1 cm² cell, not a large-area cell.
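To put these percentages in absolute terms, the short sketch below converts a PCE and a cell area into electrical output power. It assumes the conventional standard-test irradiance of 1000 W/m², which the article does not state, and the function name is illustrative.

```python
def output_power_w(area_cm2, pce_percent, irradiance_w_per_m2=1000.0):
    """Electrical output power in watts for a cell of the given area and PCE.

    The default irradiance of 1000 W/m^2 is the usual standard-test value
    and is an assumption here, not a figure from the article.
    """
    area_m2 = area_cm2 * 1e-4  # 1 cm^2 = 1e-4 m^2
    incident_power_w = area_m2 * irradiance_w_per_m2
    return incident_power_w * pce_percent / 100.0


# Large-area cell: 16 cm^2 at 23.3% PCE.
print(output_power_w(16, 23.3))
# Small-area cell: 1 cm^2 at 27.1% PCE.
print(output_power_w(1, 27.1))
```

Under that assumption the 16 cm² device delivers roughly 0.37 W, more than ten times the output of a 1 cm² cell even though its efficiency is lower.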

In this study, the researchers also developed a 1 cm² cell that was close to the best, with a PCE of 27.06%, but it is the large-area cell that’s the record breaker. The 1 cm² cell also passed the International Electrotechnical Commission’s (IEC) 61215 thermal cycling test, which exposes the cell to 200 cycles under extreme temperature swings, ranging from –40 to 85°C. During this test, the 1 cm² cell retained 95% of its initial efficiency after 407 h of continuous operation.

The successful thermal cycling test, combined with the high efficiency on a larger cell, shows that there could be potential for this triple-junction architecture in real-world settings in the near future, even though it is still far from its theoretical limit.

The post Large-area triple-junction perovskite solar cell achieves record efficiency appeared first on Physics World.

REVIEW: SIMON THE SORCERER ORIGINS – The magic still works!

A big name from the golden age of 1990s point-and-click games, Simon the Sorcerer is back with a brand-new instalment, “Origins”. This essential series, created in 1992 by the English studio Adventure Soft, returns with its trademark humour and magic, but this time in the hands of the Italian team at Smallthing Studios.

It’s 2025, so it is no surprise that the art direction is very different from the earlier games, with a well-crafted cartoon approach. We meet Simon as he moves into a new house; he quickly finds himself in a fantasy world, wearing his famous apprentice-wizard outfit.

The point-and-click approach has evolved too, with a simpler interface that is still effective. It is easy to interact with the environment, objects, characters, the inventory and so on. We played on Nintendo Switch 2 and the controls are perfect, especially in handheld mode.

As for the story, the wizard Calypso sends us in search of magic books so that we can get back home. Along the way we cross various lands, including a magic academy heavily inspired by Harry Potter. As in the most recent instalments, the game doesn’t hesitate to play with plenty of references.

Often breaking the fourth wall, Simon is full of caustic humour, and it frequently hits the mark. That humour carries over into the puzzles, with sometimes outlandish item combinations, so don’t hesitate to try everything to get where you need to go. Simon the Sorcerer Origins offers a well-judged difficulty that makes you rack your brains and do some backtracking without ever really being stuck for too long.

The sound design is pretty good too, although the dialogue is unfortunately only available in the original voice track with subtitles, but that’s a detail.

With a fairly short running time, Simon the Sorcerer Origins offers a brand-new adventure that will delight fans of this kind of adventure game. It is good to still see such games today; the genre has, in the end, endured through the years (especially on PC). The game has been out since yesterday, so go for it!

This article, REVIEW: SIMON THE SORCERER ORIGINS – The magic still works!, first appeared on Insert Coin.

Tim Berners-Lee: why the inventor of the Web is ‘optimistic, idealistic and perhaps a little naïve’

It’s rare to come across someone who’s been responsible for enabling a seismic shift in society that has affected almost everyone and everything. Tim Berners-Lee, who invented the World Wide Web, is one such person. His new memoir This is for Everyone unfolds the history and development of the Web and, in places, of the man himself.

Berners-Lee was born in London in 1955 to parents, originally from Birmingham, who met while working on the Ferranti Mark 1 computer and knew Alan Turing. Theirs was a creative, intellectual and slightly chaotic household. His mother could maintain a motorbike with fence wire and pliers, and was a crusader for equal rights in the workplace. His father – brilliant and absent minded – taught Berners-Lee about computers and queuing theory. A childhood of camping and model trains, it was, in Berners-Lee’s view, idyllic.

Berners-Lee had the good fortune to be supported by a series of teachers and managers who recognized his potential and unique way of working. He studied physics at the University of Oxford (his tutor “going with the flow” of Berners-Lee’s unconventional notation and ability to approach problems from oblique angles) and built his own computer. After graduating, he married and, following a couple of jobs, took a six-month placement at the CERN particle-physics lab in Geneva in 1980.

This placement set “a seed that sprouted into a tool that shook up the world”. Berners-Lee saw how difficult it was to share information stored in different languages in incompatible computer systems and how, in contrast, information flowed easily when researchers met over coffee, connected semi-randomly and talked. While at CERN, he therefore wrote a rough prototype for a program to link information in a type of web rather than a structured hierarchy.

The placement ended and the program was ignored, but four years later Berners-Lee was back at CERN. Now divorced and soon to remarry, he developed his vision of a “universal portal” to information. It proved to be the perfect time. All the tools necessary to achieve the Web – the Internet, address labelling of computers, network cables, data protocols, the hypertext language that allowed cross-referencing of text and links on the same computer – had already been developed by others.

Berners-Lee saw the need for a user-friendly interface, using hypertext that could link to information on other computers across the world. His excitement was “uncontainable”, and according to his line manager “few of us if any could understand what he was talking about”. But Berners-Lee’s managers supported him and freed his time away from his actual job to become the world’s first web developer.

Having a vision was one thing, but getting others to share it was another. People at CERN only really started to use the Web properly once the lab’s internal phone book was made available on it. As a student at the time, I can confirm that it was much, much easier to use the Web than log on to CERN’s clunky IBM mainframe, where phone numbers had previously been stored.

Wider adoption relied on a set of volunteer developers, working with open-source software, to make browsers and platforms that were attractive and easy to use. CERN agreed to donate the intellectual property for web software to the public domain, which helped. But the path to today’s Web was not smooth: standards risked diverging and companies wanted to build applications that hindered information sharing.

Feeling that “the Web was outgrowing my institution” and “would be a distraction” to a lab whose core mission was physics, Berners-Lee moved to the Massachusetts Institute of Technology in 1994. There he founded the World Wide Web Consortium (W3C) to ensure consistent, accessible standards were followed by everyone as the Web developed into a global enterprise. The progression sounds straightforward, although earlier accounts, such as James Gillies and Robert Cailliau’s 2000 book How the Web Was Born, imply some rivalry between institutions that is glossed over here.

The rest is history, but not quite the history that Berners-Lee had in mind. By 1995 big business had discovered the possibilities of the Web to maximize influence and profit. Initially inclined to advise people to share good things and not search for bad things, Berners-Lee had reckoned without the insidious power of “manipulative and coercive” algorithms on social networks. Collaborative sites like Wikipedia are closer to his vision of an ideal Web; an emergent good arising from individual empowerment. The flip side of human nature seems to come as a surprise.

The rest of the book brings us up to date with Berners-Lee’s concerns (data, privacy, misuse of AI, toxic online culture), his hopes (the good use of AI), a third marriage and his move into a data-handling business. There are some big awards and an impressive amount of name dropping; he is excited by Order of Merit lunches with the Queen and by sitting next to Paul McCartney’s family at the opening ceremony to the London Olympics in 2012. A flick through the index reveals names ranging from Al Gore and Bono to Lucian Freud. These are not your average computing technology circles.

There are brief character studies to illustrate some of the main players, but don’t expect much insight into their lives. This goes for Berners-Lee too, who doesn’t step back to particularly reflect on those around him, or indeed his own motives beyond that vision of a Web for all enabling the best of humankind. He is firmly future focused.

Still, there is no-one more qualified to describe what the Web was intended for, its core philosophy, and what caused it to develop to where it is today. You’ll enjoy the book whether you want an insight into the inner workings that make your web browsing possible, relive old and forgotten browser names, or see how big tech wants to monetize and monopolize your online time. It is an easy read from an important voice.

The book ends with a passionate statement for what the future could be, with businesses and individuals working together to switch the Web from “the attention economy to the intention economy”. It’s a future where users are no longer distracted by social media and manipulated by attention-grabbing algorithms; instead, computers and services do what users want them to do, with the information that users want them to have.

Berners-Lee is still optimistic, still an incurable idealist, still driven by vision. And perhaps still a little naïve too in believing that everyone’s values will align this time.

- 2025 Macmillan 400pp £25.00/$30.00hb

The post Tim Berners-Lee: why the inventor of the Web is ‘optimistic, idealistic and perhaps a little naïve’ appeared first on Physics World.

New protocol makes an elusive superconducting signature measurable

Understanding the mechanism of high-temperature superconductivity could unlock powerful technologies, from efficient energy transmission to medical imaging, supercomputing and more. Researchers at Harvard University and the Massachusetts Institute of Technology have designed a new protocol to study a candidate model for high-temperature superconductivity (HTS), described in Physical Review Letters.

The model, known as the Fermi-Hubbard model, is believed to capture the essential physics of cuprate high-temperature superconductors, materials composed of copper and oxygen. The model describes fermions, such as electrons, moving on a lattice. The fermions experience two competing effects: tunnelling and on-site interaction. Imagine students in a classroom: they may expend energy to switch seats (tunnelling), avoid a crowded desk (repulsive on-site interaction) or share desks with friends (attractive on-site interaction). Such behaviour mirrors that of electrons moving between lattice sites.
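For readers who want the model in symbols, the two competing effects are usually written as the standard single-band Fermi-Hubbard Hamiltonian below. The notation is the textbook convention rather than anything quoted from the paper: $t$ is the tunnelling amplitude, $U$ the on-site interaction, $c^\dagger_{i\sigma}$ creates a fermion of spin $\sigma$ on site $i$, $n_{i\sigma} = c^\dagger_{i\sigma} c_{i\sigma}$ counts fermions, and $\langle i,j \rangle$ runs over neighbouring sites.

$$
H = -t \sum_{\langle i,j \rangle, \sigma} \left( c^\dagger_{i\sigma} c_{j\sigma} + \mathrm{h.c.} \right) + U \sum_i n_{i\uparrow} n_{i\downarrow}
$$

In the classroom picture, the first term is the cost of switching seats, while the second is the crowded desk ($U > 0$, repulsive) or the desk shared with a friend ($U < 0$, attractive).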

Daniel Mark, first author of the study, notes that: “After nearly four decades of research, there are many detailed numerical studies and theoretical models on how superconductivity can emerge from the Fermi-Hubbard model, but there is no clear consensus [on exactly how it emerges].”

A precursor to understanding the underlying mechanism is testing whether the Fermi-Hubbard model gives rise to an important signature of cuprate HTS: d-wave pairing. This is a special type of electron pairing where the strength and sign of the pairing depend on the direction of electron motion. It contrasts with conventional low-temperature superconductors that exhibit s-wave pairing, in which the pairing strength is uniform in all directions.
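The difference between the two pairing symmetries can be illustrated with the simplest textbook forms of the pairing function on a square lattice. The sketch below assumes a constant s-wave form and the common d-wave form proportional to cos(kx) − cos(ky), with the lattice spacing set to 1; the function names and the normalization are illustrative and are not taken from the study.

```python
import math


def s_wave_gap(kx, ky, delta0=1.0):
    """Isotropic s-wave pairing: the same value for every direction of motion."""
    return delta0


def d_wave_gap(kx, ky, delta0=1.0):
    """Simplest square-lattice d-wave form (lattice spacing 1, assumed here).

    It changes sign between the x and y directions and vanishes on the
    diagonals kx = +/- ky.
    """
    return 0.5 * delta0 * (math.cos(kx) - math.cos(ky))


for label, (kx, ky) in {
    "along x": (math.pi, 0.0),
    "along y": (0.0, math.pi),
    "diagonal": (math.pi / 2, math.pi / 2),
}.items():
    print(label, s_wave_gap(kx, ky), round(d_wave_gap(kx, ky), 12))
```

The s-wave value is the same everywhere, whereas the d-wave value has opposite signs along the two lattice axes and is zero on the diagonal, which is the direction dependence the protocol aims to detect.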

Although physicists have developed robust methods for simulating the Fermi-Hubbard model with ultracold atoms, measuring d-wave pairing has been notoriously difficult. The new protocol aims to change that.

A change of perspective

A key ingredient in the protocol is the team’s use of “repulsive-to-attractive mapping”. The physics of HTS is often described by the repulsive Fermi-Hubbard model, in which electrons pay an energetic penalty for occupying the same lattice site, like disagreeing students sharing a desk. In this model, detecting d-wave pairing requires fermions to maintain a fragile quantum state as they move over large distances, which necessitates carefully fine-tuned experimental parameters.

To make the measurement more robust to experimental imperfection, the authors use a clever mathematical trick: they map from the repulsive model to the attractive one. In the attractive model, electrons receive an energetic benefit from being close together, like two friends in a classroom. The mapping is achieved by a particle–hole transformation, wherein spin-down electrons are reinterpreted as holes and vice versa. After mapping, the d-wave pairing signal becomes an observable that conserves local fermion number, thereby circumventing the challenge of long-range motion.
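In its standard textbook form on a bipartite lattice (a sketch in conventional notation, not an expression quoted from the paper), the particle–hole transformation acts only on the spin-down fermions. Here $c_{i\sigma}$ annihilates a fermion of spin $\sigma$ on site $i$, $n_{i\sigma} = c^\dagger_{i\sigma} c_{i\sigma}$, $U$ is the on-site interaction strength, and $(-1)^i$ is $+1$ on one sublattice and $-1$ on the other:

$$
c_{i\downarrow} \;\to\; (-1)^i \, c^\dagger_{i\downarrow}, \qquad c_{i\uparrow} \;\to\; c_{i\uparrow}, \qquad n_{i\downarrow} \;\to\; 1 - n_{i\downarrow}
$$

$$
U \, n_{i\uparrow} n_{i\downarrow} \;\to\; U \, n_{i\uparrow} - U \, n_{i\uparrow} n_{i\downarrow}
$$

The nearest-neighbour tunnelling term is unchanged because the sign factors from neighbouring sites cancel the minus sign from reordering the operators. The interaction therefore flips from $+U$ to $-U$, with a leftover single-particle term $U n_{i\uparrow}$ that only shifts the energy of the spin-up fermions.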

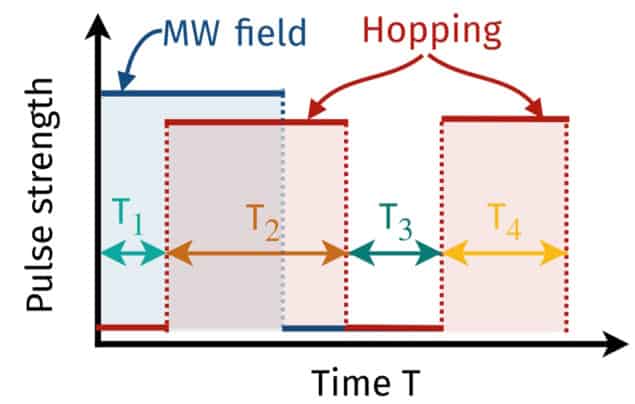

In its initial form, the d-wave pairing signal is difficult to measure. Drawing inspiration from digital quantum gates, the researchers divide their complex system into subsystems composed of pairs of lattice sites, or dimers. Then, they apply a pulse sequence to make the observable measurable by simply counting fermions – a standard technique in the lab.

The pulse sequence begins with a global microwave pulse to manipulate the spin of the fermions, followed by a series of “hopping” and “idling” steps. The hopping step involves lowering the barrier between lattice sites, thereby increasing tunnelling. The idling step involves raising the barrier, allowing the system to evolve without tunnelling. Every step is carefully timed to reveal the d-wave pairing information at the end of the sequence.
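Why the timing of a hopping step matters can be seen in the simplest possible case: a single particle on an isolated dimer. The sketch below assumes a two-site Hamiltonian with tunnelling amplitude J and no interactions, for which the probability of finding the particle on the other site after time t is sin²(Jt/ħ). It is a textbook illustration, not the pulse sequence from the paper, and the names and units are illustrative.

```python
import math


def transfer_probability(tunnelling_j, duration, hbar=1.0):
    """Probability that a particle starting on one site of a dimer is found
    on the other site after tunnelling for the given duration.

    Assumes a single non-interacting particle with tunnelling amplitude J,
    so the result is sin^2(J * t / hbar). Units are arbitrary (hbar = 1).
    """
    return math.sin(tunnelling_j * duration / hbar) ** 2


J = 1.0
# "Hopping" for t = pi / (4 J) leaves the particle in an equal superposition.
print(transfer_probability(J, math.pi / (4 * J)))
# "Hopping" for t = pi / (2 J) transfers it completely.
print(transfer_probability(J, math.pi / (2 * J)))
# "Idling" with the barrier raised (J = 0) leaves it where it started.
print(transfer_probability(0.0, 5.0))
```

Choosing the duration of a hopping step therefore acts like choosing the rotation angle of a two-level gate, which is the sense in which the analogue system can be driven with digital-style operations.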

The researchers report that their protocol is sample-efficient, experimentally viable, and generalizable to other observables that conserve local fermion number and act on dimers.

This work adds to a growing field that combines components of analogue quantum systems with digital gates to deeply study complex quantum phenomena. “All the experimental ingredients in our protocol have been demonstrated in existing experiments, and we are in discussion with several groups on possible use cases,” Mark tells Physics World.

The post New protocol makes an elusive superconducting signature measurable appeared first on Physics World.

Interface engineered ferromagnetism

Exchange-coupled interfaces offer a powerful route to stabilising and enhancing ferromagnetic properties in two-dimensional materials, such as transition metal chalcogenides. These materials exhibit strong correlations among charge, spin, orbital, and lattice degrees of freedom, making them an exciting area for emergent quantum phenomena.

Cr₂Te₃’s crystal structure naturally forms layers that behave like two-dimensional sheets of magnetic material. Each layer has magnetic ordering (ferromagnetism), but the layers are not tightly bonded in the third dimension and are considered “quasi-2D.” These layers are useful for interface engineering. Using a vacuum-based technique for atomically precise thin-film growth, known as molecular beam epitaxy, the researchers demonstrate wafer-scale synthesis of Cr₂Te₃ down to monolayer thickness on insulating substrates. Remarkably, robust ferromagnetism persists even at the monolayer limit, a critical milestone for 2D magnetism.

When Cr₂Te₃ is proximitized (an effect that occurs when one material is placed in close physical contact with another so that its properties are influenced by the neighbouring material) to a topological insulator, specifically (Bi,Sb)₂Te₃, the Curie temperature, the threshold between ferromagnetic and paramagnetic phases, increases from ~100 K to ~120 K. This enhancement is experimentally confirmed via polarized neutron reflectometry, which reveals a substantial boost in magnetization at the interface.

Theoretical modelling attributes this magnetic enhancement to the Bloembergen–Rowland interaction, which is a long-range exchange mechanism mediated by virtual intraband transitions. Crucially, this interaction is facilitated by the topological insulator’s topologically protected surface states, which are spin-polarized and robust against disorder. These states enable long-distance magnetic coupling across the interface, suggesting a universal mechanism for Curie temperature enhancement in topological insulator-coupled magnetic heterostructures.

This work not only demonstrates a method for stabilizing 2D ferromagnetism but also opens the door to topological electronics, where magnetism and topology are co-engineered at the interface. Such systems could enable novel quantum hybrid devices, including spintronic components, topological transistors, and platforms for realizing exotic quasiparticles like Majorana fermions.

Read the full article

Enhanced ferromagnetism in monolayer Cr₂Te₃ via topological insulator coupling

Yunbo Ou et al 2025 Rep. Prog. Phys. 88 060501

Do you want to learn more about this topic?

Interacting topological insulators: a review by Stephan Rachel (2018)

The post Interface engineered ferromagnetism appeared first on Physics World.

Probing the fundamental nature of the Higgs Boson

First proposed in 1964, the Higgs boson plays a key role in explaining why many elementary particles of the Standard Model have a rest mass. Nearly five decades later, in 2012, it was observed by the ATLAS and CMS collaborations at the Large Hadron Collider (LHC), confirming the prediction.

This discovery made headline news at the time and, since then, the two collaborations have been performing a series of measurements to establish the fundamental nature of the Higgs boson field and of the quantum vacuum.

One key measurement comes from studying a process known as off-shell Higgs boson production. This is the creation of Higgs bosons with a mass significantly higher than their typical on-shell mass of 125 GeV. This phenomenon occurs due to quantum mechanics, which allows particles to temporarily fluctuate in mass.

This kind of production is harder to detect but can reveal deeper insights into the Higgs boson’s properties, especially its total width, which relates to how long it exists before decaying. This, in turn, allows us to test key predictions made by the Standard Model of particle physics.

Previous observations of this process had been severely limited in their sensitivity. To improve on this, the ATLAS collaboration had to introduce a completely new way of interpreting their data.

They were able to provide evidence for off-shell Higgs boson production with a significance of 2.5𝜎 (corresponding to a 99.38% likelihood), using events with four electrons or muons, compared to a significance of 0.8𝜎 using traditional methods in the same channel.
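The link between a significance quoted in 𝜎 and a percentage can be checked with a few lines of Python. This is a minimal sketch that assumes the quoted percentage is the one-sided cumulative probability of a standard normal distribution; the function name `one_sided_confidence` is ours and not taken from the ATLAS analysis.

```python
import math


def one_sided_confidence(n_sigma: float) -> float:
    """Probability that a standard normal variable lies below n_sigma."""
    return 0.5 * (1.0 + math.erf(n_sigma / math.sqrt(2.0)))


# Compare the traditional method (0.8 sigma) with the new one (2.5 sigma).
for n_sigma in (0.8, 2.5):
    percent = 100.0 * one_sided_confidence(n_sigma)
    print(f"{n_sigma} sigma -> {percent:.2f}%")
```

Under this convention 2.5𝜎 corresponds to about 99.38%, matching the figure quoted above, while 0.8𝜎 corresponds to a much weaker level of confidence.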

The results mark an important step forward in understanding the Higgs boson as well as other high-energy particle physics phenomena.

Read the full article

The ATLAS Collaboration, 2025 Rep. Prog. Phys. 88 057803

The post Probing the fundamental nature of the Higgs Boson appeared first on Physics World.

Fabrication and device performance of NiO/Ga₂O₃ heterojunction power rectifiers

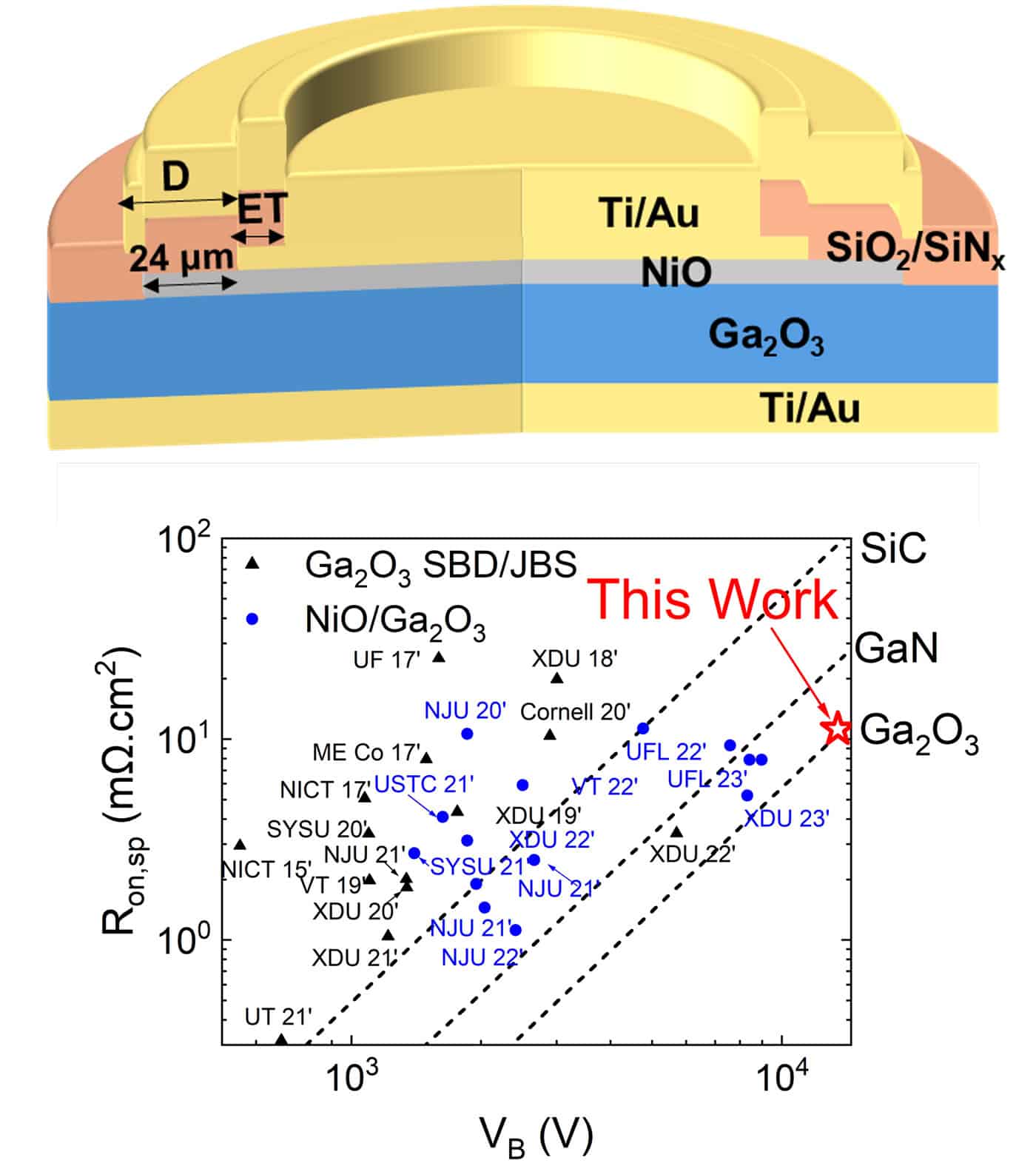

This talk shows how integrating p-type NiO to form NiO/Ga₂O₃ heterojunction rectifiers overcomes that barrier, enabling record-class breakdown and Ampere-class operation. It will cover device structure/process optimization, thermal stability to high temperatures, and radiation response – with direct ties to today’s priorities: EV fast charging, AI data‑center power systems, and aerospace/space‑qualified power electronics.

An interactive Q&A session follows the presentation.

Jian-Sian Li received his PhD in chemical engineering from the University of Florida in 2024, where his research focused on NiO/β-Ga₂O₃ heterojunction power rectifiers, including device design, process optimization, fast switching, high-temperature stability, and radiation tolerance (γ, neutron, proton). His work includes extensive electrical characterization and microscopy/TCAD analysis supporting device physics and reliability in harsh environments. Previously, he completed his BS and MS at National Taiwan University (2015, 2018), with research spanning phoretic/electrokinetic colloids, polymers for OFETs/PSCs, and solid-state polymer electrolytes for Li-ion batteries. He has since transitioned to industry at Micron Technology.

The post Fabrication and device performance of NiO/Ga₂O₃ heterojunction power rectifiers appeared first on Physics World.

Randomly textured lithium niobate gives snapshot spectrometer a boost

A new integrated “snapshot spectroscopy” system developed in China can determine the spectral and spatial composition of light from an object with much better precision than other existing systems. The instrument uses randomly textured lithium niobate and its developers have used it for astronomical imaging and materials analysis – and they say that other applications are possible.

Spectroscopy is crucial to analysis of all kinds of objects in science and engineering, from studying the radiation emitted by stars to identifying potential food contaminants. Conventional spectrometers – such as those used on telescopes – rely on diffractive optics to separate incoming light into its constituent wavelengths. This makes them inherently large, expensive and inefficient at rapid image acquisition as the light from each point source has to be spatially separated to resolve the wavelength components.

In recent years researchers have combined computational methods with advanced optical sensors to create computational spectrometers with the potential to rival conventional instruments. One such approach is hyperspectral snapshot imaging, which captures both spectral and spatial information in the same image. There are currently two main snapshot-imaging techniques available. Narrowband-filtered snapshot spectral imagers comprise a mosaic pattern of narrowband filters and acquire an image by taking repeated snapshots at different wavelengths. However, these trade spatial resolution for spectral resolution, as each extra band requires its own tile within the mosaic. A more complex alternative design – the broadband-modulated snapshot spectral imager – uses a single, broadband detector covered with a spatially varying element such as a metasurface that interacts with the light and imprints spectral encoding information onto each pixel. However, these are complex to manufacture and their spectral resolution is limited to the nanometre scale.

Random thicknesses

In the new work, researchers led by Lu Fang at Tsinghua University in Beijing unveil a spectroscopy technique that utilizes the nonlinear optical properties of lithium niobate to achieve sub-Ångström spectral resolution in a simply fabricated, integrated snapshot detector they call RAFAEL. A lithium niobate layer with random, sub-wavelength thickness variations is surrounded by distributed Bragg reflectors, forming optical cavities. These are integrated into a stack with a set of electrodes. Each cavity corresponds to a single pixel. Incident light enters from one side of a cavity, interacting with the lithium niobate repeatedly before exiting and being detected. Because lithium niobate is nonlinear, its response varies with the wavelength of the light.

The researchers then applied a bias voltage using the electrodes. The nonlinear optical response of lithium niobate means that this bias alters its response to light differently at different wavelengths. Moreover, the random variation of the lithium niobate’s thickness around the surface means that the wavelength variation is spatially specific.

The researchers designed a machine learning algorithm and trained it to use this variation of applied bias voltage with resulting wavelength detected at each point to reconstruct the incident wavelengths on the detector at each point in space.

“The randomness is useful for making the equations independent,” explains Fang. “We want to have uncorrelated equations so we can solve them.”
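Fang’s point about independent equations can be illustrated with a toy linear model. The sketch below is not the team’s machine-learning reconstruction: it assumes, purely for illustration, that each bias-voltage setting yields one reading that is a weighted sum of four unknown spectral intensities. The names `solve_linear` and `measure`, and all the numbers, are hypothetical.

```python
import random


def solve_linear(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting.

    Returns None when the equations are not independent (singular matrix).
    """
    n = len(rhs)
    # Work on an augmented copy so the caller's data is left untouched.
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            return None
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for row in range(n - 1, -1, -1):
        tail = sum(aug[row][k] * x[k] for k in range(row + 1, n))
        x[row] = (aug[row][n] - tail) / aug[row][row]
    return x


def measure(responses, spectrum):
    """One detector reading per row: a weighted sum of the spectrum."""
    return [sum(w * s for w, s in zip(row, spectrum)) for row in responses]


random.seed(0)
true_spectrum = [0.2, 1.0, 0.5, 0.1]  # hypothetical intensities

# Random responses: every setting weights the wavelengths differently.
random_responses = [[random.random() for _ in range(4)] for _ in range(4)]
recovered = solve_linear(random_responses,
                         measure(random_responses, true_spectrum))

# Identical responses: four copies of the same equation, nothing to solve.
uniform_responses = [[0.5, 0.5, 0.5, 0.5] for _ in range(4)]
degenerate = solve_linear(uniform_responses,
                          measure(uniform_responses, true_spectrum))

print("random responses   ->", recovered)
print("identical responses ->", degenerate)
```

With random weights the four readings pin down the four unknowns, whereas identical weights give four copies of one equation and the solver reports that no unique answer exists.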

Thousands of stars

The researchers showed that they could achieve 88 Hz snapshot spectroscopy on a grid of 2048×2048 pixels with a spectral resolution of 0.5 Å (0.05 nm) between wavelengths of 400–1000 nm. They demonstrated this by capturing the full atomic absorption spectra of up to 5600 stars in a single snapshot. This is a two to four orders of magnitude improvement in observational efficiency over world-class astronomical spectrometers. They also demonstrated other applications, including a materials analysis challenge involving the distinction of a real leaf from a fake one. The two looked identical at optical wavelengths, but, using its broader range of wavelengths, RAFAEL was able to distinguish between the two.
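A quick back-of-the-envelope calculation gives a sense of scale for these figures. The sketch below assumes, for illustration only, one spectral channel per 0.05 nm resolution element and one spectrum per pixel per frame; the actual sampling used by RAFAEL may differ.

```python
# Figures quoted in the article.
span_nm = 1000 - 400          # spectral range covered, in nm
resolution_nm = 0.05          # 0.5 angstrom expressed in nm
pixels = 2048 * 2048          # detector grid
frame_rate_hz = 88            # snapshots per second

# Assumption: one channel per resolution element across the range.
channels = round(span_nm / resolution_nm)
# Assumption: every pixel delivers one spectrum per snapshot.
spectra_per_second = pixels * frame_rate_hz

print(f"spectral channels per pixel: {channels}")
print(f"pixels per snapshot:         {pixels}")
print(f"spectra per second:          {spectra_per_second}")
```

Under these assumptions each pixel resolves about 12,000 channels, and the detector delivers several hundred million spectra every second.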

The researchers are now attempting to improve the device further: “I still think that sub-Ångström is not the ending – it’s just the starting point,” says Fang. “We want to push the limit of our resolution to the picometre.” In addition, she says, they are working on further integration of the device – which requires no specialized lithography – for easier use in the field. “We’ve already put this technology on a drone platform,” she reveals. The team is also working with astronomical observatories such as Gran Telescopio Canarias in La Palma, Spain.

The research is described in Nature.

Computational imaging expert David Brady of Duke University in North Carolina is impressed by the instrument. “It’s a compact package with extremely high spectral resolution,” he says. “Typically an optical instrument, like a CMOS sensor that’s used here, is going to have between 10,000 and 100,000 photo-electrons per pixel. That’s way too many photons for getting one measurement… I think you’ll see that with spectral imaging as is done here, but also with temporal imaging. People are saying you don’t need to go at 30 frames per second, you can go at a million frames per second and push closer to the single photon limit, and then that would require you to do computation to figure out what it all means.”

The post Randomly textured lithium niobate gives snapshot spectrometer a boost appeared first on Physics World.

Tumour-specific radiofrequency fields suppress brain cancer growth

A research team headed up at Wayne State University School of Medicine in the US has developed a novel treatment for glioblastoma, based on exposure to low levels of radiofrequency electromagnetic fields (RF EMF). The researchers demonstrated that the new therapy slows the growth of glioblastoma cells in vitro and, for the first time, showed its feasibility and clinical impact in patients with brain tumours.

The study, led by Hugo Jimenez and reported in Oncotarget, uses a device developed by TheraBionic that delivers amplitude-modulated 27.12 MHz RF EMF throughout the entire body, via a spoon-shaped antenna placed on the tongue. Using tumour-specific modulation frequencies, the device has already received US FDA approval for treating patients with advanced hepatocellular carcinoma (HCC, a liver cancer), while its safety and effectiveness are currently being assessed in clinical trials in patients with pancreatic, colorectal and breast cancer.

In this latest work, the team investigated its use in glioblastoma, an aggressive and difficult to treat brain tumour.

To identify the particular frequencies needed to treat glioblastoma, the team used a non-invasive biofeedback method developed previously to study patients with various types of cancer. The process involves measuring variations in skin electrical resistance, pulse amplitude and blood pressure while individuals are exposed to low levels of amplitude-modulated frequencies. The approach can identify the frequencies, usually between 1 Hz and 100 kHz, specific to a single tumour type.

Jimenez and colleagues first examined the impact of glioblastoma-specific amplitude-modulated RF EMF (GBMF) on glioblastoma cells, exposing various cell lines to GBMF for 3 h per day at the exposure level used for patient treatments. After one week, GBMF decreased the proliferation of three glioblastoma cell lines (U251, BTCOE-4765 and BTCOE-4795) by 34.19%, 15.03% and 14.52%, respectively.

The team note that the level of this inhibitive effect (15–34%) is similar to that observed in HCC cell lines (19–47%) and breast cancer cell lines (10–20%) treated with tumour-specific frequencies. A fourth glioblastoma cell line (BTCOE-4536) was not inhibited by GBMF, for reasons currently unknown.

Next, the researchers examined the effect of GBMF on cancer stem cells, which are responsible for treatment resistance and cancer recurrence. The treatment decreased the tumour sphere-forming ability of U251 and BTCOE-4795 cells by 36.16% and 30.16%, respectively – also a comparable range to that seen in HCC and breast cancer cells.

Notably, these effects were only induced by frequencies associated with glioblastoma. Exposing glioblastoma cells to HCC-specific modulation frequencies had no measurable impact and was indistinguishable from sham exposure.

Looking into the underlying treatment mechanisms, the researchers hypothesized that – as seen in breast cancer and HCC – glioblastoma cell proliferation is mediated by T-type voltage-gated calcium channels (VGCC). In the presence of a VGCC blocker, GBMF did not inhibit cell proliferation, confirming that GBMF inhibition of cell proliferation depends on T-type VGCCs, in particular, a calcium channel known as CACNA1H.

The team also found that GBMF blocks the growth of glioblastoma cells by modulating the “Mitotic Roles of Polo-Like Kinase” signalling pathway, leading to disruption of the cells’ mitotic spindles, critical structures in cell replication.

A clinical first

Finally, the researchers used the TheraBionic device to treat two patients: a 38-year-old patient with recurrent glioblastoma and a 47-year-old patient with the rare brain tumour oligodendroglioma. The first patient showed signs of clinical and radiological benefit following treatment; the second exhibited stable disease and tolerated the treatment well.

“This is the first report showing feasibility and clinical activity in patients with brain tumour,” the authors write. “Similarly to what has been observed in patients with breast cancer and hepatocellular carcinoma, this report shows feasibility of this treatment approach in patients with malignant glioma and provides evidence of anticancer activity in one of them.”

The researchers add that a previous dosimetric analysis of this technique measured a whole-body specific absorption rate (SAR, the rate of energy absorbed by the body when exposed to RF EMF) of 1.35 mW/kg and a peak spatial SAR (over 1 g of tissue) of 146–352 mW/kg. These values are well within the safety limits set by the ICNIRP (whole-body SAR of 80 mW/kg; peak spatial SAR of 2000 mW/kg). Organ-specific values for grey matter, white matter and the midbrain also had mean SAR ranges well within the safety limits.
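The safety margin implied by these numbers is easy to make explicit. The following sketch simply divides the measured values quoted above by the corresponding limits, taking the upper end of the peak spatial SAR range as the worst case; the dictionary names are ours.

```python
# Values quoted in the article, all in mW/kg.
limits = {"whole_body": 80.0, "peak_spatial": 2000.0}
measured = {"whole_body": 1.35, "peak_spatial": 352.0}  # 352 = top of range

# Fraction of each limit actually reached by the device.
fractions = {name: measured[name] / limits[name] for name in limits}

for name, fraction in fractions.items():
    print(f"{name}: {100 * fraction:.1f}% of the limit")
```

On these figures the whole-body exposure is under 2% of the limit, and even the highest peak spatial value stays below 18%.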

The team concludes that the results justify future preclinical and clinical studies of the TheraBionic device in this patient population. “We are currently in the process of designing clinical studies in patients with brain tumors,” Jimenez tells Physics World.

The post Tumour-specific radiofrequency fields suppress brain cancer growth appeared first on Physics World.

Entangled light leads to quantum advantage

Physicists at the Technical University of Denmark have demonstrated what they describe as a “strong and unconditional” quantum advantage in a photonic platform for the first time. Using entangled light, they were able to reduce the number of measurements required to characterize their system by a factor of 10¹¹, with a correspondingly huge saving in time.

“We reduced the time it would take from 20 million years with a conventional scheme to 15 minutes using entanglement,” says Romain Brunel, who co-led the research together with colleagues Zheng-Hao Liu and Ulrik Lund Andersen.
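The two durations in Brunel’s quote can be compared directly as a sanity check. This is a rough sketch that assumes a 365.25-day year; it only shows that the implied speed-up is of the same order as the quoted reduction in measurements.

```python
import math

MINUTES_PER_YEAR = 365.25 * 24 * 60  # assumption: Julian year

conventional_minutes = 20e6 * MINUTES_PER_YEAR  # 20 million years
entangled_minutes = 15.0

speedup = conventional_minutes / entangled_minutes
print(f"speed-up: {speedup:.2e} (about 10^{math.log10(speedup):.1f})")
```

The ratio comes out at several times 10¹¹, consistent in order of magnitude with the factor by which the number of measurements was reduced.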

Although the research, which is described in Science, is still at a preliminary stage, Brunel says it shows that major improvements are achievable with current photonic technologies. In his view, this makes it an important step towards practical quantum-based protocols for metrology and machine learning.

From individual to collective measurement

Quantum devices are hard to isolate from their environment and extremely sensitive to external perturbations. That makes it a challenge to learn about their behaviour.

To get around this problem, researchers have tried various “quantum learning” strategies that replace individual measurements with collective, algorithmic ones. These strategies have already been shown to reduce the number of measurements required to characterize certain quantum systems, such as superconducting electronic platforms containing tens of quantum bits (qubits), by as much as a factor of 10⁵.

A photonic platform

In the new study, Brunel, Liu, Andersen and colleagues obtained a quantum advantage in an alternative “continuous-variable” photonic platform. The researchers note that such platforms are far easier to scale up than superconducting qubits, which they say makes them a more natural architecture for quantum information processing. Indeed, photonic platforms have already been crucial to advances in boson sampling, quantum communication, computation and sensing.

The team’s experiment works with conventional, “imperfect” optical components and consists of a channel containing multiple light pulses that share the same pattern, or signature, of noise. The researchers began by performing a procedure known as quantum squeezing on two beams of light in their system. This caused the beams to become entangled – a quantum phenomenon that creates such a strong linkage that measuring the properties of one instantly affects the properties of the other.

The team then measured the properties of one of the beams (the “probe” beam) in an experiment known as a 100-mode bosonic displacement process. According to Brunel, one can imagine this experiment as being like tweaking the properties of 100 independent light modes, which are packets or beams of light. “A ‘bosonic displacement process’ means you slightly shift the amplitude and phase of each mode, like nudging each one’s brightness and timing,” he explains. “So, you then have 100 separate light modes, and each one is shifted in phase space according to a specific rule or pattern.”

By comparing the probe beam to the second (“reference”) beam in a single joint measurement, Brunel explains that he and his colleagues were able to cancel out much of the uncertainty in these measurements. This meant they could extract more information per trial than they could have by characterizing the probe beam alone. This information boost, in turn, allowed them to significantly reduce the number of measurements – in this case, by a factor of 10¹¹.
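The way a joint measurement cancels shared noise can be mimicked with a toy simulation. The sketch below is not the DTU experiment: it uses ordinary Gaussian random numbers to reproduce the quadrature statistics of a two-mode squeezed state for a single mode, in units where the squeezed and anti-squeezed components have standard deviations e⁻ʳ and eʳ. The function `run_trials`, the squeezing parameter and the displacement value are all illustrative assumptions.

```python
import math
import random
import statistics


def run_trials(displacement, squeezing_r, trials, seed=0):
    """Simulate one quadrature of a probe and a reference beam.

    Both beams share a large (anti-squeezed) fluctuation, so each looks
    noisy on its own, but their difference contains only the small
    (squeezed) fluctuation plus the displacement applied to the probe.
    """
    rng = random.Random(seed)
    probe_only, joint = [], []
    for _ in range(trials):
        anti_squeezed = rng.gauss(0.0, math.exp(squeezing_r))
        squeezed = rng.gauss(0.0, math.exp(-squeezing_r))
        probe = (anti_squeezed + squeezed) / math.sqrt(2) + displacement
        reference = (anti_squeezed - squeezed) / math.sqrt(2)
        probe_only.append(probe)          # measuring the probe alone
        joint.append(probe - reference)   # joint (difference) measurement
    return probe_only, joint


probe_only, joint = run_trials(displacement=0.3, squeezing_r=1.0,
                               trials=10_000)
print("variance, probe alone:", statistics.pvariance(probe_only))
print("variance, joint:      ", statistics.pvariance(joint))
print("estimated displacement:", statistics.mean(joint))
```

In this toy model the joint measurement recovers the displacement with a far smaller spread than the probe alone, which is why fewer repetitions are needed to reach a given precision.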

While the DTU researchers acknowledge that they have not yet studied a practical, real-world system, they emphasize that their platform is capable of “doing something that no classical system will ever be able to do”, which is the definition of a quantum advantage. “Our next step will therefore be to study a more practical system in which we can demonstrate a quantum advantage,” Brunel tells Physics World.

The post Entangled light leads to quantum advantage appeared first on Physics World.

Queer Quest: a quantum-inspired journey of self-discovery

This episode of Physics World Stories features an interview with Jessica Esquivel and Emily Esquivel – the creative duo behind Queer Quest. The event created a shared space for 2SLGBTQIA+ Black and Brown people working in science, technology, engineering, arts and mathematics (STEAM).

Mental health professionals also joined Queer Quest, which was officially recognized by UNESCO as part of the International Year of Quantum Science and Technology (IYQ). Over two days in Chicago this October, the event brought science, identity and wellbeing into powerful conversation.

Jessica Esquivel, a particle physicist and associate scientist at Fermilab, is part of the Muon g-2 experiment, pushing the limits of the Standard Model. Emily Esquivel is a licensed clinical professional counsellor. Together, they run Oyanova, an organization empowering Black and Brown communities through science and wellness.

Quantum metaphors and resilience through connection

Queer Quest blended keynote talks with collective conversations, meditation and other wellbeing activities. Panellists drew on quantum metaphors – such as entanglement – to explore identity, community and mental health.

In a wide-ranging conversation with podcast host Andrew Glester, Jessica and Emily speak about the inspiration for the event, and the personal challenges they have faced within academia. They speak about the importance of building resilience through community connections, especially given the social tensions in the US right now.

Hear more from Jessica Esquivel in her 2021 Physics World Stories appearance on the latest developments in muon science.

This article forms part of Physics World‘s contribution to the 2025 International Year of Quantum Science and Technology (IYQ), which aims to raise global awareness of quantum physics and its applications.

Stay tuned to Physics World and our international partners throughout the year for more coverage of the IYQ.

Find out more on our quantum channel.

The post Queer Quest: a quantum-inspired journey of self-discovery appeared first on Physics World.

Fingerprint method can detect objects hidden in complex scattering media

Physicists have developed a novel imaging technique for detecting and characterizing objects hidden within opaque, highly scattering material. The researchers, from France and Austria, showed that their new mathematical approach, which utilizes the fact that hidden objects generate their own complex scattering pattern, or “fingerprint”, can work on biological tissue.

Viewing the inside of the human body is challenging due to the scattering nature of tissue. With ultrasound, when waves propagate through tissue they are reflected, bounce around and scatter chaotically, creating noise that obscures the signal from the object that the medical practitioner is trying to see. The further you delve into the body the more incoherent the image becomes.

There are techniques for overcoming these issues, but as scattering increases – in more complex media or as you push deeper through tissue – they struggle and unpicking the required signal becomes too complex.

The scientists behind the latest research, from the Institut Langevin in Paris, France and TU Wien in Vienna, Austria, say that rather than compensating for scattering, their technique instead relies on detecting signals from the hidden object in the disorder.

Objects buried in a material create their own complex scattering pattern, and the researchers found that if you know an object’s specific acoustic signal it’s possible to find it in the noise created by the surrounding environment.

“We cannot see the object, but the backscattered ultrasonic wave that hits the microphones of the measuring device still carries information about the fact that it has come into contact with the object we are looking for,” explains Stefan Rotter, a theoretical physicist at TU Wien.

Rotter and his colleagues examined how a series of objects scattered ultrasound waves in an interference-free environment. This created what they refer to as fingerprint matrices: measurements of the specific, characteristic way in which each object scattered the waves.

The team then developed a mathematical method that allowed them to calculate the position of each object when hidden in a scattering medium, based on its fingerprint matrix.

“From the correlations between the measured reflected wave and the unaltered fingerprint matrix, it is possible to deduce where the object is most likely to be located, even if the object is buried,” explains Rotter.
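The idea of locating an object by correlating a measurement with a known fingerprint can be sketched in one dimension. The example below is a simplified matched-filter analogue, not the authors’ fingerprint-matrix method: the “fingerprint” is a short fixed pattern (the length-13 Barker code, chosen here because it correlates weakly with shifted copies of itself), and the scattering medium is replaced by additive Gaussian noise. All names and values are illustrative.

```python
import random


def correlate_at(signal, template, offset):
    """Correlation between the template and the signal at one offset."""
    return sum(signal[offset + i] * t for i, t in enumerate(template))


def locate(signal, template):
    """Return the offset at which the template best matches the signal."""
    offsets = range(len(signal) - len(template) + 1)
    return max(offsets, key=lambda k: correlate_at(signal, template, k))


rng = random.Random(1)

# Known fingerprint, measured beforehand in a clean environment.
fingerprint = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]

# Measurement: background noise with the fingerprint buried at one position.
true_position = 37
signal = [rng.gauss(0.0, 0.4) for _ in range(100)]
for i, value in enumerate(fingerprint):
    signal[true_position + i] += value

estimated_position = locate(signal, fingerprint)
print("true position:     ", true_position)
print("estimated position:", estimated_position)
```

The correlation peaks where the buried pattern lines up with the stored fingerprint, which is the one-dimensional counterpart of deducing where the object is most likely to be.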

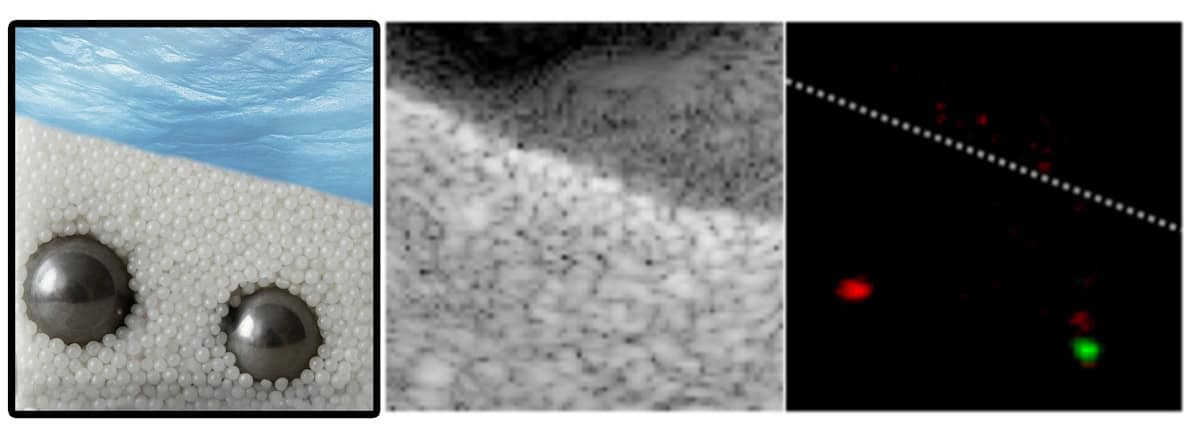

The team tested the technique in three different scenarios. The first experiment trialled the ultrasound imaging of metal spheres in a dense suspension of glass beads in water. Conventional ultrasound failed in this setup and the spheres were completely invisible, but with their novel fingerprint method the researchers were able to accurately detect them.

Next, to examine a medical application for the technique, the researchers embedded lesion markers often used to monitor breast tumours in a foam designed to mimic the ultrasound scattering of soft tissue. These markers can be challenging to detect due to scatterers randomly distributed in human tissue. With the fingerprint matrix, however, the researchers say that the markers were easy to locate.

Finally, the team successfully mapped muscle fibres in a human calf using the technique. They claim this could be useful for diagnosing and monitoring neuromuscular diseases.

According to Rotter and his colleagues, their fingerprint matrix method is a versatile and universal technique that could be applied beyond ultrasound to all fields of wave physics. They highlight radar and sonar as examples of sensing techniques where target identification and detection in noisy environments are long-standing challenges.

“The concept of the fingerprint matrix is very generally applicable – not only for ultrasound, but also for detection with light,” Rotter says. “It opens up important new possibilities in all areas of science where a reflection matrix can be measured.”

The researchers report their findings in Nature Physics.

The post Fingerprint method can detect objects hidden in complex scattering media appeared first on Physics World.

Ask me anything: Kirsty McGhee – ‘Follow what you love: you might end up doing something you never thought was an option’

What skills do you use every day in your job?

Obviously, I write: I wouldn’t be a very good science writer if I couldn’t. So communication skills are vital. Recently, for example, Qruise launched a new magnetic-resonance product for which I had to write a press release, create a new webpage and do social-media posts. That meant co-ordinating with lots of different people, finding out the key features to advertise, identifying the claims we wanted to make – and whether we had the data to back those claims up. I’m not an expert in quantum computing or magnetic-resonance imaging or even marketing so I have to pick things up fast and then translate technically complex ideas from physics and software into simple messages for a broader audience. Thankfully, my colleagues are always happy to help. Science writing is a difficult task but I think I’m getting better at it.

What do you like best and least about your job?

I love the variety and the fact that I’m doing so many different things all the time. If there’s a day I feel I want something a little bit lighter, I can do some social media or the website, which is more creative. On the other hand, if I feel I could really focus in detail on something then I can write some documentation that is a little bit more technical. I also love the flexibility of remote working, but I do miss going to the office and socialising with my colleagues on a regular basis. You can’t get to know someone as well online; it’s nicer to have time with them in person.

What do you know today, that you wish you knew when you were starting out in your career?

That’s a hard one. It would be easy to say I wish I’d known earlier that I could combine science and writing and make a career out of that. On the other hand, if I’d known that, I might not have done my PhD – and if I’d gone into writing straight after my undergraduate degree, I perhaps wouldn’t be where I am now. My point is, it’s okay not to have a clear plan in life. As children, we’re always asked what we want to be – in my case, my dream from about the age of four was to be a vet. But then I did some work experience in a veterinary practice and I realized I’m really squeamish. It was only when I was 15 or 16 that I discovered I wanted to do physics because I liked it and was good at it. So just follow the things you love. You might end up doing something you never even thought was an option.

The post Ask me anything: Kirsty McGhee – ‘Follow what you love: you might end up doing something you never thought was an option’ appeared first on Physics World.

SCOOBY-DOO x LUSH

As Halloween approaches, Lush has created a collection inspired by the famous gang of detectives as part of an exclusive limited-edition collaboration: the Scooby-Doo x Lush collection, available online and in stores since 9 October.

Drawing its inspiration from Hanna-Barbera’s timeless cartoon, Lush unveils a collection full of nostalgia that invites everyone to reconnect with the characters who sparked our imagination, while rediscovering the joy of relaxing after a day spent solving mysteries…

The range includes bath bombs in the shape of Scooby-Doo himself (€8) or the Mystery Machine (€9), as well as the Zoinks! shower jelly (€15) and the Mystery Inc. shower gel (€12.50 for 120 g) which, with its mysterious purple colour, smells just like Scooby Snacks! Add the famous Scooby Snacks body spray (€45…) and you are ready to solve the mysteries of Halloween…

The products can be packed together in a gift box shaped like the Mystery Machine! So cool!

More info at LUSH

The post SCOOBY-DOO x LUSH appeared first on Insert Coin.

New adaptive optics technology boosts the power of gravitational wave detectors

Future versions of the Laser Interferometer Gravitational Wave Observatory (LIGO) will be able to run at much higher laser powers thanks to a sophisticated new system that compensates for temperature changes in optical components. Known as FROSTI (for FROnt Surface Type Irradiator) and developed by physicists at the University of California Riverside, US, the system will enable next-generation machines to detect gravitational waves emitted when the universe was just 0.1% of its current age, before the first stars had even formed.

Gravitational waves are distortions in spacetime that occur when massive astronomical objects accelerate and collide. When these distortions pass through the four-kilometre-long arms of the two LIGO detectors, they create a tiny difference in the (otherwise identical) distance that light travels between the centre of the observatory and the mirrors located at the end of each arm. The problem is that detecting and studying gravitational waves requires these differences in distance to be measured with an accuracy of 10⁻¹⁹ m, which is 1/10 000th the size of a proton.

Extending the frequency range

LIGO overcame this barrier 10 years ago when it detected the gravitational waves produced when two black holes located roughly 1.3 billion light-years from Earth merged. Since then, it and two smaller facilities, KAGRA and VIRGO, have observed many other gravitational waves at frequencies ranging from 30 to 2000 Hz.

Observing waves at lower and higher frequencies in the gravitational wave spectrum remains challenging, however. At lower frequencies (around 10–30 Hz), the problem stems from vibrational noise in the mirrors. Although these mirrors are hefty objects – each one measures 34 cm across, is 20 cm thick and has a mass of around 40 kg – the incredible precision required to detect gravitational waves at these frequencies means that even the minute amount of energy they absorb from the laser beam is enough to knock them out of whack.

At higher frequencies (150–2000 Hz), measurements are instead limited by quantum shot noise. This is caused by the random arrival time of photons at LIGO’s output photodetectors and is a fundamental consequence of the fact that the laser field is quantized.

A novel adaptive optics device

Jonathan Richardson, the physicist who led this latest study, explains that FROSTI is designed to reduce quantum shot noise by allowing the mirrors to cope with much higher levels of laser power. At its heart is a novel adaptive optics device that is designed to precisely reshape the surfaces of LIGO’s main mirrors under laser powers exceeding 1 megawatt (MW), which is nearly five times the power used at LIGO today.
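Why more laser power helps against shot noise follows from photon-counting statistics. The sketch below assumes ideal Poisson statistics, where a mean of N detected photons fluctuates by √N, and treats “nearly five times” as exactly five; it ignores every other noise source in a real interferometer, so the numbers are indicative only.

```python
import math


def relative_shot_noise(mean_photons: float) -> float:
    """Fractional fluctuation sqrt(N)/N for Poisson-distributed counts."""
    return 1.0 / math.sqrt(mean_photons)


def shot_noise_improvement(power_ratio: float) -> float:
    """Factor by which relative shot noise falls when power is scaled up."""
    return math.sqrt(power_ratio)


# Photon number scales with laser power, so compare N with 5 * N.
baseline_photons = 1e6  # arbitrary illustrative count
before = relative_shot_noise(baseline_photons)
after = relative_shot_noise(5 * baseline_photons)

print(f"relative noise before: {before:.2e}")
print(f"relative noise after:  {after:.2e}")
print(f"improvement factor:    {shot_noise_improvement(5):.2f}")
```

Under these idealized assumptions a fivefold increase in power reduces the relative shot noise by a factor of about 2.2.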

Though its name implies cooling, FROSTI actually uses heat to restore the mirror’s surface to its original shape. It does this by projecting infrared radiation onto test masses in the interferometer to create a custom heat pattern that “smooths out” distortions and so allows for fine-tuned, higher-order corrections.

The single most challenging aspect of FROSTI’s design, and one that Richardson says shaped its entire concept, is the requirement that it cannot introduce even more noise into the LIGO interferometer. “To meet this stringent requirement, we had to use the most intensity-stable radiation source available – that is, an internal blackbody emitter with a long thermal time constant,” he tells Physics World. “Our task, from there, was to develop new non-imaging optics capable of reshaping the blackbody thermal radiation into a complex spatial profile, similar to one that could be created with a laser beam.”

Richardson anticipates that FROSTI will be a critical component for future LIGO upgrades – upgrades that will themselves serve as blueprints for even more sensitive next-generation observatories like the proposed Cosmic Explorer in the US and the Einstein Telescope in Europe. “The current prototype has been tested on a 40-kg LIGO mirror, but the technology is scalable and will eventually be adapted to the 440-kg mirrors envisioned for Cosmic Explorer,” he says.

Jan Harms, a physicist at Italy’s Gran Sasso Science Institute who was not involved in this work, describes FROSTI as “an ingenious concept to apply higher-order corrections to the mirror profile”. Though it still needs to pass the final test of being integrated into the actual LIGO detectors, Harms notes that “the results from the prototype are very promising”.

Richardson and colleagues are continuing to develop extensions to their technology, building on the successful demonstration of their first prototype. “In the future, beyond the next upgrade of LIGO (A+), the FROSTI radiation will need to be shaped into an even more complex spatial profile to enable the highest levels of laser power (1.5 MW) ultimately targeted,” explains Richardson. “We believe this can be achieved by nesting two or more FROSTI actuators together in a single composite, with each targeting a different radial zone of the test mass surfaces. This will allow us to generate extremely finely-matched optical wavefront corrections.”

The present study is detailed in Optica.

The post New adaptive optics technology boosts the power of gravitational wave detectors appeared first on Physics World.

NINJA GAIDEN 4 review – Time to slice again!

We had been waiting 13 years for a sequel in the Ninja Gaiden series. The wait is over: the fourth episode is finally available on almost every platform, and we had the chance to test it on Xbox Series X. Ninja Gaiden 4 is as demanding as ever, but it modernises the formula with accessibility options that are available at any time, which is a very welcome touch.

A modernised take on the genre

Ninja Gaiden is known for being difficult because of its hardcore combat. This instalment modernises that reputation by offering several difficulty modes from the very start: Hero, Standard or Hard. Personally, I chose Hero mode to enjoy a smoother experience and to feel, controller in hand, the full range of the hero’s powers. Note that combat remains just as intense, and some bosses are still fairly tough. If you are after a serious challenge, however, Standard and Hard modes will suit you.

If you want a good beat ’em up without too much difficulty, I recommend Hero mode, which automates certain mechanics, for example a perfect parry when the health gauge drops below 30%, or a simplified combo system. The game is divided into several chapters, each lasting roughly 30 to 45 minutes. Ninja Gaiden 4 has no manual save, so take care: checkpoints can be quite far apart.

Ninja Gaiden 4 puts you in the shoes of Yakumo, a young ninja of the Raven Clan whose mission is to kill the priestess capable of resurrecting the Dark Dragon. Instead, he spares her so that she can revive the dragon and he can destroy it for good. Yakumo goes against his clan’s orders and breaks free over the course of a dangerous journey, doing everything he can, with the help of his friends, to save Tokyo from the grip of demons. At the end of each chapter you receive a score that rates your mastery of the game. You will need to complete side missions to earn important resources, which are used to buy techniques and abilities tied to each weapon you obtain. Combat is fairly demanding, especially when enemies attack in large numbers, and you will have to dodge or counter every attack to survive. In combat, your weapons have two modes, one of which requires you to fill a gauge before you can unleash devastating attacks and break through the guard of elite enemies.

Ninja Gaiden 4 encourages you to explore every corner of the map for healing items or ninjacoins with which to buy new techniques. Traversal is quite varied in this new episode: you can use a grappling hook, glide through the air on raven wings, leap from rooftop to rooftop like a ninja, and even ride along buildings.

Ninja Gaiden 4 is a new episode that may divide series fans and newcomers. The game is more accessible and lets you enjoy the story and world of Ninja Gaiden without compromise. Veterans, however, will probably need to choose Hard mode to recover the original gameplay. That said, combat in these modes is just as impressive, thanks to excellent effects and graphics. Personally, my friends and I love this new iteration, and I recommend it if you have finished Lost Soul Aside and are looking for an intense experience.

Review by Pierre

This article, NINJA GAIDEN 4 review – Time to slice again!, first appeared on Insert Coin.

Review – Creative Aurvana Ace 3 earbuds

How good are Creative’s Aurvana Ace 3 earbuds?

Creative releases new models at a very regular pace, and this time it kindly sent us its new high-end earbuds, the Aurvana Ace 3. We also reviewed the V2 in March 2024.

Let’s see what they are worth and what improvements have been made. They are available for €149.99 directly from the brand’s website. On to the review!

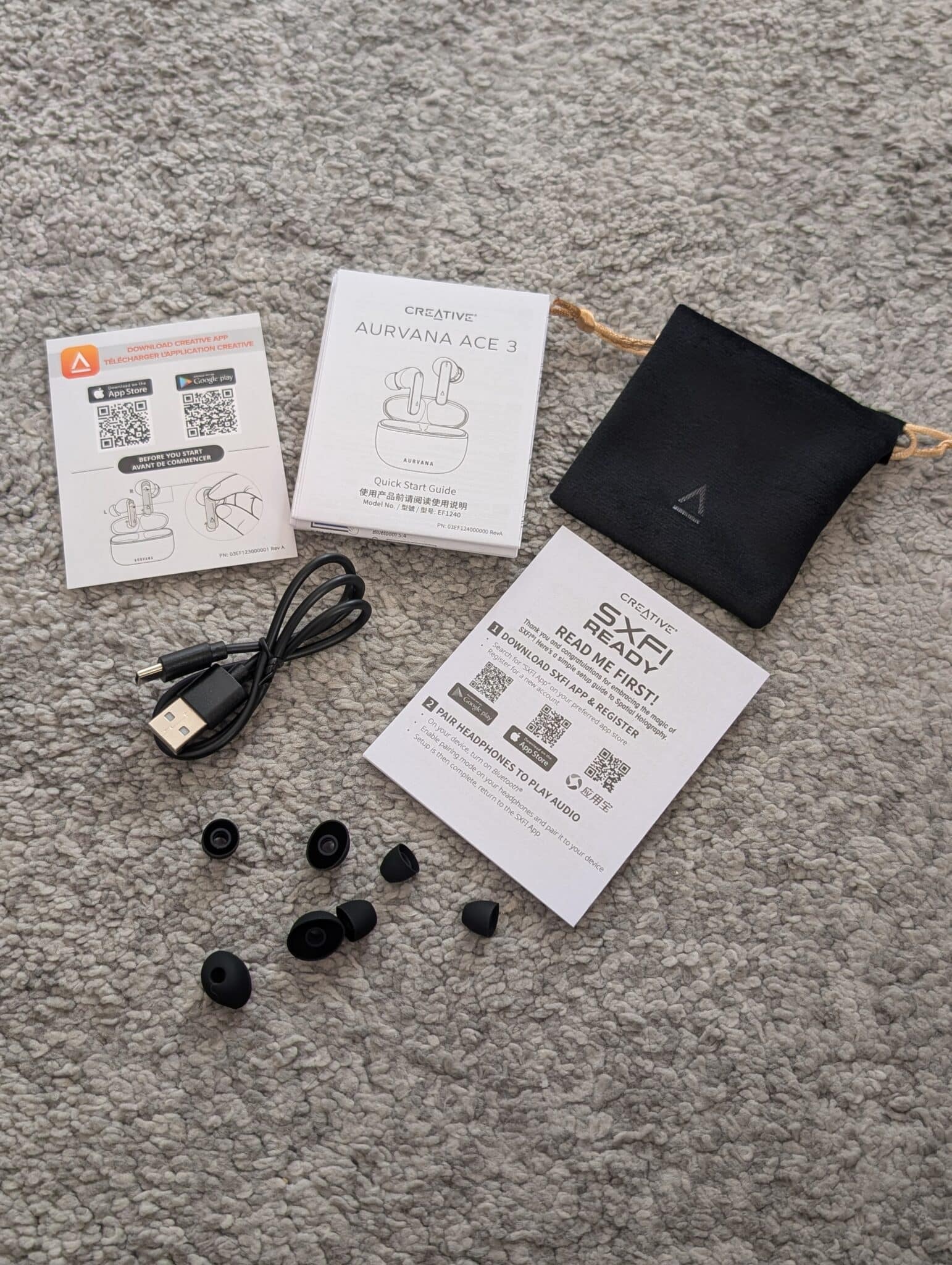

Unboxing

This time there is no trace of the orange accent that Creative has accustomed us to in recent years. The front of the box shows the earbuds just lifted out of their charging case, with the brand and model name right below. Simple, elegant, effective. The left side carries legal notices in several languages, while the right side shows the earbuds in use and lists the various technologies they contain.

The top shows the contents of the box, and the back lists the main technical specifications along with the dedicated apps.

Technical specifications

| Feature | Detail |
|---|---|
| Main audio technology | xMEMS (dual driver) + 10 mm dynamic driver |
| Supported audio codecs | LDAC + aptX Lossless (via Snapdragon Sound) |
| Connectivity | Bluetooth 5.4 |
| Active noise cancellation (ANC) | Adaptive hybrid system |
| Ambient mode | Yes |
| Water / sweat resistance | IPX5 |
| Claimed battery life | 7 hours per charge + ~26 hours (or 28 h depending on the source) with the case |
| Microphones / calls | Six microphones claimed, for clear calls |
| Wear detection (auto play/pause) | Yes, smart detection when earbuds are removed or reinserted |

Features

- Hybrid dual-driver system – an xMEMS (semiconductor) driver plus a 10 mm dynamic driver, combining precise treble with powerful bass.

- High-end, lossless audio support – compatible with Qualcomm Snapdragon Sound, the aptX Lossless codec and LDAC.

- Bluetooth 5.4 connectivity – supports the LE Audio standard and Auracast technology for audio sharing and broadcasting to multiple devices.

- Sound personalisation by Mimi Hearing Technologies (Mimi Sound Personalization) – a hearing test generates a profile, and the sound adapts in real time to your ears.

- “Adaptive hybrid” active noise cancellation (ANC) – ANC adjusts to the environment, and an “Ambient” mode lets in outside sound so you stay aware of what is happening around you.

- Wear detection (“Wear Detect”) – playback pauses automatically when you remove an earbud and resumes when you put it back.

- Touch controls – for play/pause, calls, voice assistant and so on.

- Mono mode – use a single earbud for more flexible listening.

- Water and sweat resistance – the earbuds are IPX5 rated, for use during sport or in light rain.

- Claimed battery life – up to 7 hours of listening on a single charge of the earbuds, and up to 26 hours combined with the case. Charging is via USB-C, with wireless charging also supported.

- Dedicated app (Creative App) – hearing profile, firmware updates and custom audio settings.

- Ear tips in several sizes – XS, S, M, L and XL, to fine-tune comfort and isolation.

Contents

- 1 x Creative Aurvana Ace 3

- 1 x USB-C charging case

- 1 x USB-C charging cable

- 1 x Pair of silicone ear tips (XS), (S), (M), (L) and (XL)

- 1 x Quick start guide

- 1 x Carrying pouch

Review

Creative returns to the spotlight with a new generation of in-ear earbuds: the Aurvana Ace 3. After the solid success of the Aurvana Ace and Ace 2, the Singaporean brand seems keen to assert its audio expertise a little further, blending technical innovation with musical sensitivity. Let’s start with the design, which is clean, functional and free of extravagance. The Aurvana Ace 3 follow the visual line of earlier Ace generations, with the same slightly ovoid case, a somewhat translucent lid and an embossed logo.

The case opens with well-judged resistance (firmer than on previous models, if memory serves) and the earbuds come out easily, with no fear of dropping them. They sit securely in the ear thanks to a semi-ergonomic shape that follows the outer ear naturally without creating pressure. They remain comfortable even after several hours, an area where Creative has clearly improved.

Build quality is good: clean assembly, precise finishing and a soft texture that resists fingerprints reasonably well, though not entirely. The earbuds are IPX5 rated, so they can withstand sweat or light rain, a real plus for use on the move or during sport.

The big new feature of this generation is Mimi technology, which personalises the sound to your hearing. After a short test, the earbuds adapt playback in real time to your sensitivity, subtly adjusting treble, mids and bass.

The result is striking: each listening session feels unique, natural and perfectly balanced. Voices gain clarity, instruments have more room to breathe, and you discover how much a sound “made for you” can transform the listening experience.

Active noise cancellation has always been the weak point of Aurvana models. With this version, Creative introduces adaptive hybrid ANC, which automatically adjusts its strength to the environment. In practice it works fairly well on continuous sounds, such as the rumble of a bus or the hum of air conditioning, but remains limited against sudden noises or voices.

This is not a deal-breaker: the aim is to isolate you enough to enjoy your music fully without cutting you off from the world entirely. The “transparency” mode left me more puzzled. It amplifies ambient sound in a natural way, which is useful for a walk in town or a bike ride, but while I was out running, for example, a car managed to take me by surprise, going from no engine noise at all to a deafening roar in an instant. A brief moment of panic. You can also switch very quickly from one mode to the other with a simple touch gesture, which makes everyday use fluid and intuitive.

The move to Bluetooth 5.4 is immediately noticeable. Pairing is almost instant and the connection is rock solid, even several metres from the smartphone. The LDAC and aptX Lossless codecs are of course supported, ensuring lossless playback if you have a compatible device.

For calls, the six microphones deliver clear voice pickup and effective handling of surrounding noise. Even outdoors, your voice stays crisp.

Claimed battery life is 7 hours of listening per charge, with around 26 additional hours from the case. In practice, with ANC on and volume at around 70%, expect 4 to 5 hours, which is still in the upper-middle range for this segment. The case charges over USB-C, and wireless charging is still supported.

A quick word on the two apps: Creative App and Super X-Fi. The first lets you customise everything: sound, touch controls, ANC, wear detection and firmware updates. It also hosts Mimi Sound Personalization, which adapts the sound to your hearing after a short test. Simple, smooth and effective, the app turns the earbuds into a genuinely tailored product, where every setting adjusts to your preferences and the way you listen.

The Super X-Fi app, for its part, reproduces immersive 3D sound faithful to the spatialisation of a home cinema. It lets you calibrate listening to the shape of your head and ears, adjust audio profiles and manage the earbuds’ settings.

To finish, let’s compare the Aurvana Ace 3 with last year’s model, which will also let us sum up this review. Today’s model brings several improvements over the Ace 2. The sound is richer and more detailed thanks to the hybrid dual-driver design (xMEMS + 10 mm dynamic). The big new feature is Mimi sound personalisation, which adjusts the sound to your hearing and is absent from the Ace 2. ANC is more precise and adaptive, connectivity moves up to Bluetooth 5.4 with LE Audio and Auracast support, and battery life reaches up to 7 h per charge and 26 h with the case. The Ace 3 also add six microphones and IPX5 resistance, offering a more complete and modern experience for a similar price.