Staying the course with lockdowns could end future pandemics in months

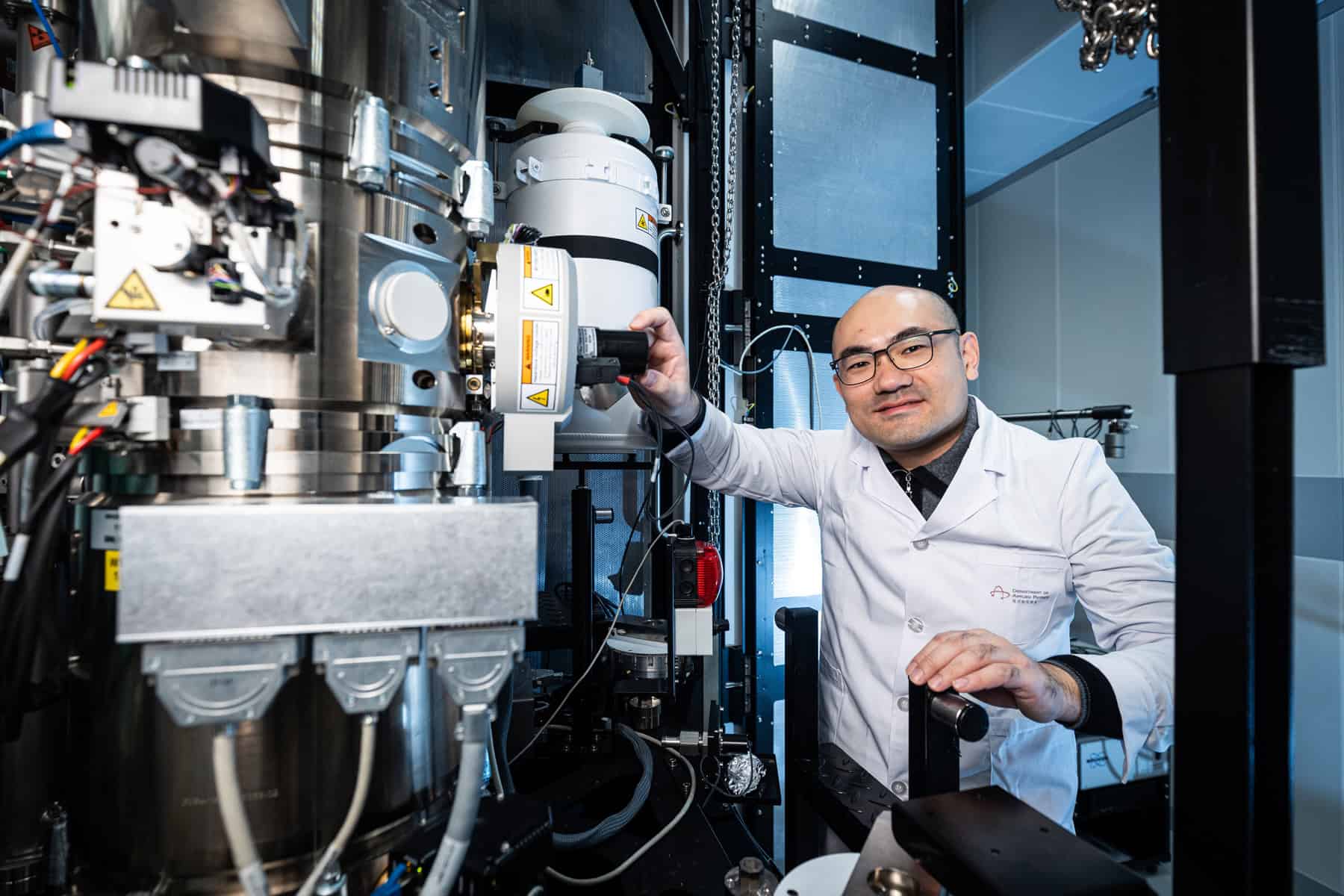

As a theoretical and mathematical physicist at Imperial College London, UK, Bhavin Khatri spent years using statistical physics to understand how organisms evolve. Then the COVID-19 pandemic struck, and like many other scientists, he began searching for ways to apply his skills to the crisis. This led him to realize that the equations he was using to study evolution could be repurposed to model the spread of the virus – and, crucially, to understand how it could be curtailed.

In a paper published in EPL, Khatri models the spread of a SARS-CoV-2-like virus using branching process theory, which he’d previously used to study how advantageous alleles (variations in a genetic sequence) become more prevalent in a population. He then uses this model to assess how long interventions such as lockdowns would need to be applied in order to completely eliminate infections, with the strength of the intervention measured in terms of the number of people each infected person goes on to infect (the virus’s effective reproduction number, R).

Tantalizingly, the paper concludes that applying such interventions worldwide in June 2020 could have eliminated the COVID virus by January 2021, several months before the widespread availability of vaccines reduced its impact on healthcare systems and led governments to lift restrictions on social contact. Physics World spoke to Khatri to learn more about his research and its implications for future pandemics.

What are the most important findings in your work?

One important finding is that we can accurately calculate the distribution of times required for a virus to become extinct by making a relatively simple approximation. This approximation amounts to assuming that people have relatively little population-level “herd” immunity to the virus – exactly the situation that many countries, including the UK, faced in March 2020.

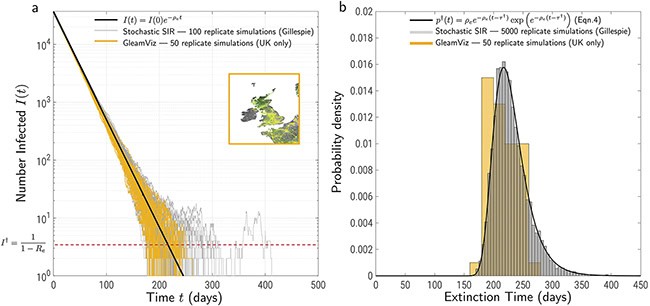

Making this approximation meant I could reduce the three coupled differential equations of the well-known SIR model (which models pandemics via the interplay between Susceptible, Infected and Recovered individuals) to a single differential equation for the number of infected individuals in the population. This single equation turned out to be the same one that physics students learn when studying radioactive decay. I then used the discrete stochastic version of exponential decay and standard approaches in branching process theory to calculate the distribution of extinction times.
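The reduction described above can be sketched with the textbook form of the SIR equations. This is an illustrative derivation rather than the paper's own notation: the symbols $\beta$ (transmission rate), $\gamma$ (recovery rate), $N$ (population size) and $I_0$ (initial number of infections) are assumptions introduced here, and the recovered compartment is written $R_{\mathrm{rec}}$ to keep it distinct from the reproduction number $R$.

```latex
% Textbook SIR model (assumed notation, not taken from the paper)
\begin{align}
\frac{dS}{dt} &= -\beta \frac{S I}{N}, &
\frac{dI}{dt} &= \beta \frac{S I}{N} - \gamma I, &
\frac{dR_{\mathrm{rec}}}{dt} &= \gamma I .
\end{align}

% With little herd immunity, S \approx N, so the infected equation decouples:
\begin{equation}
\frac{dI}{dt} \approx (\beta - \gamma)\, I = -\gamma\,(1 - R)\, I ,
\qquad R = \frac{\beta}{\gamma} .
\end{equation}

% For R < 1 this is the radioactive-decay equation, with solution and half-life
\begin{equation}
I(t) = I_0\, e^{-\gamma (1 - R)\, t},
\qquad
t_{1/2} = \frac{\ln 2}{\gamma\,(1 - R)} .
\end{equation}
```

In this form, a stronger intervention (smaller $R$) simply shortens the half-life of the infected population.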

Alongside the formal theory, I also used my experience in population genetic theory to develop an intuitive approach for calculating the mean of this extinction time distribution. In population genetics, when a mutation is sufficiently rare, changes in its number of copies in the population are dominated by randomness. This is true even if the mutation has a large selective advantage: it has to grow by chance to a sufficient critical size – on the order of 1/(selection strength) – for selection to take hold.

The same logic works in reverse when applied to a declining number of infections. Initially, they will decline deterministically, but once they go below a threshold number of individuals, changes in infection numbers become random. Using the properties of such random walks, I calculated an expression for the threshold number and the mean duration of the stochastic phase. These agree well with the formal branching process calculation.
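As a rough illustration of the two-phase picture, the sketch below estimates how long the deterministic phase would last before infections fall to the threshold. It is not the paper's expression. It assumes exponential decay at rate `gamma * (1 - r)`, and it assumes a threshold of order `1 / (1 - r)` by analogy with the 1/(selection strength) scale mentioned above. The function names and the parameter values in the usage lines are hypothetical.

```python
import math


def threshold_size(r):
    """Assumed threshold below which chance dominates.

    Taken to be of order 1 / (1 - r), by analogy with the
    1 / (selection strength) scale in population genetics.
    This is an illustrative assumption, not the paper's formula.
    """
    return 1.0 / (1.0 - r)


def deterministic_phase_days(i0, r, gamma, threshold=None):
    """Time for i0 infections to decay to the threshold.

    Assumes I(t) = i0 * exp(-gamma * (1 - r) * t), where gamma is
    the recovery rate per day and r < 1 is the reproduction number.
    """
    if not 0.0 <= r < 1.0:
        raise ValueError("r must satisfy 0 <= r < 1 for infections to decline")
    n_star = threshold_size(r) if threshold is None else threshold
    if i0 <= n_star:
        return 0.0  # already in the stochastic regime
    return math.log(i0 / n_star) / (gamma * (1.0 - r))


# Hypothetical inputs: one million infections, R = 0.5,
# and a mean infectious period of 7 days (gamma = 1/7 per day).
days = deterministic_phase_days(1_000_000, 0.5, 1.0 / 7.0)
print(f"Deterministic phase: about {days:.0f} days")
```

Because the initial number of infections enters only through a logarithm, the estimate is far more sensitive to `r` and `gamma` than to the starting case count.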

In practical terms, the main result of this theoretical work is to show that for sufficiently strong lockdowns (where, on average, only one of every two infected individuals goes on to infect another person, R=0.5), the distribution of extinction times is narrow enough that the COVID pandemic virus would have gone extinct in a matter of months, or at most a year.
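To get a feel for how narrow such a distribution can be, the sketch below uses the standard closed-form extinction probability of a linear birth-death process, one of the simplest continuous-time branching processes. This is a stand-in chosen for illustration and may differ from the exact formulation in the paper. The initial number of infections and the recovery rate are hypothetical values, and each infection is assumed to start an independent lineage.

```python
import math


def extinction_cdf(t, n0, r, gamma):
    """P(every one of n0 independent lineages is extinct by time t).

    Linear birth-death process: each infected individual recovers at
    rate gamma and infects others at rate r * gamma, with r < 1.
    """
    if t <= 0.0:
        return 0.0
    decay = math.exp(-gamma * (1.0 - r) * t)
    # Probability that a single lineage is still alive at time t.
    survive = decay * (1.0 - r) / (1.0 - r * decay)
    # All n0 lineages must have died out; log1p keeps precision
    # when the survival probability is tiny.
    return math.exp(n0 * math.log1p(-survive))


def extinction_quantile(p, n0, r, gamma):
    """Time by which extinction has occurred with probability p (bisection)."""
    lo, hi = 0.0, 1.0
    while extinction_cdf(hi, n0, r, gamma) < p:
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if extinction_cdf(mid, n0, r, gamma) < p:
            lo = mid
        else:
            hi = mid
    return hi


# Hypothetical inputs: one million infections, R = 0.5,
# mean infectious period of 7 days (gamma = 1/7 per day).
n0, r, gamma = 1_000_000, 0.5, 1.0 / 7.0
for p in (0.05, 0.5, 0.95):
    print(f"{int(p * 100)}% quantile: {extinction_quantile(p, n0, r, gamma):.0f} days")
```

Comparing the 5% and 95% quantiles with the median gives a direct measure of the spread in extinction times under these assumed parameters.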

How realistic is this counterfactual scenario of eliminating SARS-CoV-2 within a year?

Leaving politics and the likelihood of social acceptance aside for the moment, if a sufficiently strong lockdown could have been maintained for a period of roughly six months across the globe, then I am confident that the virus could have been reduced to very low levels, or even made extinct.

The question then is: is this a stable situation? From the perspective of a single nation, if the rest of the world still has infections, then that nation either needs to maintain its lockdown or be prepared to re-impose it if there are new imported cases. From a global perspective, a COVID-free world should be a stable state, unless an animal reservoir of infections causes re-infections in humans.

As for the practical success of such a strategy, that depends on politics and the willingness of individuals to remain in lockdown. Clearly, this is not in the model. One thing I do discuss, though, is that this strategy becomes far more difficult once more infectious variants of SARS-CoV-2 evolve. However, the problem I was working on before this one (which I eventually published in PNAS) concerned the probability of evolutionary rescue or resistance, and that work suggests that the rate at which new COVID variants evolve falls significantly when there are fewer infections. So an elimination strategy should also be more robust against the evolution of new variants.

What lessons would you like experts (and the public) to take from this work when considering future pandemic scenarios?

I’d like them to conclude that pandemics with similar properties are, in principle, controllable to small levels of infection – or complete extinction – on timescales of months, not years, and that controlling them minimizes the chance of new variants evolving. So, although the question of the political and social will to enact such an elimination strategy is not in the scope of the paper, I think if epidemiologists, policy experts, politicians and the public understood that lockdowns have a finite time horizon, then it is more likely that this strategy could be adopted in the future.

I should also say that my work makes no comment on the social harms of lockdowns, which shouldn’t be minimized and would need to be weighed against the potential benefits.

What do you plan to do next?

I think the most interesting next avenue will be to develop theory that lets us better understand the stability of the extinct state at the national and global level, under various assumptions about declining infections in other countries that adopted different strategies and the role of an animal reservoir.

It would also be interesting to explore the role of “superspreaders”, or infected individuals who infect many other people. There’s evidence that many infections spread primarily through relatively few superspreaders, and heuristic arguments suggest that taking this into account would decrease the time to extinction compared to the estimates in this paper.

I’ve also had a long-term interest in understanding the evolution of viruses through the lens of what are known as genotype-phenotype maps. These describe the non-trivial and often redundant mapping from genetic sequences to function, and within this picture the role of stochasticity in evolution can be described by statistical physics analogies. For the evolution of the antibodies that help us avoid virus antigens, this would be a driven system, and theories of non-equilibrium statistical physics could play a role in answering questions about the evolution of new variants.

The post Staying the course with lockdowns could end future pandemics in months appeared first on Physics World.