The forgotten pioneers of computational physics

When you look back at the early days of computing, some familiar names pop up, including John von Neumann, Nicholas Metropolis and Richard Feynman. But they were not lone pioneers – they were part of a much larger group, using mechanical and then electronic computers to do calculations that had never been possible before.

These people, many of whom were women, were the first scientific programmers and computational scientists. Skilled in the complicated operation of early computing devices, they often had degrees in maths or science, and were an integral part of research efforts. And yet, their fundamental contributions are mostly forgotten.

This was in part because of their gender – it was an age when sexism was rife, and it was standard for women to be fired from their job after getting married. However, there is another important factor that is often overlooked, even in today’s scientific community – people in technical roles are often underappreciated and underacknowledged, even though they are the ones who make research possible.

Human and mechanical computers

Originally, a “computer” was a human being who did calculations by hand or with the help of a mechanical calculator. It is thought that the world’s first computational lab was set up in 1937 at Columbia University. But it wasn’t until the Second World War that the demand for computation really exploded, driven by the need for artillery calculations, new technologies and code breaking.

In the US, the development of the atomic bomb during the Manhattan Project (established in 1943) required huge computational efforts, so it wasn’t long before the New Mexico site had a hand-computing group. Called the T-5 group of the Theoretical Division, it initially consisted of about 20 people. Most were women, including the spouses of other scientific staff. Among them were Mary Frankel, a mathematician married to physicist Stan Frankel; mathematician Augusta “Mici” Teller, who was married to Edward Teller, the “father of the hydrogen bomb”; and Jean Bacher, the wife of physicist Robert Bacher.

As the war continued, the T-5 group expanded to include civilian recruits from the nearby towns and members of the Women’s Army Corps. Its staff worked around the clock, using printed mathematical tables and desk calculators in four-hour shifts – but that was not enough to keep up with the computational needs for bomb development. In the early spring of 1944, IBM punch-card machines were brought in to supplement the human power. They became so effective that the machines were soon being used for all large calculations, 24 hours a day, in three shifts.

The computational group continued to grow, and among the new recruits were Naomi Livesay and Eleonor Ewing. Livesay held an advanced degree in mathematics and had done a course in operating and programming IBM electric calculating machines, making her an ideal candidate for the T-5 division. She in turn recruited Ewing, a fellow mathematician who was a former colleague. The two young women supervised the running of the IBM machines around the clock.

The frantic pace of the T-5 group continued until the end of the war in September 1945. The development of the atomic bomb required an immense computational effort, which was made possible through hand and punch-card calculations.

Electronic computers

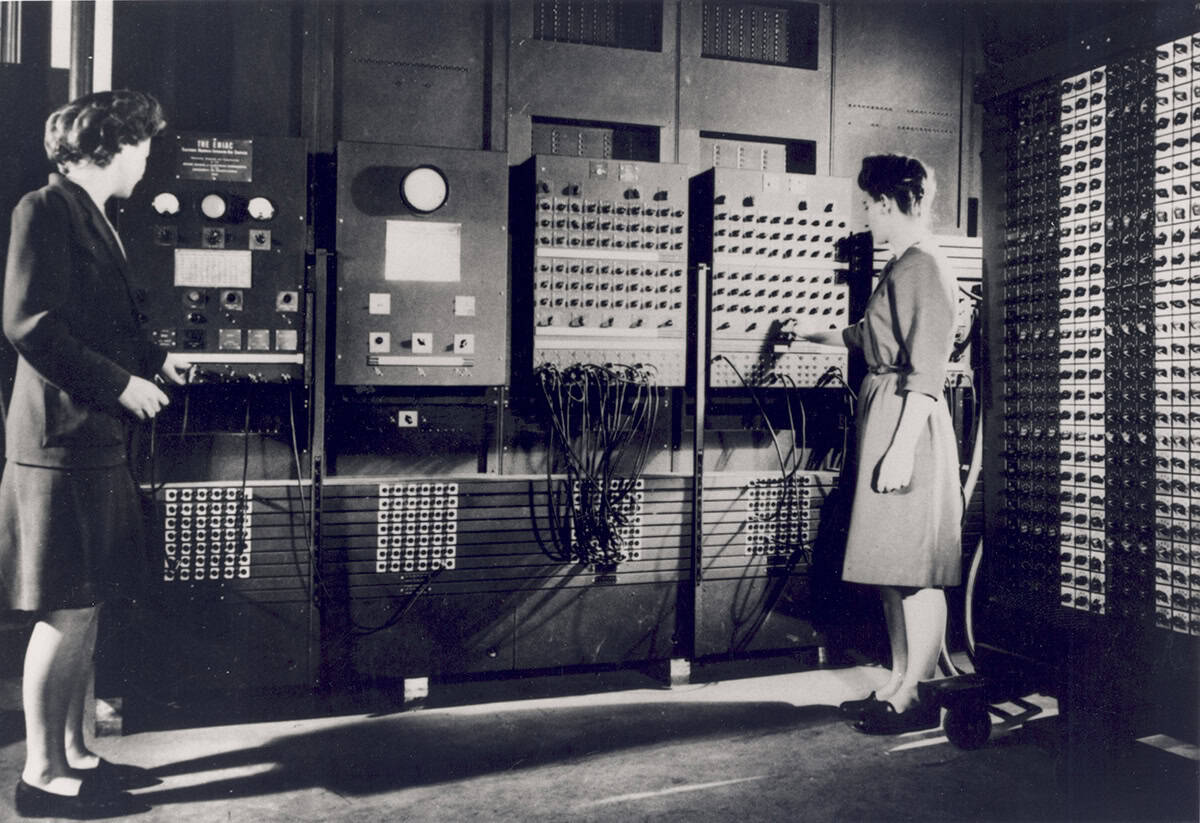

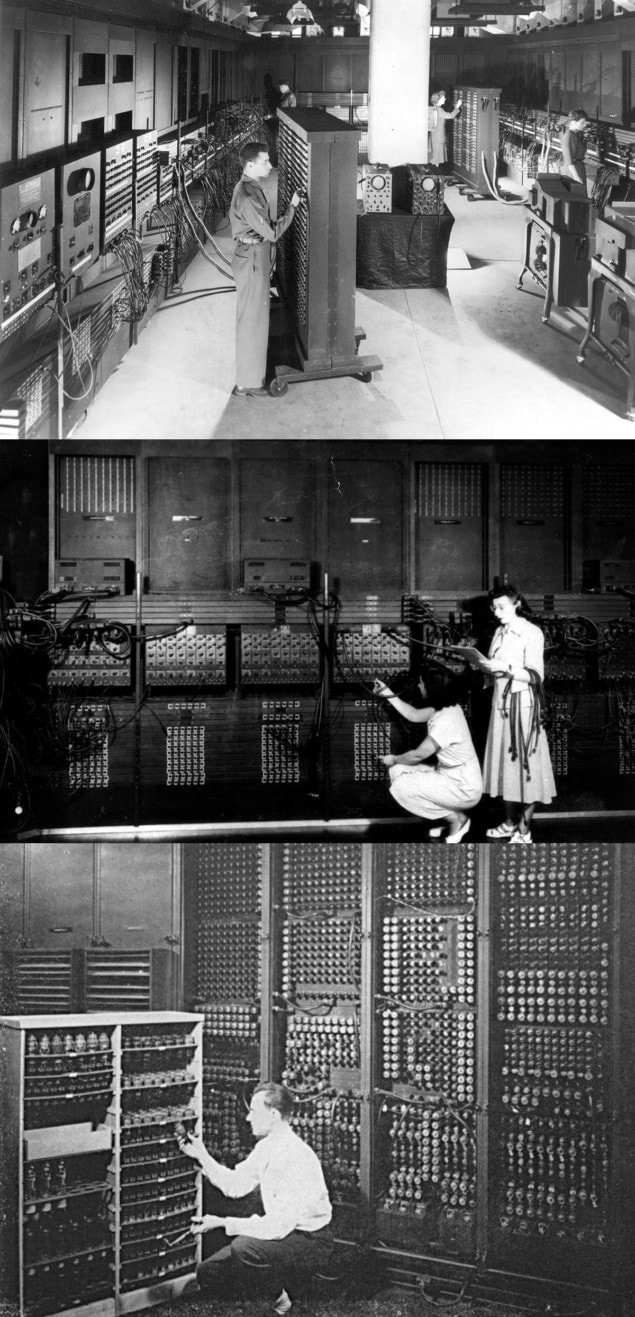

Shortly after the war ended, the first fully electronic, general-purpose computer – the Electronic Numerical Integrator and Computer (ENIAC) – became operational at the University of Pennsylvania, following two years of development. The project had been led by physicist John Mauchly and electrical engineer J Presper Eckert. The machine was operated and coded by six women – mathematicians Betty Jean Jennings (later Bartik); Kathleen, or Kay, McNulty (later Mauchly, then Antonelli); Frances Bilas (Spence); Marlyn Wescoff (Meltzer) and Ruth Lichterman (Teitelbaum); as well as Betty Snyder (Holberton) who had studied journalism.

Polymath John von Neumann also got involved when looking for more computing power for projects at the new Los Alamos Laboratory, established in New Mexico in 1947. In fact, although originally designed to solve ballistic trajectory problems, the first problem to be run on the ENIAC was “the Los Alamos problem” – a thermonuclear feasibility calculation for Teller’s group studying the H-bomb.

Like in the Manhattan Project, several husband-and-wife teams worked on the ENIAC, the most famous being von Neumann and his wife Klara Dán, and mathematicians Adele and Herman Goldstine. Dán von Neumann in particular worked closely with Nicholas Metropolis, who alongside mathematician Stanislaw Ulam had coined the term Monte Carlo to describe numerical methods based on random sampling. Indeed, between 1948 and 1949 Dán von Neumann and Metropolis ran the first series of Monte Carlo simulations on an electronic computer.

Work began on a new machine at Los Alamos in 1948 – the Mathematical Analyzer Numerical Integrator and Automatic Computer (MANIAC) – which ran its first large-scale hydrodynamic calculation in March 1952. Many of its users were physicists, and its operators and coders included mathematicians Mary Tsingou (later Tsingou-Menzel), Marjorie Jones (Devaney) and Elaine Felix (Alei); plus Verna Ellingson (later Gardiner) and Lois Cook (Leurgans).

Early algorithms

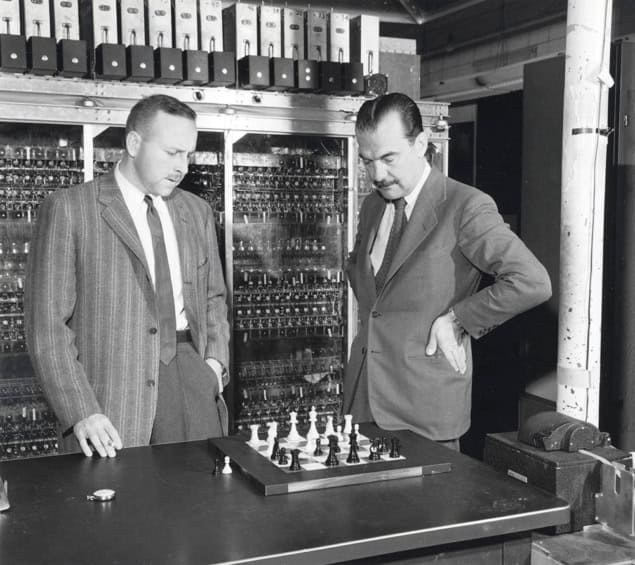

The Los Alamos scientists tried all sorts of problems on the MANIAC, including a chess-playing program – the first documented case of a machine defeating a human at the game. However, two of these projects stand out because they had profound implications for computational science.

In 1953 the Tellers, together with Metropolis and physicists Arianna and Marshall Rosenbluth, published the seminal article “Equation of state calculations by fast computing machines” (J. Chem. Phys. 21 1087). The work introduced the ideas behind the “Metropolis (later renamed Metropolis–Hastings) algorithm”, which is a Monte Carlo method that is based on the concept of “importance sampling”. (While Metropolis was involved in the development of Monte Carlo methods, it appears that he did not contribute directly to the article, but provided access to the MANIAC nightshift.) This is the progenitor of the Markov Chain Monte Carlo methods, which are widely used today throughout science and engineering.

Marshall later recalled how the research came about when he and Arianna had proposed using the MANIAC to study how solids melt (AIP Conf. Proc. 690 22).

Edward Teller meanwhile had the idea of using statistical mechanics and taking ensemble averages instead of following detailed kinematics for each individual disk, and Mici helped with programming during the initial stages. However, the Rosenbluths did most of the work, with Arianna translating and programming the concepts into an algorithm.

The 1953 article is remarkable, not only because it led to the Metropolis algorithm, but also as one of the earliest examples of using a digital computer to simulate a physical system. The main innovation of this work was in developing “importance sampling”. Instead of sampling configurations purely at random, the method samples with a bias towards physically important configurations, which contribute more to the integral.
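To make the idea of importance sampling concrete, here is a minimal sketch of a Metropolis-style sampler in Python. It is not the 1953 calculation, which dealt with a 2D system of disks. The toy system (a single coordinate in a harmonic potential), the function name `metropolis_average` and all parameter values are assumptions chosen purely for illustration.

```python
import math
import random


def metropolis_average(energy, observable, n_steps=200_000, step=1.0, kT=1.0, seed=0):
    """Estimate the thermal average of `observable` with Metropolis sampling.

    Illustrative toy only: one coordinate, symmetric uniform trial moves.
    """
    rng = random.Random(seed)
    x = 0.0
    e = energy(x)
    total = 0.0
    for _ in range(n_steps):
        # Propose a small random move.
        x_new = x + rng.uniform(-step, step)
        e_new = energy(x_new)
        # Always accept downhill moves; accept uphill moves with
        # probability exp(-dE / kT). This biases the walk towards
        # configurations that carry most of the Boltzmann weight.
        if e_new <= e or rng.random() < math.exp(-(e_new - e) / kT):
            x, e = x_new, e_new
        # Every step counts equally, whether or not the move was accepted.
        total += observable(x)
    return total / n_steps


# Harmonic potential E(x) = x^2 / 2 at kT = 1: the exact value of <x^2> is 1.
mean_x2 = metropolis_average(lambda x: 0.5 * x * x, lambda x: x * x)
print(mean_x2)  # should be close to 1
```

Because configurations are visited in proportion to their Boltzmann weight, the average is a plain mean over the visited states rather than a weighted sum over mostly irrelevant ones.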

Furthermore, the article also introduced another computational trick, known as “periodic boundary conditions” (PBCs): a set of conditions which are often used to approximate an infinitely large system by using a small part known as a “unit cell”. Both importance sampling and PBCs went on to become workhorse methods in computational physics.
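Periodic boundary conditions can be sketched in a few lines of Python. The example below is a one-dimensional illustration under assumed names (`wrap`, `minimum_image`) and an assumed box length. It is not taken from the 1953 article.

```python
def wrap(x, box):
    """Map a coordinate back into the unit cell [0, box)."""
    return x % box


def minimum_image(dx, box):
    """Shortest separation between two particles, counting periodic copies."""
    return dx - box * round(dx / box)


box = 10.0
# A particle leaving through one face re-enters through the opposite face.
print(wrap(10.5, box))   # 0.5
print(wrap(-0.5, box))   # 9.5
# Particles at 0.5 and 9.5 are only 1.0 apart across the boundary.
print(minimum_image(9.5 - 0.5, box))  # -1.0
```

With these two rules a small cell behaves as if it were surrounded by endless copies of itself, so no particle ever sits next to an artificial wall.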

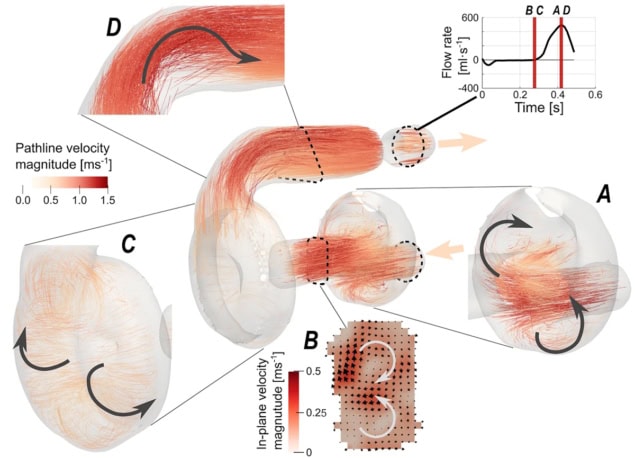

In the summer of 1953, physicist Enrico Fermi, Ulam, Tsingou and physicist John Pasta also made a significant breakthrough using the MANIAC. They ran a “numerical experiment” as part of a series meant to illustrate possible uses of electronic computers in studying various physical phenomena.

The team modelled a 1D chain of oscillators with a small nonlinearity to see if it would behave as hypothesized, reaching an equilibrium with the energy redistributed equally across the modes (doi.org/10.2172/4376203). However, their work showed that this was not guaranteed for small perturbations – a non-trivial and non-intuitive observation that would not have been apparent without the simulations. It is the first example of a physics discovery made not by theoretical or experimental means, but through a computational approach. It would later lead to the discovery of solitons and integrable models, the development of chaos theory, and a deeper understanding of ergodic limits.
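A numerical experiment of this kind can be sketched in Python as follows. This is a minimal illustration, not a reconstruction of the MANIAC code. The quadratic form of the nonlinearity, the velocity Verlet integrator, the fixed ends, the unit masses and every parameter value (`n`, `alpha`, `dt`, `n_steps`) are assumptions made for the example.

```python
import math


def accelerations(q, alpha):
    """Forces on a chain with fixed ends, linear springs plus a quadratic term."""
    padded = [0.0] + q + [0.0]
    acc = []
    for i in range(1, len(padded) - 1):
        right = padded[i + 1] - padded[i]
        left = padded[i] - padded[i - 1]
        acc.append((right - left) + alpha * (right**2 - left**2))
    return acc


def total_energy(q, p, alpha):
    """Kinetic plus potential energy (unit masses and spring constants)."""
    padded = [0.0] + q + [0.0]
    potential = 0.0
    for i in range(len(padded) - 1):
        d = padded[i + 1] - padded[i]
        potential += 0.5 * d**2 + alpha / 3.0 * d**3
    return 0.5 * sum(v * v for v in p) + potential


def mode_energies(q, p, n_modes):
    """Harmonic energy in each of the first `n_modes` normal modes."""
    n = len(q)
    norm = math.sqrt(2.0 / (n + 1))
    energies = []
    for k in range(1, n_modes + 1):
        s = [math.sin(math.pi * k * i / (n + 1)) for i in range(1, n + 1)]
        qk = norm * sum(a * b for a, b in zip(q, s))
        pk = norm * sum(a * b for a, b in zip(p, s))
        omega = 2.0 * math.sin(math.pi * k / (2 * (n + 1)))
        energies.append(0.5 * (pk**2 + (omega * qk) ** 2))
    return energies


def initial_state(n, amplitude):
    """Put all the energy into the lowest mode, with the chain at rest."""
    q = [amplitude * math.sin(math.pi * i / (n + 1)) for i in range(1, n + 1)]
    return q, [0.0] * n


def run_chain(q, p, alpha, dt, n_steps):
    """Advance the chain with the velocity Verlet scheme."""
    acc = accelerations(q, alpha)
    for _ in range(n_steps):
        p = [pi + 0.5 * dt * ai for pi, ai in zip(p, acc)]
        q = [qi + dt * pi for qi, pi in zip(q, p)]
        acc = accelerations(q, alpha)
        p = [pi + 0.5 * dt * ai for pi, ai in zip(p, acc)]
    return q, p


q0, p0 = initial_state(32, 1.0)
q1, p1 = run_chain(q0, p0, alpha=0.25, dt=0.05, n_steps=2000)
print(mode_energies(q0, p0, 4))  # energy starts in the first mode only
print(mode_energies(q1, p1, 4))  # how it is shared out later on
```

Printing the mode energies at intervals over a much longer run is the kind of diagnostic that lets one see whether the energy spreads evenly over the modes, as had been expected, or not.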

Although the paper says the work was done by all four scientists, Tsingou’s role was forgotten, and the results became known as the Fermi–Pasta–Ulam problem. It was not until 2008, when French physicist Thierry Dauxois advocated for giving her credit in a Physics Today article, that Tsingou’s contribution was properly acknowledged. Today the finding is called the Fermi–Pasta–Ulam–Tsingou problem.

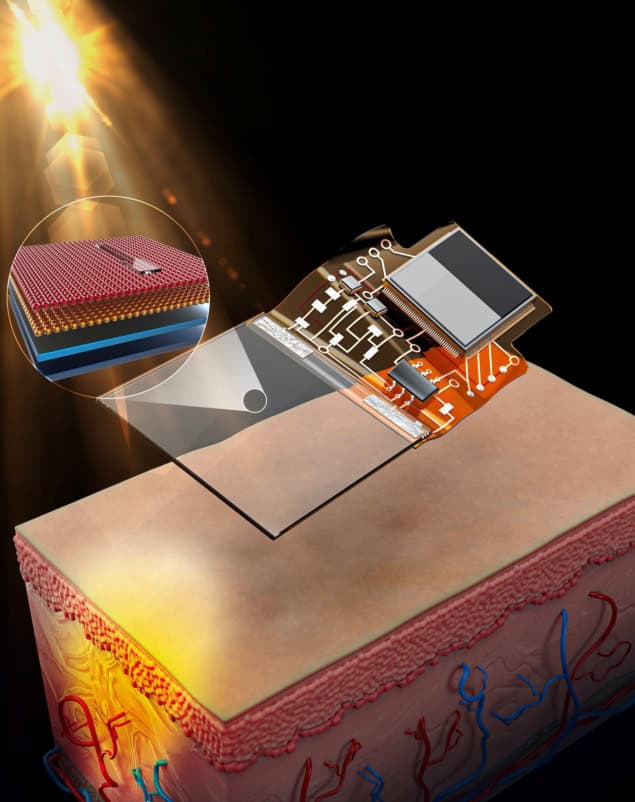

The year 1953 also saw IBM’s first commercial, fully electronic computer – an IBM 701 – arrive at Los Alamos. Soon the theoretical division had two of these machines, which, alongside the MANIAC, gave the scientists unprecedented computing power. Among those to take advantage of the new devices were Martha Evans (about whom very little is known) and theoretical physicist Francis Harlow, who began to tackle the largely unexplored subject of computational fluid dynamics.

The idea was to use a mesh of cells through which the fluid, represented as particles, would move. This computational method made it possible to solve complex hydrodynamics problems (involving large distortions and compressions of the fluid) in 2D and 3D. Indeed, the method proved so effective that it became a standard tool in plasma physics, where it has been applied to every conceivable topic from astrophysical plasmas to fusion energy.
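The bookkeeping behind "particles moving through a mesh of cells" can be illustrated with a short Python sketch. It shows only two ingredients, depositing particle mass onto cells and moving particles with the velocity of the cell they sit in. It is not the full method from the Los Alamos report. The 1D geometry, the periodic wrap-around and the names `deposit_density` and `move_particles` are assumptions for illustration.

```python
def cell_index(x, n_cells, length):
    """Index of the cell containing position x, for x in [0, length)."""
    return min(int(x / (length / n_cells)), n_cells - 1)


def deposit_density(positions, masses, n_cells, length):
    """Sum particle masses per cell and divide by the cell width."""
    cell_width = length / n_cells
    cell_mass = [0.0] * n_cells
    for x, m in zip(positions, masses):
        cell_mass[cell_index(x, n_cells, length)] += m
    return [m / cell_width for m in cell_mass]


def move_particles(positions, cell_velocity, dt, length):
    """Move each particle with the velocity stored on its cell.

    Particles leaving one end re-enter at the other (an assumed
    periodic wrap, chosen to keep the example short).
    """
    n_cells = len(cell_velocity)
    moved = []
    for x in positions:
        v = cell_velocity[cell_index(x, n_cells, length)]
        moved.append((x + v * dt) % length)
    return moved


positions = [0.1, 0.2, 0.9]
masses = [1.0, 1.0, 2.0]
print(deposit_density(positions, masses, n_cells=2, length=1.0))

# The left cell flows to the right, the right cell is at rest.
positions = move_particles(positions, cell_velocity=[1.0, 0.0], dt=0.5, length=1.0)
print(deposit_density(positions, masses, n_cells=2, length=1.0))
```

Because the fluid is carried by particles rather than by the mesh itself, the cells never tangle however much the material is distorted or compressed.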

The resulting internal Los Alamos report – The Particle-in-cell Method for Hydrodynamic Calculations, published in 1955 – showed Evans as first author and acknowledged eight people (including Evans) for the machine calculations. However, while Harlow is remembered as one of the pioneers of computational fluid dynamics, Evans was forgotten.

A clear-cut division of labour?

In an age when women had very limited access to the frontlines of research, the computational war effort brought in many female researchers and technical staff. As their contributions come more into the light, it becomes clearer that their role was not a simple clerical one.

There is a view that the coders’ work was “the vital link between the physicist’s concepts (about which the coders more often than not didn’t have a clue) and their translation into a set of instructions that the computer was able to perform, in a language about which, more often than not, the physicists didn’t have a clue either”, as physicists Giovanni Battimelli and Giovanni Ciccotti wrote in 2018 (Eur. Phys. J. H 43 303). But the examples we have seen show that some of the coders had a solid grasp of the physics, and some of the physicists had a good understanding of the machine operation. Rather than a skilled–non-skilled/men–women separation, the division of labour was blurred. Indeed, it was more of an effective collaboration between physicists, mathematicians and engineers.

Even in the early days of the T-5 division before electronic computers existed, Livesay and Ewing, for example, attended maths lectures from von Neumann, and introduced him to punch-card operations. As has been documented in books including Their Day in the Sun by Ruth Howes and Caroline Herzenberg, they also took part in the weekly colloquia held by J Robert Oppenheimer and other project leaders. This shows they should not be dismissed as mere human calculators and machine operators who supposedly “didn’t have a clue” about physics.

Verna Ellingson (Gardiner) is another forgotten coder who worked at Los Alamos. While little information about her can be found, she appears as the last author on a 1955 paper (Science 122 465) written with Metropolis and physicist Joseph Hoffman – “Study of tumor cell populations by Monte Carlo methods”. The next year she was first author of “On certain sequences of integers defined by sieves” with mathematical physicist Roger Lazarus, Metropolis and Ulam (Mathematics Magazine 29 117). She also worked with physicist George Gamow on attempts to discover the code for DNA selection of amino acids, which just shows the breadth of projects she was involved in.

Evans not only worked with Harlow but took part in a 1959 conference on self-organizing systems, where she queried AI pioneer Frank Rosenblatt on his ideas about human and machine learning. Her attendance at such a meeting, in an age when women were not common attendees, implies we should not view her as “just a coder”.

With their many and wide-ranging contributions, it is more than likely that Evans, Gardiner, Tsingou and many others were full-fledged researchers, and were perhaps even the first computational scientists. “These women were doing work that modern computational physicists in the [Los Alamos] lab’s XCP [Weapons Computational Physics] Division do,” says Nicholas Lewis, a historian at Los Alamos. “They needed a deep understanding of both the physics being studied, and of how to map the problem to the particular architecture of the machine being used.”

An evolving identity

In the 1950s there was no computational physics or computer science, so it’s unsurprising that the practitioners of these disciplines went by different names, and their identity has evolved over the decades since.

1930s–1940s

Originally a “computer” was a person doing calculations by hand or with the help of a mechanical calculator.

Late 1940s – early 1950s

A “coder” was a person who translated mathematical concepts into a set of instructions in machine language. John von Neumann and Herman Goldstine distinguished between “coding” and “planning”, with the former being the lower-level work of turning flow diagrams into machine language (and doing the physical configuration) while the latter involved the mathematical analysis of the problem.

Meanwhile, an “operator” would physically handle the computer (replacing punch cards, doing the rewiring, etc). In the late-1940s coders were also operators.

As historians note in the book ENIAC in Action, this was an age when “It was hard to devise the mathematical treatment without a good knowledge of the processes of mechanical computation…It was also hard to operate the ENIAC without understanding something about the mathematical task it was undertaking.”

For the ENIAC a “programmer” was not a person but “a unit combining different sequences in a coherent computation”. The term would later shift and eventually overlap with the meaning of coder as a person’s job.

1960s

Computer scientist Margaret Hamilton, who led the development of the on-board flight software for NASA’s Apollo program, coined the term “software engineering” to distinguish the practice of designing, developing, testing and maintaining software from the engineering tasks associated with the hardware.

1980s – early 2000s

Using the term “programmer” for someone who coded computers peaked in popularity in the 1980s, but by the 2000s it had been replaced by other job titles such as various flavours of “developer” or “software architect”.

Early 2010s

A “research software engineer” is a person who combines professional software engineering expertise with an intimate understanding of scientific research.

Overlooked then, overlooked now

Credited or not, these pioneering women and their contributions have been mostly forgotten, and only in recent decades have their roles come to light again. But why were they obscured by history in the first place?

Secrecy and sexism seem to be the main factors at play. For example, Livesay was not allowed to pursue a PhD in mathematics because she was a woman, and in the cases of the many married couples, the team contributions were attributed exclusively to the husband. The existence of the Manhattan Project was publicly announced in 1945, but documents that contain certain nuclear-weapons-related information remain classified today. Because these are likely to remain secret, we will never know the full extent of these pioneers’ contributions.

But another often overlooked reason is the widespread underappreciation of the key role of computational scientists and research software engineers, a term that was only coined just over a decade ago. Even today, these non-traditional research roles end up being undervalued. A 2022 survey by the UK Software Sustainability Institute, for example, showed that only 59% of research software engineers were named as authors, with barely a quarter (24%) mentioned in the acknowledgements or main text, while a sixth (16%) were not mentioned at all.

The separation between those who understand the physics and those who write the code and understand and operate the hardware goes back to the early days of computing (see box above), but it wasn’t entirely accurate even then. People who implement complex scientific computations are not just coders or skilled operators of supercomputers, but truly multidisciplinary scientists who have a deep understanding of the scientific problems, mathematics, computational methods and hardware.

Such people – whatever their gender – play a key role in advancing science and yet remain the unsung heroes of the discoveries their work enables. Perhaps what this story of the forgotten pioneers of computational physics tells us is that some views rooted in the 1950s are still influencing us today. It’s high time we moved on.

The post The forgotten pioneers of computational physics appeared first on Physics World.