Shining laser light on a material produces subtle changes in its magnetic properties

Researchers in Switzerland have found an unexpected new use for an optical technique commonly used in silicon chip manufacturing. By shining a focused laser beam onto a sample of material, a team at the Paul Scherrer Institute (PSI) and ETH Zürich showed that it was possible to change the material’s magnetic properties on a scale of nanometres – essentially “writing” these magnetic properties into the sample in the same way as photolithography etches patterns onto wafers. The discovery could have applications for novel forms of computer memory as well as fundamental research.

In standard photolithography – the workhorse of the modern chip manufacturing industry – a light beam passes through a transmission mask and projects an image of the mask’s light-absorption pattern onto a (usually silicon) wafer. The wafer itself is covered with a photosensitive polymer called a resist. Changing the intensity of the light leads to different exposure levels in the resist-covered material, making it possible to create finely detailed structures.

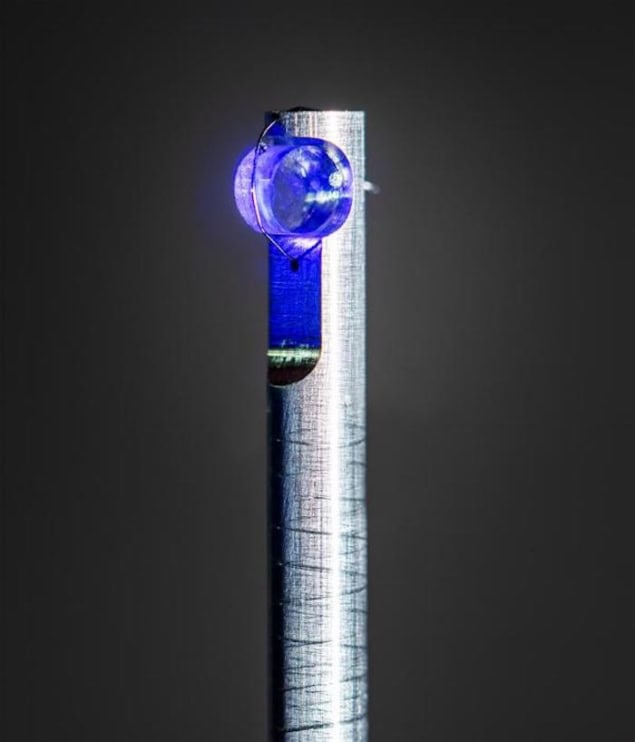

In the new work, Laura Heyderman and colleagues in PSI-ETH Zürich’s joint Mesoscopic Systems group began by placing a thin film of a magnetic material in a standard photolithography machine, but without a photoresist. They then scanned a focused laser beam with a wavelength of 405 nm over the surface of the sample while modulating the beam to deliver varying intensities of light. This process is known as direct write laser annealing (DWLA), and it makes it possible to heat areas of the sample that measure just 150 nm across.

In each heated area, thermal energy from the laser is deposited at the surface and partially absorbed by the film down to a depth of around 100 nm. The remainder dissipates through a silicon substrate coated in 300-nm-thick silicon oxide. However, the thermal conductivity of this substrate is low, which maximizes the temperature increase in the film for a given laser fluence. The researchers also sought to keep the temperature increase as uniform as possible by using thin-film heterostructures with a total thickness of less than 20 nm.

Crystallization and interdiffusion effects

Members of the PSI-ETH Zürich team applied this technique to several technologically important magnetic thin-film systems, including ferromagnetic CoFeB/MgO, ferrimagnetic CoGd and synthetic antiferromagnets composed of Co/Cr, Co/Ta or CoFeB/Pt/Ru. They found that DWLA induces both crystallization and interdiffusion effects in these materials. During crystallization, the orientation of the sample’s magnetic moments gradually changes, while interdiffusion alters the magnetic exchange coupling between the layers of the structures.

The researchers say that both phenomena could have interesting applications. The magnetized regions in the structures could be used in data storage, for example, with the direction of the magnetization (“up” or “down”) corresponding to the “1” or “0” of a bit of data. In conventional data-storage systems, these bits are switched with a magnetic field, but team member Jeffrey Brock explains that the new technique allows electric currents to be used instead. This is advantageous because electric currents are easier to produce than magnetic fields, while data-storage devices switched with electricity are both faster and capable of packing more data into a given space.

Team member Lauren Riddiford says the new work builds on previous studies by members of the same group, which showed it was possible to make devices suitable for computer memory by locally patterning magnetic properties. “The trick we used here was to locally oxidize the topmost layer in a magnetic multilayer,” she explains. “However, we found that this works only in a few systems and only produces abrupt changes in the material properties. We were therefore brainstorming possible alternative methods to create gradual, smooth gradients in material properties, which would open possibilities to even more exciting applications, and realized that we could perform local annealing with a laser originally made for patterning polymer resist layers for photolithography.”

Riddiford adds that the method proved so fast and simple to implement that the team’s main challenge was to investigate all the material changes it produced. Physical characterization methods for ultrathin films can be slow and difficult, she tells Physics World.

The researchers, who describe their technique in Nature Communications, now hope to use it to develop structures that are compatible with current chip-manufacturing technology. “Beyond magnetism, our approach can be used to locally modify the properties of any material that undergoes changes when heated, so we hope researchers using thin films for many different devices – electronic, superconducting, optical, microfluidic and so on – could use this technique to design desired functionalities,” Riddiford says. “We are looking forward to seeing where this method will be implemented next, whether in magnetic or non-magnetic materials, and what kind of applications it might bring.”

The post Shining laser light on a material produces subtle changes in its magnetic properties appeared first on Physics World.