Quantum fluid instability produces eccentric skyrmions

Physicists at Osaka Metropolitan University in Japan and the Korea Advanced Institute of Science and Technology (KAIST) claim to have observed the quantum counterpart of the classic Kelvin-Helmholtz instability (KHI), which is the most basic instability in fluids. The effect, seen in a quantum gas of ⁷Li atoms, produces a new type of exotic vortex pattern called an eccentric fractional skyrmion. The finding not only advances our understanding of complex topological quantum systems, it could also help in the development of next-generation memory and storage devices.

Topological defects occur when a system rapidly transitions from a disordered to an ordered phase. These defects, which can occur in a wide range of systems, from liquid crystals and atomic gases to the rapidly cooling early universe, can produce excitations such as solitons, vortices and skyrmions.

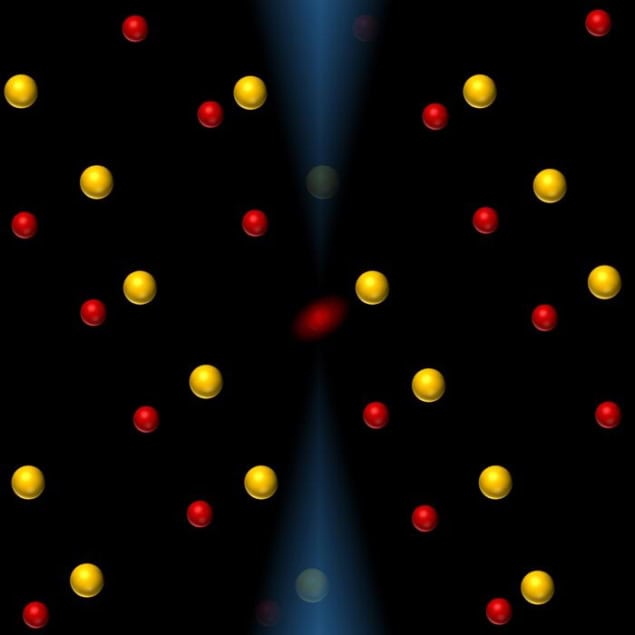

Skyrmions, first discovered in magnetic materials, are swirling vortex-like spin structures that extend across a few nanometres in a material. They can be likened to 2D knots in which the magnetic moments rotate through a full 360° within a plane.

Eccentric fractional skyrmions contain singularities

Skyrmions are topologically stable, which makes them robust to external perturbations, and are much smaller than the magnetic domains used to encode data in today’s disk drives. That makes them ideal building blocks for future data storage technologies such as “racetrack” memories. Eccentric fractional skyrmions (EFSs), which had only been predicted in theory until now, have a crescent-like shape and contain singularities – points at which the usual spin structure breaks down, creating sharp distortions as it becomes asymmetric.

“To me, the large crescent moon in the upper right corner of Van Gogh’s ‘The Starry Night’ also looks exactly like an EFS,” says Hiromitsu Takeuchi at Osaka, who co-led this new study with Jae-Yoon Choi of KAIST. “EFSs carry half the elementary charge, which means they do not fit into traditional classifications of topological defects.”
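To make the idea of a fractional charge concrete, the sketch below numerically integrates the standard skyrmion number, Q = (1/4π) ∫ n · (∂ₓn × ∂ᵧn) dx dy, for two model spin textures. It assumes that the "charge" in question is this topological charge. The tanh profile, the function name and all parameters are illustrative choices, and the half-charge texture used here is a plain symmetric half-skyrmion (a meron), not the eccentric, singular structure reported in the study.

```python
import numpy as np


def topological_charge(theta_max, radius=1.0, half_width=8.0, n_points=401):
    """Numerically integrate the skyrmion number of a model spin texture.

    theta_max is the polar angle the spins reach far from the centre:
    pi gives a full skyrmion, pi/2 a half-skyrmion (meron).
    The tanh profile and every parameter here are illustrative assumptions.
    """
    x = np.linspace(-half_width, half_width, n_points)
    dx = x[1] - x[0]
    X, Y = np.meshgrid(x, x, indexing="ij")  # axis 0 is x, axis 1 is y

    r = np.hypot(X, Y)
    phi = np.arctan2(Y, X)                   # in-plane angle of each spin
    theta = theta_max * np.tanh(r / radius)  # tilt away from "up" at the centre

    # Unit spin vector n = (sin(theta) cos(phi), sin(theta) sin(phi), cos(theta))
    n = np.stack(
        [np.sin(theta) * np.cos(phi),
         np.sin(theta) * np.sin(phi),
         np.cos(theta)],
        axis=-1,
    )

    # Finite-difference derivatives of the spin field along x and y
    dn_dx = np.gradient(n, dx, axis=0)
    dn_dy = np.gradient(n, dx, axis=1)

    # Charge density n . (dn/dx x dn/dy) / (4 pi), summed over the grid
    density = np.sum(n * np.cross(dn_dx, dn_dy), axis=-1) / (4.0 * np.pi)
    return float(density.sum() * dx * dx)


if __name__ == "__main__":
    print("full skyrmion:", topological_charge(np.pi))      # close to 1
    print("half skyrmion:", topological_charge(np.pi / 2))  # close to 0.5
```

In the full skyrmion the spins sweep from "up" at the centre to "down" at the edge and cover the whole sphere of directions once. In the half-skyrmion they stop in the plane and cover only one hemisphere, which is where the factor of one half comes from. The overall sign of Q depends on convention.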

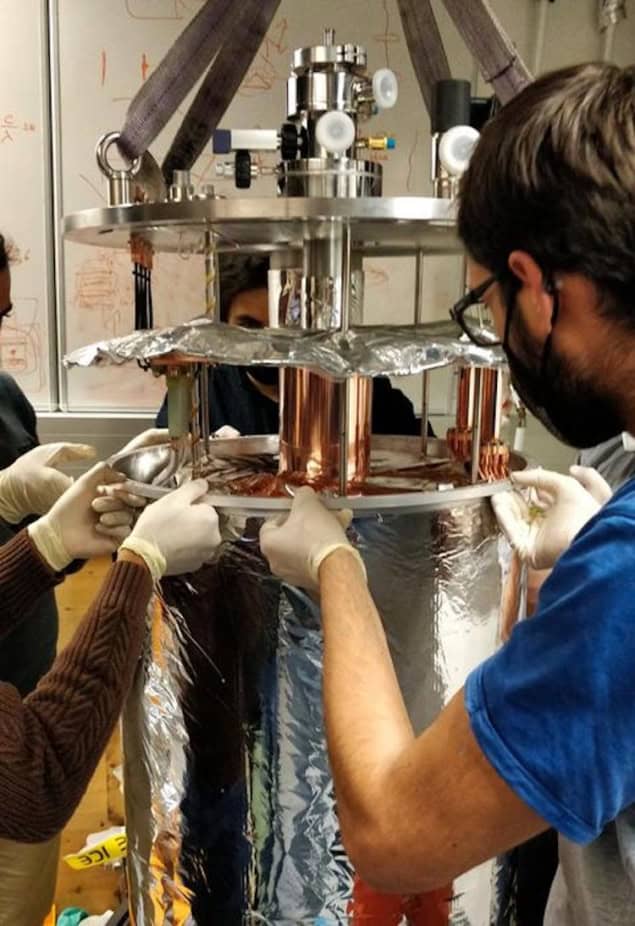

The KHI is a classic phenomenon in fluids in which waves and vortices form at the interface between two fluids moving at different speeds. “To observe the KHI in quantum systems, we need a structure containing a thin superfluid interface (a magnetic domain wall), such as in a quantum gas of ⁷Li atoms,” says Takeuchi. “We also need experimental techniques that can skilfully control the behaviour of this interface. Both of these criteria have recently been met by Choi’s group.”

The researchers began by cooling a gas of ⁷Li atoms to near absolute zero to create a multi-component Bose-Einstein condensate – a quantum superfluid containing two streams flowing at different speeds. At the interface between these streams, they observed vortices that corresponded to the predicted EFSs.

The behaviour of the KHI is universal

“We have shown that the behaviour of the KHI is universal and exists in both the classical and quantum regimes,” says Takeuchi. This finding could lead to a better understanding of quantum turbulence and to the unification of quantum and classical hydrodynamics. It could also help in the development of technologies such as next-generation storage and memory devices and spintronics, an emerging technology in which magnetic spin is used to store and transfer information using much less energy than existing electronic devices.

“By further refining the experiment, we might be able to verify certain predictions (some of which were made as long ago as the 19th century) about the wavelength and frequency of KHI-driven interface waves in non-viscous quantum fluids, like the one studied in this work,” he adds.
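For a flavour of what such predictions look like, the textbook classical result for a flat interface between two ideal (inviscid, incompressible) fluids relates the wave frequency ω to the wavenumber k. Whether this is the specific prediction Takeuchi has in mind is an assumption, and the quantum fluid in the experiment may need a different description. The sketch below evaluates that classical relation; the function names, the symbols (densities rho1 and rho2, speeds u1 and u2, interface tension sigma, gravity g) and the numbers in the example are illustrative only.

```python
import cmath


def khi_omega(k, rho1, rho2, u1, u2, sigma=0.0, g=0.0):
    """Complex frequency of an interface wave with wavenumber k.

    Classical Kelvin-Helmholtz dispersion relation for two ideal fluids,
    with fluid 1 below fluid 2. A positive imaginary part is the growth
    rate of the instability. Not taken from the study itself.
    """
    total = rho1 + rho2
    # Wave carried along at the density-weighted mean flow speed
    advection = k * (rho1 * u1 + rho2 * u2) / total
    # Gravity and interface tension try to flatten the interface
    restoring = (g * k * (rho1 - rho2) + sigma * k**3) / total
    # The velocity difference across the interface drives the instability
    shear = k**2 * rho1 * rho2 * (u1 - u2) ** 2 / total**2
    return advection + cmath.sqrt(restoring - shear)


def cutoff_wavenumber(rho1, rho2, u1, u2, sigma):
    """Wavenumber above which interface tension alone (g = 0) stabilises the flow."""
    return rho1 * rho2 * (u1 - u2) ** 2 / (sigma * (rho1 + rho2))


if __name__ == "__main__":
    # Two equal-density streams moving in opposite directions (arbitrary units)
    k_c = cutoff_wavenumber(1.0, 1.0, 0.5, -0.5, sigma=0.5)
    for k in (0.25 * k_c, 2.0 * k_c / 3.0, 2.0 * k_c):
        omega = khi_omega(k, 1.0, 1.0, 0.5, -0.5, sigma=0.5)
        print(f"k = {k:.3f}  growth rate = {omega.imag:.3f}")
```

With no gravity, waves shorter than the cutoff wavelength 2π/k_c are stable, and in this classical model the fastest-growing wave sits at two-thirds of the cutoff wavenumber.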

“In addition to the universal finger pattern we observed, we expect structures like zipper and sealskin patterns, which are unique to such multi-component quantum fluids,” Takeuchi tells Physics World. “As well as experiments, it is necessary to develop a theory that more precisely describes the motion of EFSs, the interaction between these skyrmions and their internal structure in the context of quantum hydrodynamics and spontaneous symmetry breaking.”

The study is detailed in Nature Physics.

The post Quantum fluid instability produces eccentric skyrmions appeared first on Physics World.