Electrical charge on objects in optical tweezers can be controlled precisely

An effect first observed decades ago by Nobel laureate Arthur Ashkin has been used to fine-tune the electrical charge on objects held in optical tweezers. Developed by an international team led by Scott Waitukaitis of the Institute of Science and Technology Austria, the new technique could improve our understanding of aerosols and clouds.

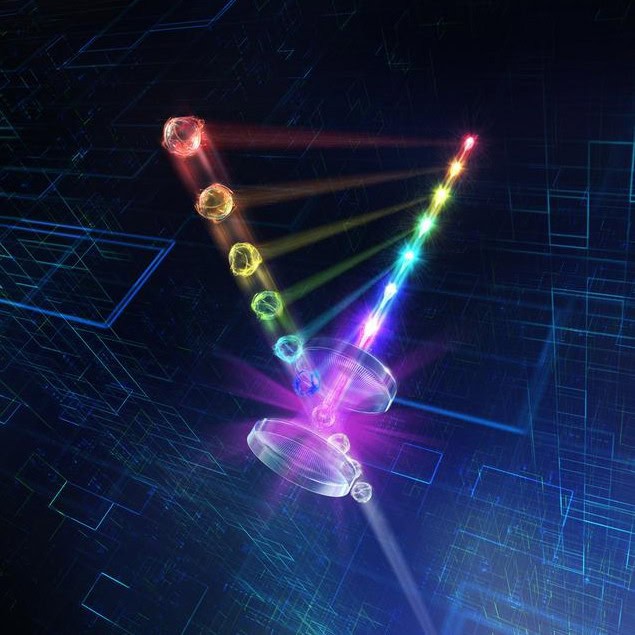

Optical tweezers use focused laser beams to trap and manipulate small objects about 100 nm to 1 micron in size. Their precision and versatility have made them a staple across fields from quantum optics to biochemistry.

Ashkin shared the 2018 Nobel prize for inventing optical tweezers. In the 1970s he noticed that trapped objects can be electrically charged by the laser light. “However, his paper didn’t get much attention, and the observation has essentially gone ignored,” explains Waitukaitis.

Waitukaitis’ team rediscovered the effect while using optical tweezers to study how charges build up in the ice crystals accumulating inside clouds. In their experiment, micron-sized silica spheres stood in for the ice, but Ashkin’s charging effect got in their way.

Bummed out

“Our goal has always been to study charged particles in air in the context of atmospheric physics – in lightning initiation or aerosols, for example,” Waitukaitis recalls. “We never intended for the laser to charge the particle, and at first we were a bit bummed out that it did so.”

Their next thought was that they had discovered a new and potentially useful phenomenon. “Out of due diligence we of course did a deep dive into the literature to be sure that no one had seen it, and that’s when we found the old paper from Ashkin,” says Waitukaitis.

In 1976, Ashkin described how optically trapped objects become charged through a nonlinear process whereby electrons absorb two photons simultaneously. These electrons can acquire enough energy to escape the object, leaving it with a positive charge.

Yet beyond this insight, Ashkin “wasn’t able to make much sense of the effect,” Waitukaitis explains. “I have the feeling he found it an interesting curiosity and then moved on.”

Shaking and scattering

To study the effect in more detail, the team modified their optical tweezers setup so its two copper lens holders doubled as electrodes, allowing them to apply an electric field along the axis of the confining, opposite-facing laser beams. If the silica sphere became charged, this field would cause it to shake, scattering a portion of the laser light back towards each lens.

The researchers picked off this portion of the scattered light using a beam splitter, then diverted it to a photodiode, allowing them to track the sphere’s position. Finally, they converted the measured amplitude of the shaking particle into a real-time charge measurement. This allowed them to track the relationship between the sphere’s charge and the laser’s tuneable intensity.
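One way to picture the amplitude-to-charge conversion is to treat the trapped sphere as a driven, damped harmonic oscillator. The sketch below is illustrative only and is not taken from the team's paper: the oscillator model, the function names, the silica density and every numerical value in the usage example are assumptions chosen for demonstration.

```python
import math

ELEMENTARY_CHARGE = 1.602176634e-19  # coulombs (exact SI value)


def sphere_mass(radius_m, density_kg_m3=2000.0):
    """Mass of a solid sphere. The default density is an assumed,
    rough value for silica, not a figure from the article."""
    return density_kg_m3 * (4.0 / 3.0) * math.pi * radius_m**3


def _response(trap_freq_hz, drive_freq_hz, damping_rate_per_s):
    """Frequency-dependent factor of a driven, damped harmonic oscillator."""
    w0 = 2.0 * math.pi * trap_freq_hz   # trap (natural) angular frequency
    w = 2.0 * math.pi * drive_freq_hz   # angular frequency of the applied field
    return math.sqrt((w0**2 - w**2) ** 2 + (damping_rate_per_s * w) ** 2)


def amplitude_from_charge(charge_c, field_v_per_m, mass_kg,
                          trap_freq_hz, drive_freq_hz, damping_rate_per_s):
    """Steady-state amplitude: A = |q| E0 / (m * response)."""
    resp = _response(trap_freq_hz, drive_freq_hz, damping_rate_per_s)
    return abs(charge_c) * field_v_per_m / (mass_kg * resp)


def charge_from_amplitude(amplitude_m, field_v_per_m, mass_kg,
                          trap_freq_hz, drive_freq_hz, damping_rate_per_s):
    """Invert the relation above. Only the magnitude of the charge is
    recovered; the sign would need the phase of the motion."""
    resp = _response(trap_freq_hz, drive_freq_hz, damping_rate_per_s)
    return amplitude_m * mass_kg * resp / field_v_per_m


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    mass = sphere_mass(radius_m=0.5e-6)
    params = dict(field_v_per_m=1.0e4, mass_kg=mass, trap_freq_hz=1.0e3,
                  drive_freq_hz=2.0e2, damping_rate_per_s=1.0e5)
    amplitude = amplitude_from_charge(10 * ELEMENTARY_CHARGE, **params)
    charge = charge_from_amplitude(amplitude, **params)
    print(f"amplitude: {amplitude:.3e} m")
    print(f"recovered charge: {charge / ELEMENTARY_CHARGE:.2f} e")
```

In this simplified picture the amplitude is proportional to the charge at a fixed drive frequency and field strength, which is why a calibrated amplitude reading could serve as a running charge readout.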

Their measurements confirmed Ashkin’s 1976 hypothesis that electrons on optically trapped objects undergo two-photon absorption, allowing them to escape. Waitukaitis and colleagues improved on this model and showed how the charge on a trapped object can be controlled precisely by simply adjusting the laser’s intensity.

As for the team’s original research goal, the effect has actually been very useful for studying the behaviour of charged aerosols.

“We can get [an object] so charged that it shoots off little ‘microdischarges’ from its surface due to breakdown of the air around it, involving just a few or tens of electron charges at a time,” Waitukaitis says. “This is going to be really cool for studying electrostatic phenomena in the context of particles in the atmosphere.”

The study is described in Physical Review Letters.

The post Electrical charge on objects in optical tweezers can be controlled precisely appeared first on Physics World.