Space Elevators Could Totally Work—if Earth Days Were Much Shorter

Grid operators around the world are under intense pressure to expand and modernize their power networks. The International Energy Agency predicts that demand for electricity will rise by 30% in this decade alone, fuelled by global economic growth and the ongoing drive towards net zero. At the same time, electrical transmission systems must be adapted to handle the intermittent nature of renewable energy sources, as well as the extreme and unpredictable weather conditions that are being triggered by climate change.

High-voltage capacitors play a crucial role in these power networks, balancing the electrical load and managing the flow of current around the grid. For more than 40 years, the standard dielectric for storing energy in these capacitors has been a thin film of a polymer material called biaxially oriented polypropylene (BOPP). However, as network operators upgrade their analogue-based infrastructure with digital technologies such as solid-state transformers and high-frequency switches, BOPP struggles to provide the thermal resilience and reliability that are needed to ensure the stability, scalability and security of the grid.

“We’re trying to bring innovation to an area that hasn’t seen it for a very long time,” says Dr Mike Ponting, Chief Scientific Officer of Peak Nano, a US firm specializing in advanced polymer materials. “Grid operators have been using polypropylene materials for a generation, with no improvement in capability or performance. It’s time to realize we can do better.”

Peak Nano has created a new capacitor film technology that addresses the needs of the digital power grid, as well as other demanding energy storage applications such as managing the power supply to data centres, charging solutions for electric cars, and next-generation fusion energy technology. The company’s Peak NanoPlex™ materials are fabricated from multiple thin layers of different polymer materials, and can be engineered to deliver enhanced performance for both electrical and optical applications. The capacitor films typically contain polymer layers anywhere between 32 and 156 nm thick, while the optical materials are fabricated with as many as 4000 layers in films thinner than 300 µm.

“When they are combined together in an ordered, layered structure, the long polymer molecules behave and interact with each other in different ways,” explains Ponting. “By putting the right materials together, and controlling the precise arrangement of the molecules within the layers, we can engineer the film properties to achieve the performance characteristics needed for each application.”

In the case of capacitor films, this process enhances BOPP’s properties by interleaving it with another polymer. Such layered films can be optimized to store four times as much energy as conventional BOPP while achieving extremely fast charge/discharge rates. Alternatively, they can be engineered to deliver longer lifetimes at operating temperatures some 50–60°C higher than existing materials. Such improved thermal resilience is useful for applications that experience more heat, such as mining and aerospace, and is also becoming an important priority for grid operators as they introduce new transmission technologies that generate more heat.

“We talked to the users of the components to find out what they needed, and then adjusted our formulations to meet those needs,” says Ponting. “Some people wanted smaller capacitors that store a lot of energy and can be cycled really fast, while others wanted an upgraded version of BOPP that is more reliable at higher temperatures.”

The multilayered materials now being produced by Peak Nano emerged from research Ponting was involved in while he was a graduate student at Case Western Reserve University in the 2000s. Plastics containing just a few layers had originally been developed for everyday applications like gift wrap and food packaging, but scientists were starting to explore the novel optical and electronic properties that emerge when the thickness of the polymer layers is reduced to the nanoscale regime.

Small samples of these polymer nanocomposites produced in the lab demonstrated their superior performance, and Peak Nano was formed in 2016 to commercialize the technology and scale up the fabrication process. “There was a lot of iteration and improvement to produce large quantities of the material while still maintaining the precision and repeatability of the nanostructured layers,” says Ponting, who has been developing these multilayered polymer materials and the required processing technology for more than 20 years. “The film properties we want to achieve require the polymer molecules to be well ordered, and it took us a long time to get it right.”

As part of this development process, Peak Nano worked with capacitor manufacturers to create a plug-and-play replacement technology for BOPP that can be used on the same manufacturing systems and capacitor designs as BOPP today. By integrating its specialist layering technology into these existing systems, Peak Nano has been able to leverage established supply chains for materials and equipment rather than needing to develop a bespoke manufacturing process. “That has helped to keep costs down, which means that our layered material is only slightly more expensive than BOPP,” says Ponting.

Ponting also points out that long term, NanoPlex is a more cost-effective option. With improved reliability and resilience, NanoPlex can double or even quadruple the lifetime of a component. “The capacitors don’t need to be replaced as often, which reduces the need for downtime and offsets the slightly higher cost,” he says.

For component manufacturers, meanwhile, the multilayered films can be used in exactly the same way as conventional materials. “Our material can be wound into capacitors using the same process as for polypropylene,” says Ponting. “Our customers don’t need to change their process; they just need to design for higher performance.”

Initial interest in the improved capabilities of NanoPlex came from the defence sector, with Peak Nano benefiting from investment and collaborative research with the US Defense Advanced Research Projects Agency (DARPA) and the Naval Research Laboratory. Optical films produced by the company have been used to fabricate lenses with a graduated refractive index, reducing the size and weight of head-mounted visual equipment while also sharpening the view. Dielectric films with a high breakdown voltage are also a common requirement within the defence community.

The post Nanostructured plastics deliver energy innovation appeared first on Physics World.

Superfluorescence is a collective quantum phenomenon in which many excited particles emit light coherently in a sudden, intense burst. It is usually only observed at cryogenic temperatures, but researchers in the US and France have now determined how and why superfluorescence occurs at room temperature in a lead halide perovskite. The work could help in the development of materials that host exotic coherent quantum states – like superconductivity, superfluidity or superfluorescence – under ambient conditions, they say.

Superfluorescence and other collective quantum phenomena are rapidly destroyed at temperatures higher than cryogenic ones because of thermal vibrations produced in the crystal lattice. In the system studied in this work, the researchers, led by physicist Kenan Gundogdu of North Carolina State University, found that excitons (bound electron–hole pairs) spontaneously form localized, coherence-preserving domains. “These solitons act like quantum islands,” explains Gundogdu. “Excitons inside these islands remain coherent while those outside rapidly dephase.”

The soliton structure acts as a shield, he adds, protecting its contents from thermal disturbances. This behaviour represents a kind of quantum analogue of “soundproofing”, that is, isolation from vibrations. “Here, coherence is maintained not by external cooling but by intrinsic self-organization,” he says.

The team, which also includes researchers from Duke University, Boston University and the Institut Polytechnique de Paris, began their experiment by exciting lead halide perovskite samples with intense femtosecond laser pulses to generate a dense population of excitons in the material. Under normal conditions, these excitons recombine and emit light incoherently, but at high enough densities, as was the case here, the researchers observed intense, time-delayed bursts of coherent emission, which is a signature of superfluorescence.

When they analysed how the emission evolved over time, the researchers observed that it fluctuated. Surprisingly, these fluctuations were not random, explains Gundogdu, but were modulated by a well-defined frequency, corresponding to a specific lattice vibrational mode. “This suggested that the coherent excitons that emit superfluorescence come from a region in the lattice in which the lattice modes themselves oscillate in synchrony.”

So how can coherent lattice oscillations arise in a thermally disordered environment? The answer involves polarons, says Gundogdu. These are groups of excitons that locally deform the lattice. “Above a critical excitation density, these polarons self-organize into a soliton, which concentrates energy into specific vibrational modes while suppressing others. This process filters out incoherent lattice motion, allowing a stable collective oscillation to emerge.”

The new work, which is detailed in Nature, builds on a previous study in which the researchers had observed superfluorescence in perovskites at room temperature – an unexpected result. They suspected that an intrinsic effect was protecting excitons from dephasing – possibly through a quantum analogue of vibration isolation as mentioned – but the mechanism behind this was unclear.

In this latest experiment, the team determined how polarons can self-organize into soliton states, and revealed an unconventional form of superfluorescence where coherence emerges intrinsically inside solitons. This coherence protection mechanism might be extended to other macroscopic quantum phenomena such as superconductivity and superfluidity.

“These effects are foundational for quantum technologies, yet how coherence survives at high temperatures is still unresolved,” Gundogdu tells Physics World. “Our findings provide a new principle that could help close this knowledge gap and guide the design of more robust, high-temperature quantum systems.”

The post Soliton structure protects superfluorescence appeared first on Physics World.

Light has always played a central role in healthcare, enabling a wide range of tools and techniques for diagnosing and treating disease. Nick Stone from the University of Exeter is a pioneer in this field, working with technologies ranging from laser-based cancer therapies to innovative spectroscopy-based diagnostics. Stone was recently awarded the Institute of Physics’ Rosalind Franklin Medal and Prize for developing novel Raman spectroscopic tools for rapid in vivo cancer diagnosis and monitoring. Physics World’s Tami Freeman spoke with Stone about his latest research.

Think about how we see the sky. It is blue due to elastic (specifically Rayleigh) scattering – when an incident photon scatters off a particle without losing any energy. But in about one in a million events, photons interacting with molecules in the atmosphere will be inelastically scattered. This changes the energy of the photon as some of it is taken by the molecule to make it vibrate.

If you shine laser light on a molecule and cause it to vibrate, the photon that is scattered from that molecule will be shifted in energy by a specific amount relating to the molecule’s vibrational mode. Measuring the wavelength of this inelastically scattered light reveals which molecule it was scattered from. This is Raman spectroscopy.
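As a rough illustration of the measurement described above, the energy shift is usually quoted as a wavenumber: the difference between the inverse wavelengths of the laser and of the scattered light. The sketch below assumes a 785 nm excitation laser and a 1000 cm⁻¹ vibrational mode; both values, and the function names, are illustrative choices rather than figures from the interview.

```python
def raman_shift_cm1(laser_nm: float, scattered_nm: float) -> float:
    """Raman shift in cm^-1. Positive means the scattered photon lost energy."""
    # 1 nm = 1e-7 cm, so a wavelength in nm converts to a wavenumber as 1e7 / nm.
    return 1e7 / laser_nm - 1e7 / scattered_nm


def scattered_wavelength_nm(laser_nm: float, shift_cm1: float) -> float:
    """Wavelength at which a mode with the given Raman shift appears."""
    return 1e7 / (1e7 / laser_nm - shift_cm1)


# Assumed example values (not from the article).
LASER_NM = 785.0
MODE_CM1 = 1000.0

stokes_nm = scattered_wavelength_nm(LASER_NM, MODE_CM1)
print(f"Scattered light appears near {stokes_nm:.1f} nm")
print(f"Recovered shift: {raman_shift_cm1(LASER_NM, stokes_nm):.1f} cm^-1")
```

With these assumed numbers the scattered light lands at roughly 852 nm, a longer wavelength than the laser because the photon has given up energy to the molecule.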

Because most of the time we’re working at room or body temperatures, most of what we observe is Stokes Raman scattering, in which the laser photons lose energy to the molecules. But if a molecule is already vibrating in an excited state (at higher temperature), it can give up energy and shift the laser photon to a higher energy. This anti-Stokes spectrum is much weaker, but can be very useful – as I’ll come back to later.

A cell in the body is basically a nucleus (one set of molecules) surrounded by the cytoplasm (another set of molecules). These molecules change subtly depending on the phenotype [set of observable characteristics] of the particular cell. If you have a genetic mutation, which is what drives cancer, the cell tends to change its relative expression of proteins, nucleic acids, glycogen and so on.

We can probe these molecules with light, and therefore determine their molecular composition. Cancer diagnostics involves identifying minute changes between the different compositions. Most of our work has been in tissues, but it can also be done in biofluids such as tears, blood plasma or sweat. You build up a molecular fingerprint of the tissue or cell of interest, and then you can compare those fingerprints to identify the disease.

We tend to perform measurements under a microscope and, because Raman scattering is a relatively weak effect, this requires good optical systems. We’re trying to use a single wavelength of light to probe molecules of interest and look for wavelengths that are shifted from that of the laser illumination. Technology improvements have provided holographic filters that remove the incident laser wavelength readily, and less complex systems that enable rapid measurements.

Absolutely, we’ve developed probes that fit inside an endoscope for diagnosing oesophageal cancer.

Earlier in my career I worked on photodynamic therapy. We would look inside the oesophagus with an endoscope to find disease, then give the patient a phototoxic drug that would target the diseased cells. Shining light on the drug causes it to generate singlet oxygen that kills the cancer cells. But I realized that the light we were using could also be used for diagnosis.

Currently, to find this invisible disease, you have to take many, many biopsies. But our in vivo probes allow us to measure the molecular composition of the oesophageal lining using Raman spectroscopy, and determine where to take biopsies from. Oesophageal cancer has a really bad outcome once it’s diagnosed symptomatically, but if you can find the disease early you can deliver effective treatments. That’s what we’re trying to do.

The very weak Raman signal, however, causes problems. With a microscope, we can use advanced filters to remove the incident laser wavelength. But sending light down an optical fibre generates unwanted signal, and we also need to remove elastically scattered light from the oesophagus. So we had to put a filter on the end of this tiny 2 mm fibre probe. In addition, we don’t want to collect photons that have travelled a long way through the body, so we needed a confocal system. We built a really complex probe, working in collaboration with John Day at the University of Bristol – it took a long time to optimize the optics and the engineering.

Yes, we have also developed a smart needle probe that’s currently in trials. We are using this to detect lymphomas – the primary cancer in lymph nodes – in the head and neck, under the armpit and in the groin.

If somebody comes forward with lumps in these areas, they usually have a swollen lymph node, which shows that something is wrong. Most often it’s following an infection and the node hasn’t gone back down in size.

This situation usually requires surgical removal of the node to decide whether cancer is present or not. Instead, we can just insert our needle probe and send light in. By examining the scattered light and measuring its fingerprint we can identify if it’s lymphoma. Indeed, we can actually see what type of cancer it is and where it has come from.

Currently, the prototype probe is quite bulky because we are trying to make it low in cost. It has to have a disposable tip, so we can use a new needle each time, and the filters and optics are all in the handpiece.

As people don’t particularly want a needle stuck in them, we are now trying to understand where the photons travel if you just illuminate the body. Red and near-infrared light travel a long way through the body, so we can use near-infrared light to probe photons that have travelled many, many centimetres.

We are doing a study looking at calcifications in a very early breast cancer called ductal carcinoma in situ (DCIS) – it’s a Cancer Research UK Grand Challenge called DCIS PRECISION, and we are just moving on to the in vivo phase.

Calcifications aren’t necessarily a sign of breast cancer – they are mostly benign; but in patients with DCIS, the composition of the calcifications can show how their condition will progress. Mammographic screening is incredibly good at picking up breast cancer, but it’s also incredibly good at detecting calcifications that are not necessarily breast cancer yet. The problem is how to treat these patients, so our aim is to determine whether the calcifications are completely fine or if they require biopsy.

We are using Raman spectroscopy to understand the composition of these calcifications, which are different in patients who are likely to progress onto invasive disease. We can do this in biopsies under a microscope and are now trying to see whether it works using transillumination, where we send near-infrared light through the breast. We could use this to significantly reduce the number of biopsies, or monitor individuals with DCIS over many years.

This is an area I’m really excited about. Nanoscale gold can enhance Raman signals by many orders of magnitude – it’s called surface-enhanced Raman spectroscopy. We can also “label” these nanoparticles by adding functional molecules to their surfaces. We’ve used unlabelled gold nanoparticles to enhance signals from the body and labelled gold to find things.

During that process, we also realized that we can use gold to provide heat. If you shine light on gold at its resonant frequency, it will heat the gold up and can cause cell death. You could easily blow holes in people with a big enough laser and lots of nanoparticles – but what we want to do is more subtle. We’re decorating the tiny gold nanoparticles with a label that will tell us their temperature.

By measuring the ratio between Stokes and anti-Stokes scattering signals (which are enhanced by the gold nanoparticles), we can measure the temperature of the gold when it is in the tumour. Then, using light, we can keep the temperature at a suitable level for treatment to optimize the outcome for the patient.
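The ratio-based thermometry described above can be sketched with the textbook Boltzmann relation for spontaneous Raman scattering, in which the anti-Stokes to Stokes intensity ratio is a frequency prefactor multiplied by exp(−hcν̃/k_BT). This is an idealized model and not the team's calibration: it ignores the wavelength dependence of the gold enhancement and of the detector, and the 785 nm laser and 1000 cm⁻¹ mode are assumed values.

```python
import math

H = 6.62607015e-34    # Planck constant, J s
C_CM = 2.99792458e10  # speed of light, cm/s (pairs with wavenumbers in cm^-1)
K_B = 1.380649e-23    # Boltzmann constant, J/K


def _prefactor(shift_cm1: float, laser_nm: float) -> float:
    """Fourth-power frequency factor relating anti-Stokes to Stokes intensity."""
    laser_cm1 = 1e7 / laser_nm
    return ((laser_cm1 + shift_cm1) / (laser_cm1 - shift_cm1)) ** 4


def anti_stokes_to_stokes_ratio(temp_k: float, shift_cm1: float, laser_nm: float) -> float:
    """Ideal intensity ratio for a mode at shift_cm1 at temperature temp_k."""
    boltzmann = math.exp(-H * C_CM * shift_cm1 / (K_B * temp_k))
    return _prefactor(shift_cm1, laser_nm) * boltzmann


def temperature_from_ratio(ratio: float, shift_cm1: float, laser_nm: float) -> float:
    """Invert the relation above to recover temperature in kelvin."""
    return -H * C_CM * shift_cm1 / (K_B * math.log(ratio / _prefactor(shift_cm1, laser_nm)))


# Assumed example: body temperature versus a mildly heated tumour.
for temp in (310.0, 318.0):
    r = anti_stokes_to_stokes_ratio(temp, 1000.0, 785.0)
    print(f"T = {temp:.0f} K -> ratio = {r:.4f}")
```

In this model the ratio rises with temperature, which is why tracking it allows the laser power to be adjusted to hold the nanoparticles at a chosen temperature.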

Ideally, we want to use 100 nm gold particles, but that is not something you can simply excrete through the kidneys. So we’ve spent the last five years trying to create nanoconstructs made from 5 nm gold particles that replicate the properties of 100 nm gold, but can be excreted. We haven’t demonstrated this excretion yet, but that’s the process we’re looking at.

We’ve just completed a five-year programme called Raman Nanotheranostics. The aim is to label our nanoparticles with appropriate antibodies that will help the nanoparticles target different cancer types. This could provide signals that tell us what is or is not present and help decide how to treat the patient.

We have demonstrated the ability to perform treatments in preclinical models, control the temperature and direct the nanoparticles. We haven’t yet achieved a multiplexed approach with all the labels and antibodies that we want. But this is a key step forward and something we’re going to pursue further.

We are also trying to put labels on the gold that will enable us to measure and monitor treatment outcomes. We can use molecules that change in response to pH, or the reactive oxygen species that are present, or other factors. If you want personalized medicine, you need ways to see how the patient reacts to the treatment, how their immune system responds. There’s a whole range of things that will enable us to go beyond just diagnosis and therapy, to actually monitor the treatment and potentially apply a boost if the gold is still there.

Light has always been used for diagnosis: “you look yellow, you’ve got something wrong with your liver”; “you’ve got blue-tinged lips, you must have oxygen depletion”. But it’s getting more and more advanced. I think what’s most encouraging is our ability to measure molecular changes that potentially reveal future outcomes of patients, and individualization of the patient pathway.

But the real breakthrough is what’s on our wrists. We are all walking around with devices that shine light in us – to measure heartbeat, blood oxygenation and so on. There are already Raman spectrometers of that sort of size. They’re not good enough for biological measurements yet, but it doesn’t take much of a technology step forward.

I could one day have a chip implanted in my wrist that could do all the things the gold nanoconstructs might do, and my watch could read it out. And this is just Raman – there are a whole host of approaches, such as photoacoustic imaging or optical coherence tomography. Combining different techniques together could provide greater understanding in a much less invasive way than many traditional medical methods. Light will always play a really important role in healthcare.

The post Harnessing the power of light for healthcare appeared first on Physics World.

Astronomers in China have observed a pulsar that becomes partially eclipsed by an orbiting companion star every few hours. This type of observation is very rare and could shed new light on how binary star systems evolve.

While most stars in our galaxy exist in pairs, the way these binary systems form and evolve is still little understood. According to current theories, when two stars orbit each other, one of them may expand so much that its atmosphere becomes large enough to encompass the other. During this “envelope” phase, mass can be transferred from one star to the other, causing the stars’ orbit to shrink over a period of around 1000 years. After this, the stars either merge or the envelope is ejected.

In the special case where one star in the pair is a neutron star, the envelope-ejection scenario should, in theory, produce a helium star that has been “stripped” of much of its material and a “recycled” millisecond pulsar – that is, a rapidly spinning neutron star that flashes radio pulses hundreds of times per second. In this type of binary system, the helium star can periodically eclipse the pulsar as it orbits around it, blocking its radio pulses and preventing us from detecting them here on Earth. Only a few examples of such a binary system have ever been observed, however, and all previous ones were in nearby dwarf galaxies called the Magellanic Clouds, rather than our own Milky Way.

Astronomers led by Jinlin Han from the National Astronomical Observatories of China say they have now identified the first system of this type in the Milky Way. The pulsar in the binary, denoted PSR J1928+1815, had been previously identified using the Five-hundred-meter Aperture Spherical radio Telescope (FAST) during the FAST Galactic Plane Pulsar Snapshot survey. These observations showed that PSR J1928+1815 has a spin period of 10.55 ms, which is relatively short for a pulsar of this type and suggests it had recently sped up by accreting mass from a companion.

The researchers used FAST to observe this suspected binary system at radio frequencies ranging from 1.0 to 1.5 GHz over a period of four and a half years. They fitted the times that the radio pulses arrived at the telescope with a binary orbit model to show that the system has an eccentricity of less than 3 × 10⁻⁵. This suggests that the pulsar and its companion star are in a nearly circular orbit. The diameter of this orbit, Han points out, is smaller than that of our own Sun, and its period – that is, the time it takes the two stars to circle each other – is correspondingly short, at 3.6 hours. For a sixth of this time, the companion star blocks the pulsar’s radio signals.
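The orbital figures quoted above can be checked with Kepler's third law. The sketch below is a back-of-the-envelope estimate only: the article does not give the stellar masses, so a 1.4 solar-mass neutron star and a 1.2 solar-mass helium star are assumed purely for illustration.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # solar mass, kg
R_SUN = 6.957e8    # solar radius, m


def orbital_separation_m(period_s: float, total_mass_kg: float) -> float:
    """Separation of a circular binary from Kepler's third law."""
    return (G * total_mass_kg * period_s**2 / (4 * math.pi**2)) ** (1 / 3)


period_s = 3.6 * 3600                 # orbital period quoted in the article
eclipse_minutes = period_s / 6 / 60   # a sixth of each orbit is eclipsed

# Assumed masses (not given in the article).
total_mass_kg = (1.4 + 1.2) * M_SUN
separation_m = orbital_separation_m(period_s, total_mass_kg)

print(f"Eclipse lasts about {eclipse_minutes:.0f} minutes per orbit")
print(f"Separation / solar diameter = {separation_m / (2 * R_SUN):.2f}")
```

Under these assumed masses the two stars sit closer together than the width of the Sun, in line with Han's remark, and each eclipse lasts about 36 minutes.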

The team also found that the rate at which the pulsar’s spin period is changing (the so-called spin period derivative) is unusually high for a millisecond-period pulsar, at 3.63 × 10⁻¹⁸ s s⁻¹. This shows that energy is rapidly being lost from the system as the pulsar spins down.
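To put the spin-down figures in context, two standard pulsar estimates can be computed from the period and its derivative: the characteristic age P/(2Ṗ) and the rotational energy loss rate 4π²IṖ/P³. The sketch assumes the conventional neutron-star moment of inertia of 10³⁸ kg m², which is not a value from the study, and the characteristic age is a crude indicator that need not match the true age of a recycled pulsar.

```python
import math

SECONDS_PER_YEAR = 3.156e7

P_S = 10.55e-3    # spin period from the article, s
P_DOT = 3.63e-18  # spin period derivative from the article, s/s
MOMENT_OF_INERTIA = 1e38  # assumed canonical neutron-star value, kg m^2


def characteristic_age_s(period_s: float, period_dot: float) -> float:
    """Characteristic spin-down age, P / (2 * Pdot)."""
    return period_s / (2 * period_dot)


def spin_down_power_w(period_s: float, period_dot: float, inertia: float) -> float:
    """Rate of rotational energy loss, 4 * pi^2 * I * Pdot / P^3."""
    return 4 * math.pi**2 * inertia * period_dot / period_s**3


age_yr = characteristic_age_s(P_S, P_DOT) / SECONDS_PER_YEAR
power_w = spin_down_power_w(P_S, P_DOT, MOMENT_OF_INERTIA)

print(f"Characteristic age: {age_yr:.2e} yr")
print(f"Spin-down power:    {power_w:.2e} W")
```

With the assumed moment of inertia, the energy loss rate comes out at roughly 10²⁸ W and the characteristic age at a few tens of millions of years.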

“We knew that PSR J1928+1815 was special from November 2021 onwards,” says Han. “Once we’d accumulated data with FAST, one of my students, ZongLin Yang, studied the evolution of such binaries in general and completed the timing calculations from the data we had obtained for this system. His results suggested the existence of the helium star companion and everything then fell into place.”

This is the first time a short-life (10⁷ years) binary consisting of a neutron star and a helium star has ever been detected, Han tells Physics World. “It is a product of the common envelope evolution that lasted for only 1000 years and that we couldn’t observe directly,” he says.

“Our new observation is the smoking gun for long-standing binary star evolution theories, such as those that describe how stars exchange mass and shrink their orbits, how the neutron star spins up by accreting matter from its companion and how the shared hydrogen envelope is ejected.”

The system could help astronomers study how neutron stars accrete matter and then cool down, he adds. “The binary detected in this work will evolve to become a system of two compact stars that will eventually merge and become a future source of gravitational waves.”

Full details of the study are reported in Science.

The post Short-lived eclipsing binary pulsar spotted in Milky Way appeared first on Physics World.

As the world celebrates the 2025 International Year of Quantum Science and Technology, it’s natural that we should focus on the exciting applications of quantum physics in computing, communication and cryptography. But quantum physics is also set to have a huge impact on medicine and healthcare. Quantum sensors, in particular, can help us to study the human body and improve medical diagnosis – in fact, several systems are close to being commercialized.

Quantum computers, meanwhile, could one day help us to discover new drugs by providing representations of atomic structures with greater accuracy and by speeding up calculations to identify potential drug reactions. But what other technologies and projects are out there? How can we forge new applications of quantum physics in healthcare and how can we help discover new potential use cases for the technology?

Those are some of the questions tackled in a recent report, on which this Physics World article is based, published by Innovate UK in October 2024. Entitled Quantum for Life, the report aims to kickstart new collaborations by raising awareness of what quantum physics can do for the healthcare sector. While the report says quite a bit about quantum computing and quantum networking, this article will focus on quantum sensors, which are closer to being deployed.

The importance of quantum science to healthcare isn’t new. In fact, when a group of academics and government representatives gathered at Chicheley Hall back in 2013 to hatch plans for the UK’s National Quantum Technologies Programme, healthcare was one of the main applications they identified. The resulting £1bn programme, which co-ordinated the UK’s quantum-research efforts, was recently renewed for another decade and – once again – healthcare is a key part of the remit.

As it happens, most major hospitals already use quantum sensors in the form of magnetic resonance imaging (MRI) machines. Pioneered in the 1970s, these devices manipulate the quantum spin states of hydrogen atoms using magnetic fields and radio waves. By measuring how long those states take to relax, MRI can image soft tissues, such as the brain, and is now a vital part of the modern medicine toolkit.
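The radio waves mentioned above have to match the frequency at which hydrogen nuclei precess in the scanner's magnetic field, which scales linearly with field strength. The short sketch below uses the standard proton value of about 42.577 MHz per tesla; the field strengths are typical clinical values chosen for illustration, not figures from the report.

```python
PROTON_GAMMA_MHZ_PER_T = 42.577  # proton gyromagnetic ratio divided by 2*pi


def larmor_frequency_mhz(field_t: float) -> float:
    """Precession (resonance) frequency of hydrogen nuclei in a field of field_t tesla."""
    return PROTON_GAMMA_MHZ_PER_T * field_t


# Assumed, typical clinical field strengths.
for field in (1.5, 3.0):
    print(f"{field:.1f} T -> {larmor_frequency_mhz(field):.1f} MHz")
```

Both results fall in the radio-frequency band, which is why MRI relies on radio waves rather than visible or infrared light.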

While an MRI machine measures the quantum properties of atoms, the sensor itself is classical, essentially consisting of electromagnetic coils that detect the magnetic flux produced when atomic spins change direction. More recently, though, we’ve seen a new generation of nanoscale quantum sensors that are sensitive enough to detect magnetic fields emitted by a target biological system. Others, meanwhile, consist of just a single atom and can monitor small changes in the environment.

As the Quantum for Life report shows, there are lots of different quantum-based companies and institutions working in the healthcare sector. There are also many promising types of quantum sensors, which use photons, electrons or spin defects within a material, typically diamond. But ultimately what matters is what quantum sensors can achieve in a medical environment.

While compiling the report, it became clear that quantum-sensor technologies for healthcare come in five broad categories. The first is what the report labels “lab diagnostics”, in which trained staff use quantum sensors to observe what is going on inside the human body. By monitoring everything from our internal temperature to the composition of cells, the sensors can help to identify diseases such as cancer.

Currently, the only way to definitively diagnose cancer is to take a sample of cells – a biopsy – and examine them under a microscope in a laboratory. Biopsy samples are often examined with visible light, but that can damage a sample, making diagnosis tricky. Another option is to use infrared radiation. By monitoring the specific wavelengths the cells absorb, the compounds in a sample can be identified, allowing molecular changes linked with cancer to be tracked.

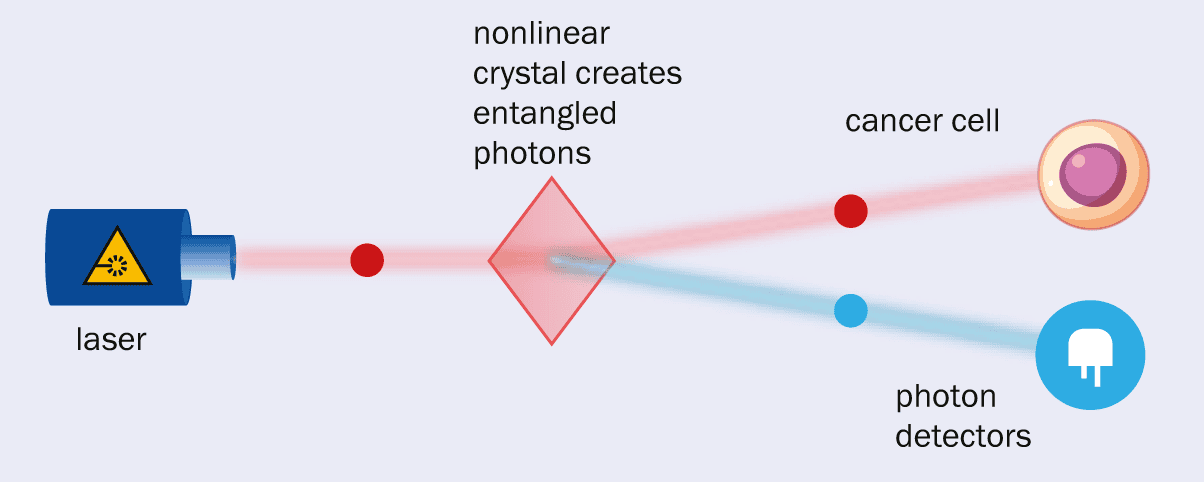

Unfortunately, it can be hard to differentiate these signals from background noise. What’s more, infrared cameras are much more expensive than those operating in the visible region. One possible solution is being explored by Digistain, a company that was spun out of Imperial College, London, in 2019. It is developing a product called EntangleCam that uses two entangled photons – one infrared and one visible (figure 1).

Figure 1. (a) One way in which quantum physics is benefiting healthcare is through entangled photons created by passing laser light through a nonlinear crystal (left). Each laser photon gets converted into two lower-energy photons – one visible, one infrared – in a process called spontaneous parametric down conversion. In technology pioneered by the UK company Digistain, the infrared photon can be sent through a sample, with the visible photon picked up by a detector. As the photons are entangled, the visible photon gives information about the infrared photon and the presence of, say, cancer cells. (b) Shown here are cells seen with traditional stained biopsy (left) and with Digistain’s method (right).

If the infrared photon is absorbed by, say, a breast cancer cell, that immediately affects the visible photon with which it is entangled. So by measuring the visible light, which can be done with a cheap, efficient detector, you can get information about the infrared photon – and hence the presence of a potential cancer cell (Phys. Rev. 108 032613). The technique could therefore allow cancer to be quickly diagnosed before a tumour has built up, although an oncologist would still be needed to identify the area for the technique to be applied.
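The photon-pair scheme described above is constrained by energy conservation: the energies of the visible and infrared photons must add up to that of the laser photon, so their inverse wavelengths add. The sketch below works this out for an assumed 532 nm pump and 650 nm visible photon. These wavelengths are hypothetical and are not taken from Digistain's device.

```python
def partner_wavelength_nm(pump_nm: float, detected_nm: float) -> float:
    """Wavelength of the second down-converted photon from energy conservation.

    Photon energy is proportional to 1/wavelength, so
    1/pump = 1/detected + 1/partner.
    """
    inverse_partner = 1.0 / pump_nm - 1.0 / detected_nm
    if inverse_partner <= 0:
        raise ValueError("detected photon cannot carry more energy than the pump photon")
    return 1.0 / inverse_partner


# Hypothetical wavelengths for illustration only.
PUMP_NM = 532.0
VISIBLE_NM = 650.0

infrared_nm = partner_wavelength_nm(PUMP_NM, VISIBLE_NM)
print(f"Infrared partner photon: {infrared_nm / 1000:.2f} um")
```

With these assumed inputs the partner photon sits near 2.9 µm, in the infrared, while its visible twin remains easy to detect with an inexpensive camera.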

The second promising application of quantum sensors lies in “point-of-care” diagnostics. We all became familiar with the concept during the COVID-19 pandemic when lateral-flow tests proved to be a vital part of the worldwide response to the virus. The tests could be taken anywhere and were quick, simple, reliable and relatively cheap. Something that had originally been designed to be used in a lab was now available to most people at home.

Quantum technology could let us miniaturize such tests further and make them more accurate, such that they could be used at hospitals, doctor’s surgeries or even at home. At the moment, biological indicators of disease tend to be measured by tagging molecules with fluorescent markers and measuring where, when and how much light they emit. But because some molecules are naturally fluorescent, those measurements have to be processed to eliminate the background noise.

One emerging quantum-based alternative is to characterize biological samples by measuring their tiny magnetic fields. This can be done, for example, using diamond specially engineered with nitrogen-vacancy (NV) defects. Each is made by removing two carbon atoms from the lattice and implanting a nitrogen atom in one of the gaps, leaving a vacancy in the other. Behaving like an atom with discrete energy levels, each defect’s spin state is influenced by the local magnetic field and can be “read out” from the way it fluoresces.

One UK company working in this area is Element Six. It has joined forces with the US-based firm QDTI to make a single-crystal diamond-based device that can quickly identify biomarkers in blood plasma, cerebrospinal fluid and other samples extracted from the body. The device detects magnetic fields produced by specific proteins, which can help identify diseases in their early stages, including various cancers and neurodegenerative conditions like Alzheimer’s. Another firm using single-crystal diamond to detect cancer cells is Germany-based Quantum Total Analysis Systems (QTAS).

Matthew Markham, a physicist who is head of quantum technologies at Element Six, thinks that healthcare has been “a real turning point” for the company. “A few years ago, this work was mostly focused on academic problems,” he says. “But now we are seeing this technology being applied to real-world use cases and that it is transitioning into industry with devices being tested in the field.”

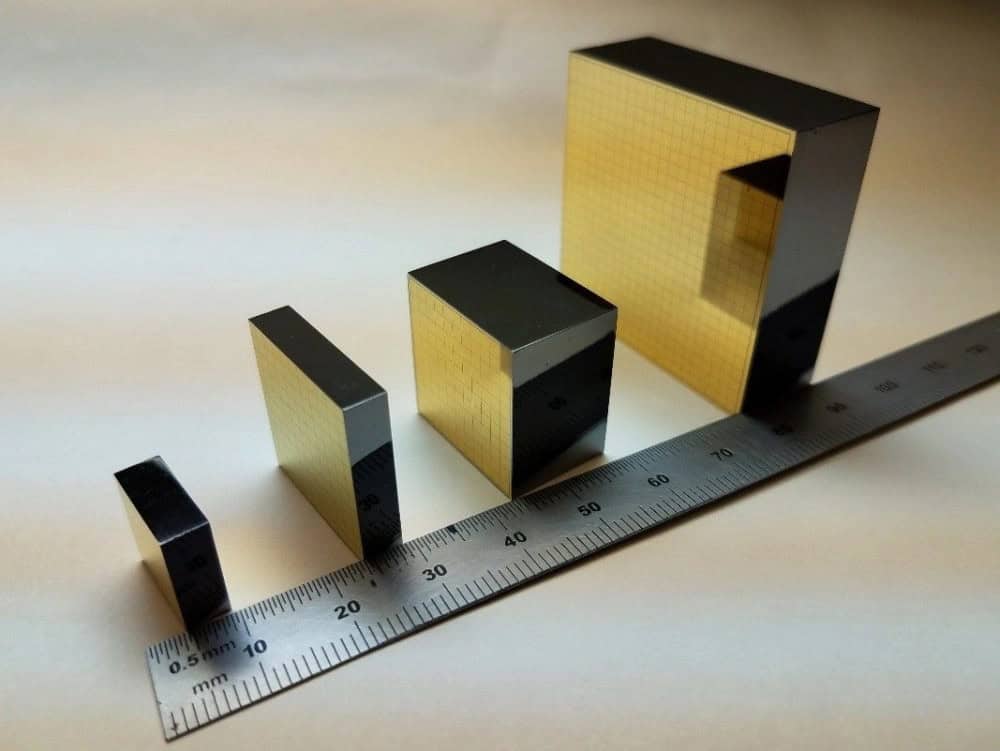

An alternative approach involves using tiny nanometre-sized diamond particles with NV centres, which have the advantage of being highly biocompatible. QT Sense of the Netherlands, for example, is using these nanodiamonds to build nano-MRI scanners that can measure the concentration of molecules that have an intrinsic magnetic field. This equipment has already been used by biomedical researchers to investigate single cells (figure 2).

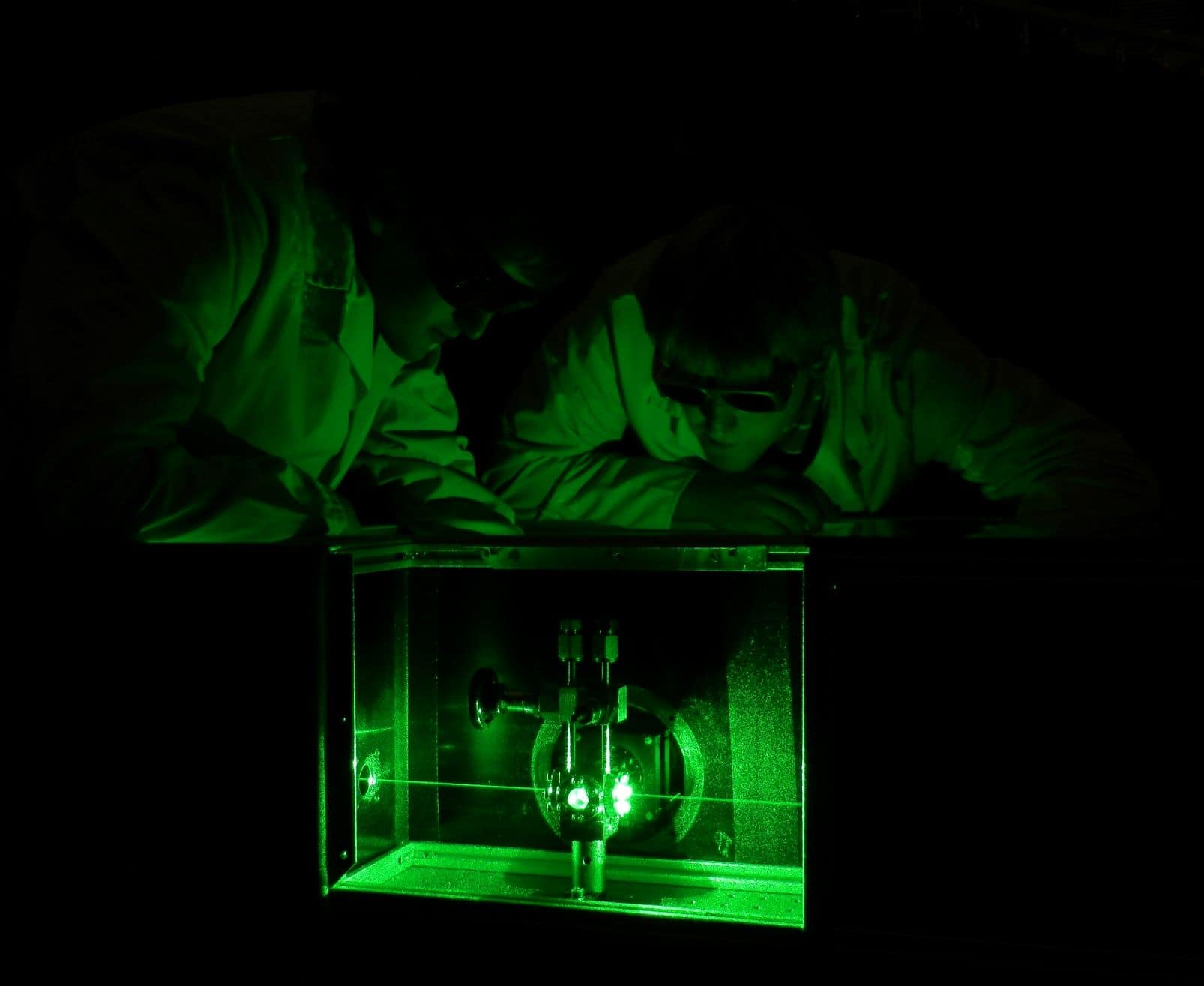

A nitrogen-vacancy defect in diamond – known as an NV centre – is made by removing two carbon atoms from the lattice and implanting a nitrogen atom in one of the gaps, leaving a vacancy in the other. Using a pulse of green laser light, NV centres can be sent from their ground state to an excited state. If the laser is switched off, the defects return to their ground state, emitting a visible photon that can be detected. However, the rate at which the fluorescent light drops while the laser is off depends on the local magnetic field. As companies like Element Six and QT Sense are discovering, NV centres in diamond are a great way of measuring magnetic fields in the human body, especially as the surrounding lattice of carbon atoms shields the NV centre from noise.

Australian firm FeBI Technologies, meanwhile, is developing a device that uses nanodiamonds to measure the magnetic properties of ferritin – a protein that stores iron in the body. The company claims its technology is nine orders of magnitude more sensitive than traditional MRI and will allow patients to monitor the amount of iron in their blood using a device that is accurate and cheap.

The third area in which quantum technologies are benefiting healthcare is what’s billed in the Quantum for Life report as “consumer medical monitoring and wearable healthcare”. In other words, we’re talking about devices that allow people to monitor their health in daily life on an ongoing basis. Such technologies are particularly useful for people who have a diagnosed medical condition, such as diabetes or high blood pressure.

NIQS Tech, for example, was spun off from the University of Leeds in 2022 and is developing a highly accurate, non-invasive sensor for measuring glucose levels. Traditional glucose-monitoring devices are painful and invasive because they basically involve sticking a needle in the body. While newer devices use light-based spectroscopic measurements, they tend to be less effective for patients with darker skin tones.

The sensor from NIQS Tech instead uses a doped silica platform, which enables quantum interference effects. When placed in contact with the skin and illuminated with laser light, the device fluoresces, with the lifetime of the fluorescence depending on the amount of glucose in the user’s blood, regardless of skin tone. NIQS has already demonstrated proof of concept with lab-based testing and now wants to shrink the technology to create a wearable device that monitors glucose levels continuously.
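To give a feel for what measuring a fluorescence lifetime involves, here is a minimal Python sketch. It assumes the signal follows a single exponential decay, I(t) = I0·exp(−t/τ), and estimates τ from a straight-line fit to the logarithm of the intensity. The function name `fit_lifetime` and the sample numbers are hypothetical; the article does not describe how NIQS Tech analyses its data, and turning a lifetime into a glucose reading would need a calibration that is not given here.

```python
import math


def fit_lifetime(times, intensities):
    """Estimate tau in I(t) = I0 * exp(-t / tau) by least squares on log(I).

    Assumes a single-exponential decay with strictly positive intensities.
    """
    if len(times) != len(intensities) or len(times) < 2:
        raise ValueError("need two or more matching time/intensity samples")
    logs = [math.log(value) for value in intensities]
    n = len(times)
    t_mean = sum(times) / n
    y_mean = sum(logs) / n
    # Slope of the best-fit line through (t, log I) equals -1 / tau.
    num = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, logs))
    den = sum((t - t_mean) ** 2 for t in times)
    slope = num / den
    if slope >= 0:
        raise ValueError("signal does not decay")
    return -1.0 / slope


# Made-up decay curve with a lifetime of 2.5 (arbitrary time units).
sample_times = [0.0, 1.0, 2.0, 3.0, 4.0]
sample_signal = [100.0 * math.exp(-t / 2.5) for t in sample_times]
estimated_tau = fit_lifetime(sample_times, sample_signal)
```

A real measurement would also have to cope with detector noise and background light, which this sketch ignores.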

The fourth application of quantum tech lies in body scanning, which allows patients to be diagnosed without needing a biopsy. One company leading in this area is Cerca Magnetics, which was spun off from the University of Nottingham. In 2023 it won the inaugural qBIG prize for quantum innovation from the Institute of Physics, which publishes Physics World, for developing wearable optically pumped magnetometers for magnetoencephalography (MEG), which measure magnetic fields generated by neuronal firings in the brain. Its devices can be used to scan patients’ brains in a comfortable seated position and even while they are moving.

Quantum-based scanning techniques could also help diagnose breast cancer, which is usually done by exposing a patient’s breast tissue to low doses of X-rays. The trouble with such mammograms is that all breasts contain a mix of low-density fatty and other, higher-density tissue. The latter creates a “white blizzard” effect against the dark background, making it challenging to differentiate between healthy tissue and potential malignancies.

That’s a particular problem for the roughly 40% of women who have a higher concentration of higher-density tissue. One alternative is to use molecular breast imaging (MBI), which involves imaging the distribution of a radioactive tracer that has been intravenously injected into a patient. This tracer, however, exposes patients to a higher (albeit still safe) dose of radiation than with a mammogram, which means that patients have to be imaged for a long time to get enough signal.

A solution could lie with the UK-based firm Kromek, which is using cadmium zinc telluride (CZT) semiconductors that produce a measurable voltage pulse from just a single gamma-ray photon. As well as being very efficient over a broad range of X-ray and gamma-ray photon energies, CZTs can be integrated onto small chips operating at room temperature. Preliminary results with Kromek’s ultralow-dose and ultrafast detectors show they work with barely one-eighth of the tracer required by traditional MBI techniques.

“Our prototypes have shown promising results,” says Alexander Cherlin, who is principal physicist at Kromek. The company is now designing and building a full-size prototype of the camera as part of Innovate UK’s £2.5m “ultralow-dose” MBI project, which runs until the end of 2025. It involves Kromek working with hospitals in Newcastle along with researchers at University College London and the University of Newcastle.

The final application of quantum sensors to medicine lies in microscopy, which these days no longer just means visible light but everything from Raman and two-photon microscopy to fluorescence lifetime imaging and multiphoton microscopy. These techniques allow samples to be imaged at different scales and speeds, but they are all reaching various technological limits.

Quantum technologies can help us break those limits. Researchers at the University of Glasgow, for example, are among those to have used pairs of entangled photons to enhance microscopy through “ghost imaging”. One photon in each pair interacts with a sample, with the image built up by detecting the effect on its entangled counterpart. The technique avoids the noise created when imaging with low levels of light (Sci. Adv. 6 eaay2652).

Researchers at the University of Strathclyde, meanwhile, have used nanodiamonds to get around the problem that dyes added to biological samples eventually stop fluorescing. Known as photobleaching, the effect prevents samples from being studied after a certain time (Roy. Soc. Op. Sci. 6 190589). In the work, samples could be continually imaged and viewed using two-photon excitation microscopy with a 10-fold increase in resolution.

But despite the great potential of quantum sensors in medicine, there are still big challenges before the technology can be deployed in real, clinical settings. Scalability – making devices reliably, cheaply and in sufficient numbers – is a particular problem. Fortunately, things are moving fast. Even since the Quantum for Life report came out late in 2024, we’ve seen new companies being founded to address these problems.

One such firm is Bristol-based RobQuant, which is developing solid-state semiconductor quantum sensors for non-invasive magnetic scanning of the brain. Such sensors, which can be built with the standard processing techniques used in consumer electronics, allow for scans on different parts of the body. RobQuant claims its sensors are robust and operate at ambient temperatures without requiring any heating or cooling.

Agnethe Seim Olsen, the company’s co-founder and chief technologist, believes that making quantum sensors robust and scalable is vital if they are to be widely adopted in healthcare. She thinks the UK is leading the way in the commercialization of such sensors and will benefit from the latest phase of the country’s quantum hubs. Bringing academia and businesses together, they include the £24m Q-BIOMED biomedical-sensing hub led by University College London and the £27.5m QuSIT hub in imaging and timing led by the University of Birmingham.

Q-BIOMED is, for example, planning to use both single-crystal diamond and nanodiamonds to develop and commercialize sensors that can diagnose and treat diseases such as cancer and Alzheimer’s at much earlier stages of their development. “These healthcare ambitions are not restricted to academia, with many startups around the globe developing diamond-based quantum technology,” says Markham at Element Six.

As with the previous phases of the hubs, allowing for further research encourages start-ups – researchers from the forerunner of the QuSIT hub, for example, set up Cerca Magnetics. The growing maturity of some of these quantum sensors will undoubtedly attract existing medical-technology companies. The next five years will be a busy and exciting time for the burgeoning use of quantum sensors in healthcare.

This article forms part of Physics World‘s contribution to the 2025 International Year of Quantum Science and Technology (IYQ), which aims to raise global awareness of quantum physics and its applications.

Stay tuned to Physics World and our international partners throughout the year for more coverage of the IYQ.

Find out more on our quantum channel.

The post How quantum sensors could improve human health and wellbeing appeared first on Physics World.

The Helgoland 2025 meeting, marking 100 years of quantum mechanics, has featured a lot of mind-bending fundamental physics, quite a bit of which has left me scratching my head.

So it was great to hear a brilliant talk by David Moore of Yale University about some amazing practical experiments using levitated, trapped microspheres as quantum sensors to detect what he calls the “invisible” universe.

If the work sounds familiar to you, that’s because Moore’s team won a Physics World Top 10 Breakthrough of the Year award in 2024 for using their technique to detect the alpha decay of individual lead-212 atoms.

Speaking in the Nordseehalle on the island of Helgoland, Moore explained the next stage of the experiment, which could see it detect neutrinos “in a couple of months” at the earliest – and “at least within a year” at the latest.

Of course, physicists have already detected neutrinos, but it’s a complicated business, generally involving huge devices in deep underground locations where background signals are minimized. Yale’s setup is much cheaper, smaller and more convenient, involving no more than a couple of lab benches.

As Moore explained, he and his colleagues first trap silica spheres at low pressure, before removing excess electrons to electrically neutralize them. They then stabilize the spheres’ rotation before cooling them to microkelvin temperatures.

In the work that won the Physics World award last year, the team used samples of radon-220, which decays first into polonium-216 and then lead-212. These nuclei embed themselves in the silica spheres, which recoil when the lead-212 decays by releasing an alpha particle (Phys. Rev. Lett. 133 023602).

Moore’s team is able to measure the tiny recoil by watching how light scatters off the spheres. “We can see the force imparted by a subatomic particle on a heavier object,” he told the audience at Helgoland. “We can see single nuclear decays.”

Now the plan is to extend the experiment to detect neutrinos. These won’t (at least initially) be the neutrinos that stream through the Earth from the Sun or even those from a nuclear reactor.

Instead, the idea will be to embed the spheres with nuclei that undergo beta decay, releasing a much lighter neutrino in the process. Moore says the team will do this within a year and, one day, potentially even use it to spot dark matter.

“We are reaching the quantum measurement regime,” he said. It’s a simple concept, even if the name – “Search for new Interactions in a Microsphere Precision Levitation Experiment” (SIMPLE) – isn’t.

This article forms part of Physics World‘s contribution to the 2025 International Year of Quantum Science and Technology (IYQ), which aims to raise global awareness of quantum physics and its applications.

Stay tuned to Physics World and our international partners throughout the next 12 months for more coverage of the IYQ.

Find out more on our quantum channel.

The post Yale researcher says levitated spheres could spot neutrinos ‘within months’ appeared first on Physics World.

The animal world – including some of its ickiest parts – never ceases to amaze. According to researchers in Canada and Singapore, velvet worm slime contains an ingredient that could revolutionize the design of high-performance polymers, making them far more sustainable than current versions.

“We have been investigating velvet worm slime as a model system for inspiring new adhesives and recyclable plastics because of its ability to reversibly form strong fibres,” explains Matthew Harrington, the McGill University chemist who co-led the research with Ali Miserez of Nanyang Technological University (NTU). “We needed to understand the mechanism that drives this reversible fibre formation, and we discovered a hitherto unknown feature of the proteins in the slime that might provide a very important clue in this context.”

The velvet worm (phylum Onychophora) is a small, caterpillar-like creature that lives in humid forests. Although several organisms, including spiders and mussels, produce protein-based slimy material outside their bodies, the slime of the velvet worm is unique. Produced from specialized papillae on each side of the worm’s head, and squirted out in jets whenever the worm needs to capture prey or defend itself, it quickly transforms from a sticky, viscoelastic gel into stiff, glassy fibres as strong as nylon.

When dissolved in water, these stiff fibres return to their biomolecular precursors. Remarkably, new fibres can then be drawn from the solution – implying that the instructions for fibre self-assembly are “encoded” within the precursors themselves, Harrington says.

Previously, the molecular mechanisms behind this reversibility were little understood. In the present study, however, the researchers used protein sequencing and the AI-guided protein structure prediction algorithm AlphaFold to identify a specific high-molecular-weight protein in the slime. Known as a leucine-rich repeat, this protein has a structure similar to that of a cell surface receptor protein called a Toll-like receptor (TLR).

In biology, Miserez explains, this type of receptor is involved in immune system response. It also plays a role in embryonic or neural development. In the worm slime, however, that’s not the case.

“We have now unveiled a very different role for TLR proteins,” says Miserez, who works in NTU’s materials science and engineering department. “They play a structural, mechanical role and can be seen as a kind of ‘glue protein’ at the molecular level that brings together many other slime proteins to form the macroscopic fibres.”

Miserez adds that the team found this same protein in different species of velvet worms that diverged from a common ancestor nearly 400 million years ago. “This means that this different biological function is very ancient from an evolutionary perspective,” he explains.

“It was very unusual to find such a protein in the context of a biological material,” Harrington adds. “By predicting the protein’s structure and its ability to bind to other slime proteins, we were able to hypothesize its important role in the reversible fibre formation behaviour of the slime.”

The team’s hypothesis is that the reversibility of fibre formation is based on receptor-ligand interactions between several slime proteins. While Harrington acknowledges that much work remains to be done to verify this, he notes that such binding is a well-described principle in many groups of organisms, including bacteria, plants and animals. It is also crucial for cell adhesion, development and innate immunity. “If we can confirm this, it could provide inspiration for making high-performance non-toxic (bio)polymeric materials that are also recyclable,” he tells Physics World.

The study, which is detailed in PNAS, was mainly based on computational modelling and protein structure prediction. The next step, say the McGill researchers, is to purify or recombinantly express the proteins of interest and test their interactions in vitro.

The post Worm slime could inspire recyclable polymer design appeared first on Physics World.

In this episode of the Physics World Weekly podcast we explore the career opportunities open to physicists and engineers looking to work within healthcare – as medical physicists or clinical engineers.

Physics World’s Tami Freeman is in conversation with two early-career physicists working in the UK’s National Health Service (NHS). They are Rachel Allcock, a trainee clinical scientist at University Hospitals Coventry and Warwickshire NHS Trust, and George Bruce, a clinical scientist at NHS Greater Glasgow and Clyde. We also hear from Chris Watt, head of communications and public affairs at IPEM, about the new IPEM careers guide.

This episode is supported by Radformation, which is redefining automation in radiation oncology with a full suite of tools designed to streamline clinical workflows and boost efficiency. At the centre of it all is AutoContour, a powerful AI-driven autocontouring solution trusted by centres worldwide.

The post Exploring careers in healthcare for physicists and engineers appeared first on Physics World.

If you dig deep enough, you’ll find that most biochemical and physiological processes rely on shuttling hydrogen atoms – protons – around living systems. Until recently, this proton transfer process was thought to occur when protons jump from water molecule to water molecule and between chains of amino acids. In 2023, however, researchers suggested that protons might, in fact, transfer at the same time as electrons. Scientists in Israel have now confirmed this is indeed the case, while also showing that proton movement is linked to the electrons’ spin, or magnetic moment. Since the properties of electron spin are defined by quantum mechanics, the new findings imply that essential life processes are intrinsically quantum in nature.

The scientists obtained this result by placing crystals of lysozyme – an enzyme commonly found in living organisms – on a magnetic substrate. Depending on the direction of the substrate’s magnetization, the spin of the electrons ejected from this substrate may be up or down. Once the electrons are ejected from the substrate, they enter the lysozymes. There, they become coupled to phonons, or vibrations of the crystal lattice.

Crucially, this coupling is not random. Instead, the chirality, or “handedness”, of the phonons determines which electron spin they will couple with – a property known as chiral induced spin selectivity.

When the scientists turned their attention to proton transfer through the lysozymes, they discovered that the protons moved much more slowly with one magnetization direction than they did with the opposite. This connection between proton transfer and spin-selective electron transfer did not surprise Yossi Paltiel, who co-led the study with his Hebrew University of Jerusalem (HUJI) colleagues Naama Goren, Nir Keren and Oded Livnah in collaboration with Nurit Ashkenazy of Ben Gurion University and Ron Naaman of the Weizmann Institute.

“Proton transfer in living organisms occurs in a chiral environment and is an essential process,” Paltiel says. “Since protons also have spin, it was logical for us to try to relate proton transfer to electron spin in this work.”

The finding could shed light on proton hopping in biological environments, Paltiel tells Physics World. “It may ultimately help us understand how information and energy are transferred inside living cells, and perhaps even allow us to control this transfer in the future.

“The results also emphasize the role of chirality in biological processes,” he adds, “and show how quantum physics and biochemistry are fundamentally related.”

The HUJI team now plans to study how the coupling between the proton transfer process and the transfer of spin polarized electrons depends on specific biological environments. “We also want to find out to what extent the coupling affects the activity of cells,” Paltiel says.

Their present study is detailed in PNAS.

The post Quantum physics guides proton motion in biological systems appeared first on Physics World.

I began my career in the 1990s at a university spin-out company, working for a business that developed vibration sensors to monitor the condition of helicopter powertrains and rotating machinery. It was a job that led to a career developing technologies and techniques for checking the “health” of machines, such as planes, trains and trucks.

What a difference three decades has made. When I started out, we would deploy bespoke systems that generated limited amounts of data. These days, everything has gone digital and there’s almost more information than we can handle. We’re also seeing a growing use of machine learning and artificial intelligence (AI) to track how machines operate.

In fact, with AI being increasingly used in medical science – for example to predict a patient’s risk of heart attacks – I’ve noticed intriguing similarities between how we monitor the health of machines and the health of human bodies. Jet engines and hearts are very different objects, but in both cases monitoring devices give us a set of digitized physical measurements.

Sensors installed on a machine provide various basic physical parameters, such as its temperature, pressure, flow rate or speed. More sophisticated devices can yield information about, say, its vibration, acoustic behaviour, or (for an engine) oil debris or quality. Bespoke sensors might even be added if an important or otherwise unchecked aspect of a machine’s performance needs to be monitored – provided the benefits of doing so outweigh the cost.

Generally speaking, the sensors you use in a particular situation depend on what’s worked before and whether you can exploit other measurements, such as those controlling the machine. But whatever sensors are used, the raw data then have to be processed and manipulated to extract particular features and characteristics.

Once you’ve done all that, you can then determine the health of the machine, rather like in medicine. Is it performing normally? Does it seem to be developing a fault? If the machine appears to be going wrong, can you try to diagnose what the problem might be?

Generally, we do this by tracking a range of parameters to look for consistent behaviour, such as a steady increase, or by seeing if a parameter exceeds a pre-defined threshold. With further analysis, we can also try to predict the future state of the machine, work out what its remaining useful life might be, or decide if any maintenance needs scheduling.
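As a rough illustration of those checks, the Python sketch below flags readings that exceed a fixed limit, estimates a steady trend with a least-squares slope, and extrapolates linearly to guess how many more samples remain before the limit is reached. The function names, the vibration readings and the alarm limit are all hypothetical, and a straight-line extrapolation is only the simplest possible stand-in for a real remaining-useful-life model.

```python
from statistics import mean


def exceeds_threshold(readings, limit):
    """Return the indices of readings above a pre-defined alarm limit."""
    return [i for i, value in enumerate(readings) if value > limit]


def trend_slope(readings):
    """Least-squares slope of the readings against their sample index."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings")
    x_mean = (n - 1) / 2
    y_mean = mean(readings)
    num = sum((i - x_mean) * (y - y_mean) for i, y in enumerate(readings))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def samples_until_limit(readings, limit):
    """Linearly extrapolate the trend; None means no upward trend."""
    slope = trend_slope(readings)
    if slope <= 0:
        return None
    latest = readings[-1]
    if latest >= limit:
        return 0.0
    return (limit - latest) / slope


# Made-up vibration readings (mm/s) and an assumed alarm limit.
vibration_mm_s = [2.0, 2.1, 2.3, 2.4, 2.6, 2.7]
alarm_limit = 4.5
alarms = exceeds_threshold(vibration_mm_s, alarm_limit)
remaining = samples_until_limit(vibration_mm_s, alarm_limit)
```

Here no reading has yet crossed the limit, but the positive slope gives an early warning that it eventually will if the trend continues.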

A diagnosis typically involves linking various anomalous physical parameters (or symptoms) to a probable cause. As machines obey the laws of physics, a diagnosis can either be based on engineering knowledge or be driven by data – or sometimes the two together. If a concrete diagnosis can’t be made, you can still get a sense of where a problem might lie before carrying out further investigation or doing a detailed inspection.

One way of doing this is to use a “borescope” – essentially a long, flexible cable with a camera on the end. Rather like an endoscope in medicine, it allows you to look down narrow or difficult-to-reach cavities. But unlike medical imaging, which generally takes place in the controlled environment of a lab or clinic, machine data are typically acquired “in the field”. The resulting images can be tricky to interpret because the light is poor, the measurements are inconsistent, or the equipment hasn’t been used in the most effective way.

Even though it can be hard to work out what you’re seeing, in-situ visual inspections are vital as they provide evidence of a known condition, which can be directly linked to physical sensor measurements. It’s a kind of health status calibration. But if you want to get more robust results, it’s worth turning to advanced modelling techniques, such as deep neural networks.

One way to predict the wear and tear of a machine’s constituent parts is to use what’s known as a “digital twin”. Essentially a virtual replica of a physical object, a digital twin is created by building a detailed model and then feeding in real-time information from sensors and inspections. The twin basically mirrors the behaviour, characteristics and performance of the real object.

Real-time health data are great because they allow machines to be serviced as and when required, rather than following a rigid maintenance schedule. For example, if a machine has been deployed heavily in a difficult environment, it can be serviced sooner, potentially preventing an unexpected failure. Conversely, if it’s been used relatively lightly and not shown any problems, then maintenance could be postponed or reduced in scope. This saves time and money because the equipment will be out of action less than anticipated.

Having information about a machine’s condition at any point in time not only allows this kind of “intelligent maintenance” but also lets us use associated resources wisely. For example, we can work out which parts will need repairing or replacing, when the maintenance will be required and who will do it. Spare parts can therefore be ordered only when required, saving money and optimizing supply chains.

Real-time health-monitoring data are particularly useful for companies owning many machines of one kind, such as airlines with a fleet of planes or haulage companies with a lot of trucks. The data give them a better understanding of how machines behave not just individually but also collectively, providing a “fleet-wide” view. Noticing and diagnosing failures from data becomes an iterative process, helping manufacturers create new or improved machine designs.

This all sounds great, but in some respects, it’s harder to understand a machine than a human. People can be taken to hospitals or clinics for a medical scan, but a wind turbine or jet engine, say, can’t be readily accessed, switched off or sent for treatment. Machines also can’t tell us exactly how they feel.

However, even humans don’t always know when there’s something wrong. That’s why it’s worth us taking a leaf from industry’s book and considering regular health monitoring and checks. There are lots of brilliant apps out there to monitor and track your heart rate, blood pressure, physical activity and sugar levels.

Just as with a machine, you can avoid unexpected failure, reduce your maintenance costs, and make yourself more efficient and reliable. You could, potentially, even live longer too.

The post People benefit from medicine, but machines need healthcare too appeared first on Physics World.

Stars are cosmic musical instruments: they vibrate with complex patterns that echo through their interiors. These vibrations, known as pressure waves, ripple through the star, similar to the earthquakes that shake our planet. The frequencies of these waves hold information about the star’s mass, age and internal structure.

In a study led by researchers at UNSW Sydney, Australia, astronomer Claudia Reyes and colleagues “listened” to the sound from stars in the M67 cluster and discovered a surprising feature: a plateau in their frequency pattern. This plateau appears during the subgiant and red giant phases, when stars expand and evolve after exhausting the hydrogen fuel in their cores. This feature, reported in Nature, reveals how deep the outer layers of the star have pushed into the interior and offers a new diagnostic to improve mass and age estimates of stars beyond the main sequence (the core-hydrogen-burning phase).

Beneath the surface of stars, hot gases are constantly rising, cooling and sinking back down, much like hot bubbles in boiling water. This constant churning is called convection. As these rising and sinking gas blobs collide or burst at the stellar surface, they generate pressure waves. These are essentially acoustic waves, bouncing within the stellar interior to create standing wave patterns.

Stars do not vibrate at just one frequency; they oscillate simultaneously at multiple frequencies, producing a spectrum of sounds. These acoustic oscillations cannot be heard in space directly, but are observed as tiny fluctuations in the star’s brightness over time.

Star clusters offer an ideal environment in which to study stellar evolution as all stars in a cluster form from the same gas cloud at about the same time with the same chemical compositions but with different masses. The researchers investigated stars from the open cluster M67, as this cluster has a rich population of evolved stars including subgiants and red giants with a chemical composition similar to the Sun’s. They measured acoustic oscillations in 27 stars using data from NASA’s Kepler/K2 mission.

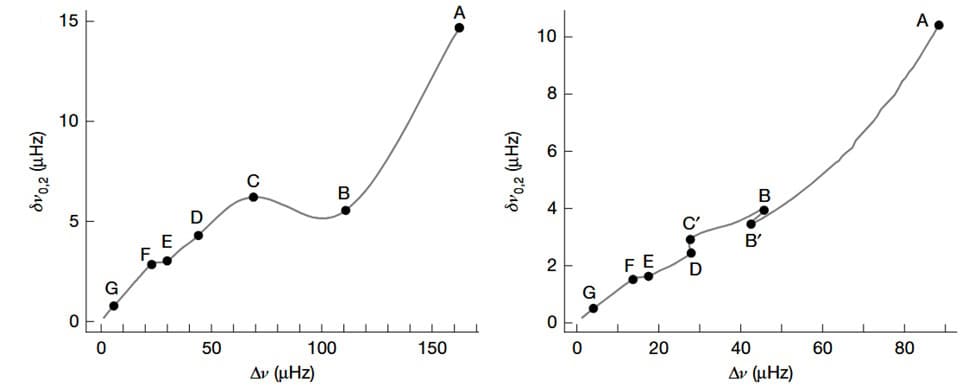

Stars oscillate across a range of tones, and in this study the researchers focused on two key features in this oscillation spectrum: large and small frequency separations. The large frequency separation, which probes stellar density, is the frequency difference between oscillations of the same angular degree (ℓ) but different radial orders (n). The small frequency separation refers to frequency differences between the modes of degrees ℓ and ℓ + 2, of consecutive orders of n. For main sequence stars, small separations are reliable age indicators because their changes during hydrogen burning are well understood. In later stages of stellar evolution, however, their relationship to the stellar interior remained unclear.
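In symbols, writing ν(n, ℓ) for the frequency of the mode with radial order n and angular degree ℓ, the two separations described here are usually expressed as follows. This is the common textbook convention and is assumed to match the one used in the study.

```latex
\Delta\nu_{\ell}(n) = \nu_{n+1,\ell} - \nu_{n,\ell},
\qquad
\delta\nu_{\ell,\ell+2}(n) = \nu_{n,\ell} - \nu_{n-1,\ell+2}
```

For ℓ = 0 the second expression gives the small separation between modes of degrees 0 and 2, δν₀₂(n) = ν(n, 0) − ν(n − 1, 2).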

In 27 stars, Reyes and colleagues investigated the small separation between modes of degrees 0 and 2. Plotting a graph of small versus large frequency separations for each star, called a C–D diagram, they uncovered a surprising plateau in small frequency separations.
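As a rough illustration of how one point on a C–D diagram could be computed, the sketch below averages the large separation and the 0–2 small separation from a table of mode frequencies. The function names and the frequencies themselves are hypothetical: `toy_frequency` generates made-up values from a simple asymptotic-style formula, and none of the numbers come from the M67 data or from the researchers' pipeline.

```python
from statistics import mean


def toy_frequency(n, ell, dnu=135.0, eps=1.45, d0=1.5):
    """Toy mode frequency in microhertz (illustrative values, not real data)."""
    return dnu * (n + ell / 2 + eps) - ell * (ell + 1) * d0


def separations(freqs):
    """Return (mean large separation, mean 0-2 small separation).

    freqs maps (n, ell) -> frequency in microhertz.
    """
    # Large separation: radial modes of consecutive order n.
    large = [freqs[(n, 0)] - freqs[(n - 1, 0)]
             for (n, ell) in freqs if ell == 0 and (n - 1, 0) in freqs]
    # Small separation: radial mode minus the ell = 2 mode of the previous order.
    small = [freqs[(n, 0)] - freqs[(n - 1, 2)]
             for (n, ell) in freqs if ell == 0 and (n - 1, 2) in freqs]
    return mean(large), mean(small)


# Build a hypothetical star with modes of degree 0 and 2 for orders 18..23.
modes = {(n, ell): toy_frequency(n, ell) for n in range(18, 24) for ell in (0, 2)}
large_sep, small_sep = separations(modes)
print(f"C-D point: large = {large_sep:.2f} uHz, small = {small_sep:.2f} uHz")
```

Repeating this for each star and plotting small against large separation gives the diagram; a plateau would show up as a stretch where the small separation stays nearly constant while the large separation keeps changing.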

The researchers traced this plateau to the evolution of the lower boundary of the star’s convective envelope. As the envelope expands and cools, this boundary sinks deeper into the interior. Along this boundary, the density and sound speed change rapidly due to the difference in chemical composition on either side. These steep changes cause acoustic glitches that disturb how the pressure waves move through the star and temporarily stall the evolution of the small frequency separations, observed as a plateau in the frequency pattern.

This stalling occurs at a specific stage in stellar evolution – when the convective envelope deepens enough to encompass nearly 80% of the star’s mass. To confirm this connection, the researchers varied the amount of convective boundary mixing in their stellar models. They found that the depth of the envelope directly influenced both the timing and shape of the plateau in the small separations.

This plateau serves as a new diagnostic tool to identify a specific evolutionary stage in red giant stars and improve estimates of their mass and age.

“The discovery of the ‘plateau’ frequencies is significant because it represents one more corroboration of the accuracy of our stellar models, as it shows how the turbulent regions at the bottom of a star’s envelope affect the sound speed,” explains Reyes, who is now at the Australian National University in Canberra. “They also have great potential to help determine with ease and great accuracy the mass and age of a star, which is of great interest for galactic archaeology, the study of the history of our galaxy.”

The sounds of starquakes offer a new window to study the evolution of stars and, in turn, recreate the history of our galaxy. Clusters like M67 serve as benchmarks to study and test stellar models and understand the future evolution of stars like our Sun.

“We plan to look for stars in the field which have very well-determined masses and which are in their ‘plateau’ phase,” says Reyes. “We will use these stars to benchmark the diagnostic potential of the plateau frequencies as a tool, so it can later be applied to stars all over the galaxy.”

The post New analysis of M67 cluster helps decode the sound of stars appeared first on Physics World.

Powerful flares on highly magnetic neutron stars called magnetars could produce up to 10% of the universe’s gold, silver and platinum, according to a new study. What is more, astronomers may have already observed this cosmic alchemy in action.

Gold, silver, platinum and a host of other rare heavy nuclei are known as rapid-process (r-process) elements. This is because astronomers believe that these elements are produced by the rapid capture of neutrons by lighter nuclei. Neutrons can only exist outside of an atomic nucleus for about 15 min before decaying (except in the most extreme environments). This means that the r-process must be fast and take place in environments rich in free neutrons.
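To see why the short neutron lifetime forces the r-process to be fast, the sketch below evaluates simple exponential decay for free neutrons. It assumes a mean lifetime of about 880 s, a round value close to the "about 15 min" quoted above; the constant and the function name are illustrative choices, not part of the study.

```python
import math

# Assumed approximate mean lifetime of a free neutron, in seconds.
NEUTRON_MEAN_LIFETIME_S = 880.0


def surviving_fraction(t_seconds, tau=NEUTRON_MEAN_LIFETIME_S):
    """Fraction of free neutrons that have not yet decayed after t_seconds."""
    return math.exp(-t_seconds / tau)


for minutes in (1, 15, 60):
    fraction = surviving_fraction(minutes * 60)
    print(f"after {minutes:>2} min: {fraction:.3f} of free neutrons remain")
```

After one mean lifetime only about a third of the neutrons are left, so neutron capture has to outpace this decay for heavy nuclei to build up.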

In August 2017, an explosion resulting from the merger of two neutron stars was witnessed by telescopes operating across the electromagnetic spectrum and by gravitational-wave detectors. Dubbed a kilonova, the explosion produced approximately 16,000 Earth-masses worth of r-process elements, including about ten Earth masses of gold and platinum.

While the observations seem to answer the question of where precious metals came from, there remains a suspicion that neutron-star mergers cannot explain the entire abundance of r-process elements in the universe.

Now researchers led by Anirudh Patel, who is a PhD student at New York’s Columbia University, have created a model that describes how flares on the surface of magnetars can create r-process elements.

Patel tells Physics World that “the rate of giant flares is significantly greater than mergers”. However, given that one merger “produces roughly 10,000 times more r-process mass than a single magnetar flare”, neutron-star mergers are still the dominant factory of rare heavy elements.

A magnetar is an extreme type of neutron star with a magnetic field strength of up to a thousand trillion gauss. This makes magnetars the most magnetic objects in the universe. Indeed, if a magnetar were as close to Earth as the Moon, its magnetic field would wipe your credit card.

Astrophysicists believe that when a magnetar’s powerful magnetic fields are pulled taut, the magnetic tension will inevitably snap. This would result in a flare, which is an energetic ejection of neutron-rich material from the magnetar’s surface.

However, the physics isn’t entirely understood, according to Jakub Cehula of Charles University in the Czech Republic, who is a member of Patel’s team. “While the source of energy for a magnetar’s giant flares is generally agreed to be the magnetic field, the exact mechanism by which this energy is released is not fully understood,” he explains.

One possible mechanism is magnetic reconnection, which creates flares on the Sun. Flares could also be produced by energy released during starquakes following a build-up of magnetic stress. However, neither satisfactorily explains the giant flares, of which only nine have thus far been detected.

In 2024 Cehula led research that attempted to explain the flares by combining starquakes with magnetic reconnection. “We assumed that giant flares are powered by a sudden and total dissipation of the magnetic field right above a magnetar’s surface,” says Cehula.

This sudden release of energy drives a shockwave into the magnetar’s neutron-rich crust, blasting a portion of it into space at velocities greater than a tenth of the speed of light, where in theory heavy elements are formed via the r-process.

Remarkably, astronomers may have already witnessed this in 2004, when a giant magnetar flare was spotted as a half-second gamma-ray burst that released more energy than the Sun does in a million years. What happened next remained unexplained until now. Ten minutes after the initial burst, the European Space Agency’s INTEGRAL satellite detected a second, weaker signal that was not understood.

Now, Patel and colleagues have shown that the r-process in this flare created unstable isotopes that quickly decayed into stable heavy elements – creating the gamma-ray signal.

Patel calculates that the 2004 flare resulted in the creation of two million billion billion kilograms of r-process elements, equivalent to about the mass of Mars.
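The verbal figure can be turned into scientific notation and compared with familiar masses. The sketch below does that arithmetic; the planetary and solar masses are assumed round reference values rather than numbers from the article, and the helper name is hypothetical.

```python
import math

# Figure quoted in the text: "two million billion billion kilograms".
FLARE_YIELD_KG = 2e6 * 1e9 * 1e9

# Assumed round reference masses in kilograms (not taken from the article).
MARS_MASS_KG = 6.42e23
EARTH_MASS_KG = 5.97e24
SOLAR_MASS_KG = 1.99e30


def in_units_of(mass_kg, unit_kg):
    """Express mass_kg as a multiple of unit_kg."""
    return mass_kg / unit_kg


print(f"Flare yield: {FLARE_YIELD_KG:.1e} kg")
print(f"  in Mars masses:  {in_units_of(FLARE_YIELD_KG, MARS_MASS_KG):.2f}")
print(f"  in Earth masses: {in_units_of(FLARE_YIELD_KG, EARTH_MASS_KG):.2f}")
print(f"  in solar masses: {in_units_of(FLARE_YIELD_KG, SOLAR_MASS_KG):.1e}")
```

With these reference values the yield comes out at a few Mars masses, or about a millionth of a solar mass, so the comparison with Mars holds at the order-of-magnitude level.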

Extrapolating, Patel calculates that giant flares on magnetars contribute between 1% and 10% of all the r-process elements in the universe.
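A back-of-the-envelope sketch shows how a small per-event yield can still add up. It assumes a toy model with only two sources, flares and mergers, and uses the quoted figure that one merger yields roughly 10,000 times more r-process mass than one flare. The function name is hypothetical, and the two-source simplification is an assumption for illustration, not the method used in the study.

```python
# Quoted in the text: one merger yields roughly 10,000 times more than one flare.
YIELD_RATIO = 10_000


def required_rate_ratio(flare_fraction, yield_ratio=YIELD_RATIO):
    """Giant flares per merger needed for flares to supply flare_fraction
    of the total r-process mass, in a toy model with only these two sources."""
    return yield_ratio * flare_fraction / (1.0 - flare_fraction)


for fraction in (0.01, 0.10):
    ratio = required_rate_ratio(fraction)
    print(f"{fraction:.0%} contribution needs about {ratio:,.0f} flares per merger")
```

In this simplified picture, a contribution of a few per cent requires giant flares to outnumber mergers by a factor of order a hundred to a thousand.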

“This estimate accounts for the fact that these giant flares are rare,” he says. “But it’s also important to note that magnetars have lifetimes of 1000 to 10,000 years, so while there may only be a couple of dozen magnetars known to us today, there have been many more magnetars that have lived and died over the course of the 13 billion-year history of our galaxy.”

Magnetars would have been produced early in the universe by the supernovae of massive stars, whereas it can take a billion years or longer for two neutron stars to merge. Hence, magnetars would have been a more dominant source of r-process elements in the early universe. However, they may not have been the only source.

“If I had to bet, I would say there are other environments in which r-process elements can be produced, for example in certain rare types of core-collapse supernovae,” says Patel.

Either way, it means that some of the gold and silver in your jewellery was forged in the violence of immense magnetic fields snapping on a dead star.

The research is described in Astrophysical Journal Letters.

The post How magnetar flares give birth to gold and platinum appeared first on Physics World.

Subtle quantum effects within atomic nuclei can dramatically affect how some nuclei break apart. By studying 100 isotopes with masses below that of lead, an international team of physicists uncovered a previously unknown region in the nuclear landscape where nuclei split into fragments in an unexpected way. This is driven not by the usual forces, but by shell effects rooted in quantum mechanics.

“When a nucleus splits apart into two fragments, the mass and charge distribution of these fission fragments exhibits the signature of the underlying nuclear structure effect in the fission process,” explains Pierre Morfouace of Université Paris-Saclay, who led the study. “In the exotic region of the nuclear chart that we studied, where nuclei do not have many neutrons, a symmetric split was previously expected. However, the asymmetric fission means that a new quantum effect is at stake.”

This unexpected discovery not only sheds light on the fine details of how nuclei break apart but also has far-reaching implications. These range from the development of safer nuclear energy to understanding how heavy elements are created during cataclysmic astrophysical events like stellar explosions.

Fission is the process by which a heavy atomic nucleus splits into smaller fragments. It is governed by a complex interplay of forces. The strong nuclear force, which binds protons and neutrons together, competes with the electromagnetic repulsion between positively charged protons. The result is that certain nuclei are unstable and typically undergo symmetric fission.

But there’s another, subtler phenomenon at play: quantum shell effects. These arise because protons and neutrons inside the nucleus tend to arrange themselves into discrete energy levels or “shells,” much like electrons do in atoms.

“Quantum shell effects [in atomic electrons] play a major role in chemistry, where they are responsible for the properties of noble gases,” says Cedric Simenel of the Australian National University, who was not involved in the study. “In nuclear physics, they provide extra stability to spherical nuclei with so-called ‘magic’ numbers of protons or neutrons. Such shell effects drive heavy nuclei to often fission asymmetrically.”

In the case of very heavy nuclei, such as uranium or plutonium, this asymmetry is well documented. But in lighter, neutron-deficient nuclei – those with fewer neutrons than their stable counterparts – researchers had long expected symmetric fission, where the nucleus breaks into two roughly equal parts. This new study challenges that view.

To investigate fission in this less-explored part of the nuclear chart, scientists from the R3B-SOFIA collaboration carried out experiments at the GSI Helmholtz Centre for Heavy Ion Research in Darmstadt, Germany. They focused on nuclei ranging from iridium to thorium, many of which had never been studied before. The nuclei were fired at high energies into a lead target to induce fission.

The fragments produced in each fission event were carefully analysed using a suite of high-resolution detectors. A double ionization chamber captured the number of protons in each product, while a superconducting magnet and time-of-flight detectors tracked their momentum, enabling a detailed reconstruction of how the split occurred.

Using this method, the researchers found that the lightest fission fragments were frequently formed with 36 protons, which is the atomic number of krypton. This pattern suggests the presence of a stabilizing shell effect at that specific proton number.

“Our data reveal the stabilizing effect of proton shells at Z=36,” explains Morfouace. “This marks the identification of a new ‘island’ of asymmetric fission, one driven by the light fragment, unlike the well-known behaviour in heavier actinides. It expands our understanding of how nuclear structure influences fission outcomes.”
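A short sketch makes the asymmetry concrete. If the light fragment carries 36 protons, conservation of charge fixes the proton number of the heavy fragment. The example assumes a simple binary split in which every proton ends up in one of the two fragments, and the list of parent elements is an illustrative selection within the iridium-to-thorium range, not the set of isotopes actually measured.

```python
KRYPTON_Z = 36  # proton number of the favoured light fragment

# Atomic numbers of a few parent elements between iridium and thorium.
PARENT_Z = {"Ir": 77, "Hg": 80, "Pb": 82, "Rn": 86, "Th": 90}


def heavy_fragment_z(parent_z, light_z=KRYPTON_Z):
    """Proton number of the heavy fragment in a binary split (charge conserved)."""
    return parent_z - light_z


for symbol, z in PARENT_Z.items():
    heavy = heavy_fragment_z(z)
    # A symmetric split would instead give about z / 2 protons to each fragment.
    print(f"{symbol} (Z={z}): light Z={KRYPTON_Z}, heavy Z={heavy}, "
          f"symmetric split would be about Z={z / 2:.1f} each")
```

For mercury, for instance, this gives fragments with 36 and 44 protons instead of two fragments with 40 each.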

“Experimentally, what makes this work unique is that they provide the distribution of protons in the fragments, while earlier measurements in sub-lead nuclei were essentially focused on the total number of nucleons,” comments Simenel.

Since quantum shell effects are tied to specific numbers of protons or neutrons, not just the overall mass, these new measurements offer direct evidence of how proton shell structure shapes the outcome of fission in lighter nuclei. This makes the results particularly valuable for testing and refining theoretical models of fission dynamics.

“This work will undoubtedly lead to further experimental studies, in particular with more exotic light nuclei,” Simenel adds. “However, to me, the ball is now in the camp of theorists who need to improve their modelling of nuclear fission to achieve the predictive power required to study the role of fission in regions of the nuclear chart not accessible experimentally, as in nuclei formed in the astrophysical processes.”

The research is described in Nature.

The post Subtle quantum effects dictate how some nuclei break apart appeared first on Physics World.

Five-body recombination, in which five identical atoms form a tetramer molecule and a single free atom, could be the largest contributor to loss from ultracold atom traps at specific “Efimov resonances”, according to calculations done by physicists in the US. The process, which is less well understood than three- and four-body recombination, could be useful for building molecules, and potentially for modelling nuclear fusion.
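One common way to describe such losses is a rate equation for the atomic density. The form below is a textbook-style sketch, not an expression taken from the calculations: n is the local density of trapped atoms, N is the number of atoms taking part in the recombination event, and L_N is a loss-rate coefficient introduced here for illustration.

```latex
% Five identical atoms A recombine into a tetramer and a free atom
A + A + A + A + A \;\longrightarrow\; A_4 + A

% N-body loss from the trap (N = 5 for five-body recombination)
\frac{\mathrm{d}n}{\mathrm{d}t} = -L_N \, n^{N}
```

Because the loss rate scales as the N-th power of the density, higher-order processes are usually negligible in dilute gases unless the coefficient is strongly enhanced, for example near a resonance.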

A collision involving trapped atoms can be either elastic – in which the internal states of the atoms and their total kinetic energy remain unchanged – or inelastic, in which there is an interchange between the kinetic energy of the system and the internal energy states of the colliding atoms.