It’s rare to come across someone who’s been responsible for enabling a seismic shift in society that has affected almost everyone and everything. Tim Berners-Lee, who invented the World Wide Web, is one such person. His new memoir This is for Everyone unfolds the history and development of the Web and, in places, of the man himself.

Berners-Lee was born in London in 1955 to parents, originally from Birmingham, who met while working on the Ferranti Mark 1 computer and knew Alan Turing. Theirs was a creative, intellectual and slightly chaotic household. His mother could maintain a motorbike with fence wire and pliers, and was a crusader for equal rights in the workplace. His father – brilliant and absent-minded – taught Berners-Lee about computers and queuing theory. A childhood of camping and model trains, it was, in Berners-Lee’s view, idyllic.

Berners-Lee had the good fortune to be supported by a series of teachers and managers who recognized his potential and unique way of working. He studied physics at the University of Oxford (his tutor “going with the flow” of Berners-Lee’s unconventional notation and ability to approach problems from oblique angles) and built his own computer. After graduating, he married and, following a couple of jobs, took a six-month placement at the CERN particle-physics lab in Geneva in 1980.

This placement set “a seed that sprouted into a tool that shook up the world”. Berners-Lee saw how difficult it was to share information stored in different languages in incompatible computer systems and how, in contrast, information flowed easily when researchers met over coffee, connected semi-randomly and talked. While at CERN, he therefore wrote a rough prototype for a program to link information in a type of web rather than a structured hierarchy.

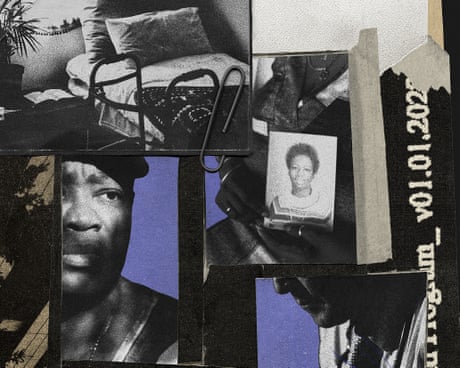

Back at CERN, Tim Berners-Lee developed his vision of a “universal portal” to information

The placement ended and the program was ignored, but four years later Berners-Lee was back at CERN. Now divorced and soon to remarry, he developed his vision of a “universal portal” to information. It proved to be the perfect time. All the tools necessary to achieve the Web – the Internet, address labelling of computers, network cables, data protocols, the hypertext language that allowed cross-referencing of text and links on the same computer – had already been developed by others.

Berners-Lee saw the need for a user-friendly interface, using hypertext that could link to information on other computers across the world. His excitement was “uncontainable”, and according to his line manager “few of us if any could understand what he was talking about”. But Berners-Lee’s managers supported him and freed him from his actual job so that he could become the world’s first web developer.

Having a vision was one thing, but getting others to share it was another. People at CERN only really started to use the Web properly once the lab’s internal phone book was made available on it. As a student at the time, I can confirm that it was much, much easier to use the Web than to log on to CERN’s clunky IBM mainframe, where phone numbers had previously been stored.

Wider adoption relied on a set of volunteer developers, working with open-source software, to make browsers and platforms that were attractive and easy to use. CERN agreed to donate the intellectual property for web software to the public domain, which helped. But the path to today’s Web was not smooth: standards risked diverging and companies wanted to build applications that hindered information sharing.

Feeling that “the Web was outgrowing my institution” and “would be a distraction” to a lab whose core mission was physics, Berners-Lee moved to the Massachusetts Institute of Technology in 1994. There he founded the World Wide Web Consortium (W3C) to ensure consistent, accessible standards were followed by everyone as the Web developed into a global enterprise. The progression sounds straightforward, although earlier accounts, such as James Gillies and Robert Cailliau’s 2000 book How the Web Was Born, imply some rivalry between institutions that is glossed over here.

The rest is history, but not quite the history that Berners-Lee had in mind. By 1995 big business had discovered the possibilities of the Web to maximize influence and profit. Initially inclined to advise people to share good things and not search for bad things, Berners-Lee had reckoned without the insidious power of “manipulative and coercive” algorithms on social networks. Collaborative sites like Wikipedia are closer to his vision of an ideal Web: an emergent good arising from individual empowerment. The flip side of human nature seems to come as a surprise.

The rest of the book brings us up to date with Berners-Lee’s concerns (data, privacy, misuse of AI, toxic online culture), his hopes (the good use of AI), a third marriage and his move into a data-handling business. There are some big awards and an impressive amount of name dropping; he is excited by Order of Merit lunches with the Queen and by sitting next to Paul McCartney’s family at the opening ceremony of the London Olympics in 2012. A flick through the index reveals names ranging from Al Gore and Bono to Lucian Freud. These are not your average computing technology circles.

There are brief character studies to illustrate some of the main players, but don’t expect much insight into their lives. This goes for Berners-Lee too, who doesn’t step back to reflect much on those around him, or indeed on his own motives beyond that vision of a Web for all enabling the best of humankind. He is firmly future focused.

Still, there is no one more qualified to describe what the Web was intended for, its core philosophy, and what caused it to develop to where it is today. You’ll enjoy the book whether you want an insight into the inner workings that make your web browsing possible, to relive old and forgotten browser names, or to see how big tech wants to monetize and monopolize your online time. It is an easy read from an important voice.

The book ends with a passionate statement for what the future could be, with businesses and individuals working together to switch the Web from “the attention economy to the intention economy”. It’s a future where users are no longer distracted by social media and manipulated by attention-grabbing algorithms; instead, computers and services do what users want them to do, with the information that users want them to have.

Berners-Lee is still optimistic, still an incurable idealist, still driven by vision. And perhaps still a little naïve too in believing that everyone’s values will align this time.

- 2025 Macmillan 400pp £25.00/$30.00hb

The post Tim Berners-Lee: why the inventor of the Web is ‘optimistic, idealistic and perhaps a little naïve’ appeared first on Physics World.