A Sensed Presence May Be More Than Just an Eerie Figment of the Imagination

The United States’ goal of completing a crewed moon mission before China has been dubbed the new space race, reminiscent of the days of Apollo. As with Apollo and the space race with the Soviet Union, the principle of non-sovereignty is at issue, as is the rule-based international order. The issue of non-sovereignty in […]

The post A soft power strategy to preserve non-sovereignty from Chinese land claims on the moon appeared first on SpaceNews.

Radiation treatment for patients with lung cancer represents a balancing act, particularly if malignant lesions are centrally located near to critical structures. The radiation may destroy the tumour, but vital organs may be seriously damaged as well.

The standard treatment for non-small cell lung cancer (NSCLC) is stereotactic ablative body radiotherapy (SABR), which delivers intense radiation doses in just a few treatment sessions and achieves excellent local control. For ultracentral lung lesions, however – defined as having a planning target volume (PTV) that abuts or overlaps the proximal bronchial tree, oesophagus or pulmonary vessels – the high risk of severe radiation toxicity makes SABR highly challenging.

A research team at GenesisCare UK, an independent cancer care provider operating nine treatment centres in the UK, has now demonstrated that stereotactic MR-guided adaptive radiotherapy (SMART)-based SABR may be a safer and more effective option for treating ultracentral metastatic lesions in patients with histologically confirmed NSCLC. They report their findings in Advances in Radiation Oncology.

SMART uses diagnostic-quality MR scans to provide real-time imaging, 3D multiplanar soft-tissue tracking and automated beam control of an advanced linear accelerator. The idea is to use daily online volume adaptation and plan re-optimization to account for any changes in tumour size and position relative to organs-at-risk (OAR). Real-time imaging enables treatment in breath-hold with gated beam delivery (automatically pausing delivery if the target moves outside a defined boundary), eliminating the need for an internal target volume and enabling smaller PTV margins.
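The gating rule described above can be illustrated with a minimal sketch. It assumes the tracked target is reduced to a 2D centroid and the boundary to a circle; `GatingWindow`, `beam_enabled` and `duty_cycle` are hypothetical names, and clinical systems apply their own tracking and gating criteria rather than this simplification.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class GatingWindow:
    """Hypothetical circular gating boundary in the imaging plane."""
    centre_x_mm: float
    centre_y_mm: float
    radius_mm: float


def beam_enabled(target_mm, window):
    """Return True if the tracked target centroid lies inside the boundary."""
    dx = target_mm[0] - window.centre_x_mm
    dy = target_mm[1] - window.centre_y_mm
    return hypot(dx, dy) <= window.radius_mm


def duty_cycle(track_mm, window):
    """Fraction of tracked frames in which the beam would be on."""
    states = [beam_enabled(point, window) for point in track_mm]
    return sum(states) / len(states)
```

With a 3 mm window, a frame in which the target drifts 4 mm from the centre pauses the beam, and delivery resumes once the target returns inside the boundary.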

The approach offers potential to enhance treatment precision and target coverage while improving sparing of adjacent organs compared with conventional SABR, first author Elena Moreno-Olmedo and colleagues contend.

The team conducted a study to assess the incidence of SABR-related toxicities in patients with histologically confirmed NSCLC undergoing SMART-based SABR. The study included 11 patients with 18 ultracentral lesions, the majority of whom had oligometastatic or oligoprogressive disease.

Patients received five to eight treatment fractions, to a median dose of 40 Gy (ranging from 30 to 60 Gy). The researchers generated fixed-field SABR plans with dosimetric aims including a PTV V100% (the volume receiving at least 100% of the prescription dose) of 95% or above, a PTV V95% of 98% or above and a maximum dose of between 110% and 140%. PTV coverage was compromised where necessary to meet OAR constraints, with a minimum PTV V100% of at least 70%.
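As a rough illustration of how these coverage aims could be checked, the sketch below treats the PTV as a list of equal-volume voxel doses. The function names and the dictionary layout are assumptions made for this example, not the planning system's interface, and real plans are evaluated from full dose-volume histograms.

```python
def volume_fraction_at_least(doses_gy, threshold_gy):
    """Percentage of equal-volume voxels receiving at least threshold_gy."""
    covered = sum(1 for dose in doses_gy if dose >= threshold_gy)
    return 100.0 * covered / len(doses_gy)


def check_plan(ptv_doses_gy, prescription_gy):
    """Compare PTV coverage with the aims quoted in the text."""
    v100 = volume_fraction_at_least(ptv_doses_gy, prescription_gy)
    v95 = volume_fraction_at_least(ptv_doses_gy, 0.95 * prescription_gy)
    dmax_pct = 100.0 * max(ptv_doses_gy) / prescription_gy
    return {
        "V100%": v100,
        "V95%": v95,
        "Dmax%": dmax_pct,
        # Aims: V100% >= 95%, V95% >= 98%, maximum dose within 110-140%.
        "meets_aims": v100 >= 95.0 and v95 >= 98.0 and 110.0 <= dmax_pct <= 140.0,
        # Floor applied when coverage is compromised to protect OARs.
        "meets_minimum": v100 >= 70.0,
    }
```

A plan that falls short of the 95% aim but keeps V100% at or above 70% would report `meets_aims` as false and `meets_minimum` as true, mirroring the compromise described above.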

SABR was performed using a 6 MV 0.35 T MRIdian linac with gated delivery during repeated breath-holds, under continuous MR guidance. Based on daily MRI scans, online plan adaptation was performed for all of the 78 delivered fractions.

The researchers report that both the PTV volume and PTV overlap with ultracentral OARs were reduced in SMART treatments compared with conventional SABR. The median SMART PTV was 10.1 cc, compared with 30.4 cc for the simulated SABR PTV, while the median PTV overlap with OARs was 0.85 cc for SMART (8.4% of the PTV) and 4.7 cc for conventional SABR.

In terms of treatment-related side effects for SMART, the rates of acute and late grade 1–2 toxicities were 54% and 18%, respectively, with no grade 3–5 toxicities observed. This demonstrates the technique’s increased safety compared with non-adaptive SABR treatments, which have exhibited high rates of severe toxicity, including treatment-related deaths, in ultracentral tumours.

Two-thirds of patients were alive at the median follow-up point of 28 months, and 93% were free from local progression at 12 months. The median progression-free survival was 5.8 months and median overall survival was 20 months.

Acknowledging the short follow-up time frame, the researchers note that additional late toxicities may occur. However, they are hopeful that SMART will be considered as a favourable treatment option for patients with ultracentral NSCLC lesions.

“Our analysis demonstrates that hypofractionated SMART with daily online adaptation for ultracentral NSCLC achieved comparable local control to conventional non-adaptive SABR, with a safer toxicity profile,” they write. “These findings support the consideration of SMART as a safer and effective treatment option for this challenging subgroup of thoracic tumours.”

SMART-based SABR radiotherapy remains an emerging cancer treatment that’s not available yet in many cancer treatment centres. Despite the high risk for patients with ultracentral tumours, SABR is the standard treatment for inoperable NSCLC.

The phase 1 clinical trial, Stereotactic radiation therapy for ultracentral NSCLC: a safety and efficacy trial (SUNSET), assessed the use of SBRT for ultracentral tumours in 30 patients with early-stage NSCLC treated at five Canadian cancer centres. In all cases, the PTVs touched or overlapped the proximal bronchial tree, the pulmonary artery, the pulmonary vein or the oesophagus. Led by Meredith Giuliani of the Princess Margaret Cancer Centre, the trial aimed to determine the maximum tolerated radiation dose associated with a less than 30% rate of grade 3–5 toxicity within two years of treatment.

All patients received 60 Gy in eight fractions. Dose was prescribed to deliver a PTV V100% of 95%, a PTV V90% of 99% and a maximum dose of no more than 120% of the prescription dose, with OAR constraints prioritized over PTV coverage. All patients had daily cone-beam CT imaging to verify tumour position before treatment.

At a median follow-up of 37 months, two patients (6.7%) experienced dose-limiting grade 3–5 toxicities – an adverse event rate within the prespecified acceptability criteria. The three-year overall survival was 72.5% and the three-year progression-free survival was 66.1%.

In a subsequent dosimetric analysis, the researchers report that they did not identify any relationship between OAR dose and toxicity, within the dose constraints used in the SUNSET trial. They note that 73% of patients could be treated without compromise of the PTV, and where compromise was needed, the mean PTV D95 (the minimum dose delivered to 95% of the PTV) remained high at 52.3 Gy.
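The D95 definition given above can be made concrete with a small sketch. It assumes equal-volume voxels and a hypothetical helper, `dose_at_volume`; it is not the trial's analysis code.

```python
import math


def dose_at_volume(doses_gy, volume_percent):
    """Minimum dose received by the hottest volume_percent of the voxels.

    Assumes every voxel represents the same volume. For D95, this is the
    dose of the voxel that closes out the best-covered 95% of the PTV.
    """
    ranked = sorted(doses_gy, reverse=True)
    count = max(1, math.ceil(len(ranked) * volume_percent / 100.0))
    return ranked[count - 1]
```

For a 100-voxel target in which 90 voxels receive 60 Gy, five receive 55 Gy and five receive 50 Gy, `dose_at_volume(doses, 95)` returns 55, because the coldest 5% of the volume is excluded.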

As expected, plans that overlapped with central OARs were associated with worse local control, but PTV undercoverage was not. “[These findings suggest] that the approach of reducing PTV coverage to meet OAR constraints does not appear to compromise local control, and that acceptable toxicity rates are achievable using 60 Gy in eight fractions,” the team writes. “In the future, use of MRI or online adaptive SBRT may allow for safer treatment delivery by limiting dose variation with anatomic changes.”

The post A SMART approach to treating lung cancers in challenging locations appeared first on Physics World.

Launch firms Space Pioneer and Galactic Energy are the latest of China’s commercial space companies to move toward IPOs, amid a surge of investment.

The post Chinese launch firms Space Pioneer and Galactic Energy move toward IPOs appeared first on SpaceNews.

Researchers in the United Arab Emirates have designed a new catheter that can deliver drugs to entire regions of the brain. Developed by Batoul Khlaifat and colleagues at New York University Abu Dhabi, the catheter’s helical structure and multiple outflow ports could make it both safer and more effective for treating a wide range of neurological disorders.

Modern treatments for brain-related conditions including Parkinson’s disease, epilepsy, and tumours often involve implanting microfluidic catheters that deliver controlled doses of drug-infused fluids to highly localized regions of the brain. Today, these implants are made from highly flexible materials that closely mimic the soft tissue of the brain. This makes them far less invasive than previous designs.

However, there is still much room for improvement, as Khlaifat explains. “Catheter design and function have long been limited by the neuroinflammatory response after implantation, as well as the unequal drug distribution across the catheter’s outlets,” she says.

A key challenge with this approach is that each of the brain’s distinct regions has a highly irregular shape, which makes it incredibly difficult to target with a single drug dose. Instead, doses must be delivered either through repeated insertions from a single port at the end of a catheter, or through single insertions across multiple co-implanted catheters. Either way, the approach is highly invasive, and runs the risk of further trauma to the brain.

In their study, Khlaifat’s team explored how many of these problems stem from existing catheter designs. They tend to be simple tubes with single input and output ports at either end. Using fluid dynamics simulations, they started by investigating how drug outflow would change when multiple output ports are positioned along the length of the catheter.

To ensure this outflow is delivered evenly, they carefully adjusted the diameter of each port to account for the change in fluid pressure along the catheter’s length – so that four evenly spaced ports could each deliver roughly one quarter of the total flow. Building on this innovation, the researchers then explored how the shape of the catheter itself could be adjusted to optimize delivery even further.
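The port-sizing idea can be sketched with a lumped hydraulic-resistance model. This is not the team's fluid dynamics simulation: it assumes laminar flow, a closed catheter tip, identical tube segments between ports, zero outlet pressure, and ports that behave as short Poiseuille channels with resistance 128·μ·L/(π·d⁴). All function names and parameters are hypothetical.

```python
import math


def port_resistance(diameter, port_length, viscosity):
    """Poiseuille resistance of a short cylindrical port (assumed model)."""
    return 128.0 * viscosity * port_length / (math.pi * diameter ** 4)


def port_diameters(total_flow, inlet_pressure, segment_resistance,
                   port_length, viscosity, n_ports=4):
    """Port diameters that give each port an equal share of the flow."""
    share = total_flow / n_ports
    carried = total_flow          # flow still inside the catheter
    pressure = inlet_pressure
    diameters = []
    for _ in range(n_ports):
        # Pressure drops along the segment feeding this port.
        pressure -= segment_resistance * carried
        if pressure <= 0:
            raise ValueError("inlet pressure too low for this geometry")
        needed = pressure / share  # resistance that passes exactly one share
        diameters.append(
            (128.0 * viscosity * port_length / (math.pi * needed)) ** 0.25
        )
        carried -= share
    return diameters


def flow_fractions(diameters, segment_resistance, port_length, viscosity):
    """Independent check: share of flow per port, solved from the tip back."""
    pressure = 1.0                # arbitrary; the network is linear
    downstream = 0.0
    flows = []
    for diameter in reversed(diameters):
        flow = pressure / port_resistance(diameter, port_length, viscosity)
        flows.append(flow)
        downstream += flow
        pressure += segment_resistance * downstream
    flows.reverse()
    total = sum(flows)
    return [flow / total for flow in flows]
```

In this model the pressure falls towards the tip, so the ports must widen with distance from the inlet to keep each one at roughly a quarter of the total flow.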

“We varied the catheter design from a straight catheter to a helix of the same small diameter, allowing for a larger area of drug distribution in the target implantation region with minimal invasiveness,” explains team member Khalil Ramadi. “This helical shape also allows us to resist buckling on insertion, which is a major problem for miniaturized straight catheters.”

Based on their simulations, the team fabricated a helical catheter they call Strategic Precision Infusion for Regional Administration of Liquid, or SPIRAL. In their first set of experiments, they tested their simulations in controlled lab conditions. They verified their prediction of even outflow rates across the catheter’s outlets.

“Our helical device was also tested in mouse models alongside its straight counterpart to study its neuroinflammatory response,” Khlaifat says. “There were no significant differences between the two designs.”

Having validated the safety of their approach, the researchers are now hopeful that SPIRAL could pave the way for new and improved methods for targeted drug delivery within the brain. With the ability to target entire regions of the brain with smaller, more controlled doses, this future generation of implanted catheters could ultimately prove to be both safer and more effective than existing designs.

“These catheters could be optimized for each patient through our computational framework to ensure only regions that require dosing are exposed to therapy, all through a single insertion point in the skull,” describes team member Mahmoud Elbeh. “This tailored approach could improve therapies for brain disorders such as epilepsy and glioblastomas.”

The research is described in the Journal of Neural Engineering.

The post Spiral catheter optimizes drug delivery to the brain appeared first on Physics World.

ESA is putting the final touches on a package of programs worth 22 billion euros for next month’s ministerial conference, despite U.S. budget uncertainty and removal of one mission.

The post ESA finalizing ministerial package appeared first on SpaceNews.

Iridium’s shares closed down more than 7% Oct. 23 after the satellite operator lowered its full-year service revenue outlook again, while withdrawing its $1 billion target for 2030 amid mounting competition from SpaceX.

The post Iridium pulls $1 billion 2030 service revenue goal amid SpaceX’s D2D push appeared first on SpaceNews.

SAN FRANCISCO – Indian startup SatLeo Labs is preparing to launch its first thermal-imaging payload early next year. By the end of 2026, the Ahmedabad-based startup intends to launch the first of 12 microsatellites to gather electro-optical and thermal infrared imagery. “Not only will we be getting the visible dataset of any particular object or […]

The post SatLeo prepares to launch first thermal-imaging payload appeared first on SpaceNews.

China launched the latest in a series of clandestine satellites Thursday, using the country’s most powerful rocket to send the spacecraft to geosynchronous transfer orbit.

The post China expands classified geostationary satellite series with Long March 5 launch appeared first on SpaceNews.

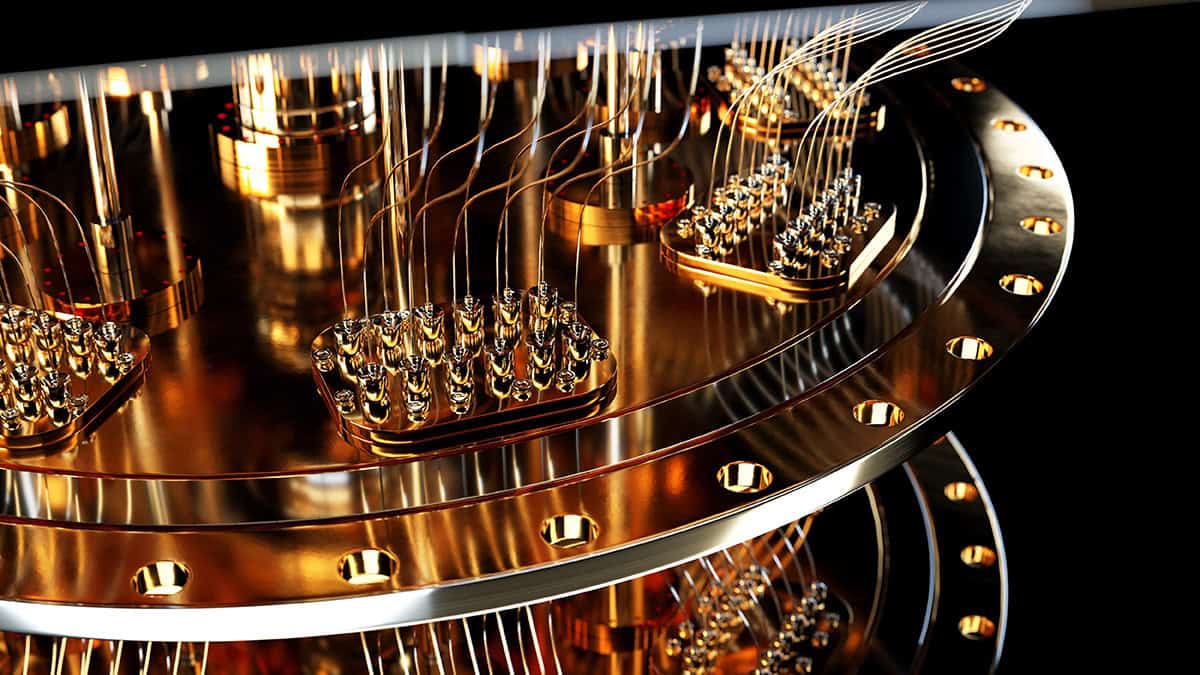

From quantum utility today to quantum advantage tomorrow: incumbent technology companies – among them Google, Amazon, IBM and Microsoft – and a wave of ambitious start-ups are on a mission to transform quantum computing from applied research endeavour to mainstream commercial opportunity. The end-game: quantum computers that can be deployed at-scale to perform computations significantly faster than classical machines while addressing scientific, industrial and commercial problems beyond the reach of today’s high-performance computing systems.

Meanwhile, as technology translation gathers pace across the quantum supply chain, government laboratories and academic scientists must maintain their focus on the “hard yards” of precompetitive research. That means prioritizing foundational quantum hardware and software technologies, underpinned by theoretical understanding, experimental systems, device design and fabrication – and pushing out along all these R&D pathways simultaneously.

Equally important is the requirement to understand and quantify the relative performance of quantum computers from different manufacturers as well as across the myriad platform technologies – among them superconducting circuits, trapped ions, neutral atoms as well as photonic and semiconductor processors. A case study in this regard is a broad-scope UK research collaboration that, for the past four years, has been reviewing, collecting and organizing a holistic taxonomy of metrics and benchmarks to evaluate the performance of quantum computers against their classical counterparts as well as the relative performance of competing quantum platforms.

Funded by the National Quantum Computing Centre (NQCC), which is part of the UK National Quantum Technologies Programme (NQTP), and led by scientists at the National Physical Laboratory (NPL), the UK’s National Measurement Institute, the cross-disciplinary consortium has taken on an endeavour that is as sprawling as it is complex. The challenge lies in the diversity of quantum hardware platforms in the mix; also the emergence of two different approaches to quantum computing – one being a gate-based framework for universal quantum computation, the other an analogue approach tailored to outperforming classical computers on specific tasks.

“Given the ambition of this undertaking, we tapped into a deep pool of specialist domain knowledge and expertise provided by university colleagues at Edinburgh, Durham, Warwick and several other centres-of-excellence in quantum,” explains Ivan Rungger, a principal scientist at NPL, professor in computer science at Royal Holloway, University of London, and lead scientist on the quantum benchmarking project. That core group consulted widely within the research community and with quantum technology companies across the nascent supply chain. “The resulting study,” adds Rungger, “positions transparent and objective benchmarking as a critical enabler for trust, comparability and commercial adoption of quantum technologies, aligning closely with NPL’s mission in quantum metrology and standards.”

For context, a number of performance metrics used to benchmark classical computers can also be applied directly to quantum computers, such as the speed of operations, the number of processing units and the probability of errors occurring in the computation. That only goes so far, though, with all manner of dedicated metrics emerging in the past decade to benchmark the performance of quantum computers – ranging from their individual hardware components to entire applications.

Complexity reigns, it seems, and navigating the extensive literature can prove overwhelming, while the levels of maturity for different metrics vary significantly. Objective comparisons aren’t straightforward either – not least because variations of the same metric are commonly deployed; also the data disclosed together with a reported metric value is often not sufficient to reproduce the results.

“Many of the approaches provide similar overall qualitative performance values,” Rungger notes, “but the divergence in the technical implementation makes quantitative comparisons difficult and, by extension, slows progress of the field towards quantum advantage.”

The task then is to rationalize the metrics used to evaluate the performance for a given quantum hardware platform to a minimal yet representative set agreed across manufacturers, algorithm developers and end-users. These benchmarks also need to follow some agreed common approaches to fairly and objectively evaluate quantum computers from different equipment vendors.

With these objectives in mind, Rungger and colleagues conducted a deep-dive review that has yielded a comprehensive collection of metrics and benchmarks to allow holistic comparisons of quantum computers, assessing the quality of hardware components all the way to system-level performance and application-level metrics.

Drill down further and there’s a consistent format for each metric that includes its definition, a description of the methodology, the main assumptions and limitations, and a linked open-source software package implementing the methodology. The software transparently demonstrates the methodology and can also be used in practical, reproducible evaluations of all metrics.
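One way to picture that per-metric format is as a simple record. The sketch below is illustrative only: the `MetricEntry` class, its field names and the `missing_fields` helper are assumptions for this example and are not taken from the consortium's repository.

```python
from dataclasses import dataclass, field, fields


@dataclass
class MetricEntry:
    """Hypothetical record mirroring the format described in the text."""
    name: str
    definition: str
    methodology: str
    assumptions: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)
    software_url: str = ""   # link to the open-source implementation


def missing_fields(entry):
    """Names of fields left empty, in declaration order."""
    return [f.name for f in fields(entry) if not getattr(entry, f.name)]
```

A check of this kind would flag any entry that lacks stated assumptions, limitations or a linked implementation before it is added to a shared collection.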

“As research on metrics and benchmarks progresses, our collection of metrics and the associated software for performance evaluation are expected to evolve,” says Rungger. “Ultimately, the repository we have put together will provide a ‘living’ online resource, updated at regular intervals to account for community-driven developments in the field.”

Innovation being what it is, those developments are well under way. For starters, the importance of objective and relevant performance benchmarks for quantum computers has led several international standards bodies to initiate work on specific areas that are ready for standardization – work that, in turn, will give manufacturers, end-users and investors an informed evaluation of the performance of a range of quantum computing components, subsystems and full-stack platforms.

What’s evident is that the UK’s voice on metrics and benchmarking is already informing the collective conversation around standards development. “The quantum computing community and international standardization bodies are adopting a number of concepts from our approach to benchmarking standards,” notes Deep Lall, a quantum scientist in Rungger’s team at NPL and lead author of the study. “I was invited to present our work to a number of international standardization meetings and scientific workshops, opening up widespread international engagement with our research and discussions with colleagues across the benchmarking community.”

He continues: “We want the UK effort on benchmarking and metrics to shape the broader international effort. The hope is that the collection of metrics we have pulled together, along with the associated open-source software provided to evaluate them, will guide the development of standardized benchmarks for quantum computers and speed up the progress of the field towards practical quantum advantage.”

That’s a view echoed – and amplified – by Cyrus Larijani, NPL’s head of quantum programme. “As we move into the next phase of NPL’s quantum strategy, the importance of evidence-based decision making becomes ever-more critical,” he concludes. “By grounding our strategic choices in robust measurement science and real-world data, we ensure that our innovations not only push the boundaries of quantum technology but also deliver meaningful impact across industry and society.”

Deep Lall et al. 2025 A review and collection of metrics and benchmarks for quantum computers: definitions, methodologies and software https://arxiv.org/abs/2502.06717

Quantum computing technology has reached the stage where a number of methods for performance characterization are backed by a large body of real-world implementation and use, as well as by theoretical proofs. These mature benchmarking methods will benefit from commonly agreed-upon approaches that are the only way to fairly, unambiguously and objectively benchmark quantum computers from different manufacturers.

“Performance benchmarks are a fundamental enabler of technology innovation in quantum computing,” explains Konstantinos Georgopoulos, who heads up the NQCC’s quantum applications team and is responsible for the centre’s liaison with the NPL benchmarking consortium. “How do we understand performance? How do we compare capabilities? And, of course, what are the metrics that help us to do that? These are the leading questions we addressed through the course of this study.”

If the importance of benchmarking is a given, so too is collaboration and the need to bring research and industry stakeholders together from across the quantum ecosystem. “I think that’s what we achieved here,” says Georgopoulos. “The long list of institutions and experts who contributed their perspectives on quantum computing was crucial to the success of this project. What we’ve ended up with are better metrics, better benchmarks, and a better collective understanding to push forward with technology translation that aligns with end-user requirements across diverse industry settings.”

End note: NPL retains copyright on this article.

The post Performance metrics and benchmarks point the way to practical quantum advantage appeared first on Physics World.

This episode of the Physics World Weekly podcast explores how quantum computing and artificial intelligence can be combined to help physicists search for rare interactions in data from an upgraded Large Hadron Collider.

My guest is Javier Toledo-Marín, and we spoke at the Perimeter Institute in Waterloo, Canada. As well as having an appointment at Perimeter, Toledo-Marín is also associated with the TRIUMF accelerator centre in Vancouver.

Toledo-Marín and colleagues have recently published a paper called “Conditioned quantum-assisted deep generative surrogate for particle–calorimeter interactions”.

This podcast is supported by Delft Circuits.

As gate-based quantum computing continues to scale, Delft Circuits provides the i/o solutions that make it possible.

The post Quantum computing and AI join forces for particle physics appeared first on Physics World.

In this episode of Space Minds, host David Ariosto speaks with Terry Hart, former NASA astronaut and mission specialist on the Space Shuttle Challenger. Hart reflects on the triumphs of early shuttle missions, the lessons of the Challenger tragedy, and how those experiences shape today’s commercial space era led by companies like SpaceX and Blue Origin.

The post The astronaut who filmed the Dream is Alive appeared first on SpaceNews.

The first space race was about getting to the moon. Today’s race to the moon and Mars is about staying there.

The post The next space race will be won at night appeared first on SpaceNews.