
DNA captured from the air could track wildlife, invasive species—and humans

Voyager launches IPO with $1.6 billion valuation target

Voyager Technologies launched its initial public offering of shares June 2, targeting a valuation of $1.6 billion for the six-year-old space and defense company.

The post Voyager launches IPO with $1.6 billion valuation target appeared first on SpaceNews.

Rocket Lab launches BlackSky satellite

A Rocket Lab Electron successfully placed a BlackSky high-resolution imaging satellite into orbit on a June 2 launch.

The post Rocket Lab launches BlackSky satellite appeared first on SpaceNews.

Bury it, don’t burn it: turning biomass waste into a carbon solution

If a tree fell in a forest almost 4000 years ago, did it make a sound? Well, in the case of an Eastern red cedar in what is now Quebec, Canada, it’s certainly still making noise today.

That’s because in 2013, a team of scientists were digging a trench when they came across the 3775-year-old log. Despite being buried for nearly four millennia, the wood wasn’t rotten and useless. In fact, recent analysis unearthed an entirely different story.

The team, led by atmospheric scientist Ning Zeng of the University of Maryland in the US, found that the wood had only lost 5% of its carbon compared with a freshly cut Eastern red cedar log. “The wood is nice and solid – you could probably make a piece of furniture out of it,” says Zeng. The log had been preserved in such remarkable shape because the clay soil it was buried in was highly impermeable. That limited the amount of oxygen and water reaching the wood, suppressing the activity of micro-organisms that would otherwise have made it decompose.

This ancient log is a compelling example of “biomass burial”. When plants decompose or are burnt, they release the carbon dioxide (CO2) they had absorbed from the atmosphere. One idea to prevent this CO2 being released back into the atmosphere is to bury the waste biomass under conditions that prevent or slow decomposition, thereby trapping the carbon underground for centuries.

In fact, Zeng and his colleagues discovered the cedar log while they were digging a huge trench to bury 35 tonnes of wood to test this very idea. Nine years later, when they dug up some samples, they found that the wood had barely decomposed. Further analysis suggested that if the logs had been left buried for a century, they would still hold 97% of the carbon that was present when they were felled.
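
To put the ancient log's 5% carbon loss over 3775 years in perspective, the sketch below back-calculates an average decay rate. It assumes, purely for illustration, that the carbon decays at one constant first-order rate, C(t) = C0·exp(−kt). Real burial decay need not follow this model, and the function names are hypothetical.

```python
import math


def decay_constant(remaining: float, years: float) -> float:
    """First-order decay constant k (per year) implied by the fraction left after `years`."""
    return -math.log(remaining) / years


def fraction_remaining(k: float, years: float) -> float:
    """Fraction of the initial carbon left after `years` if C(t) = C0 * exp(-k * t)."""
    return math.exp(-k * years)


# Figures from the article: about 5% of the carbon lost over about 3775 years.
k = decay_constant(0.95, 3775)
half_life = math.log(2) / k

print(f"average decay constant: {k:.2e} per year")  # 1.36e-05 per year
print(f"implied half-life: {half_life:,.0f} years")  # roughly 51,000 years
print(f"retained after 100 years: {fraction_remaining(k, 100):.2%}")
```

Under this constant-rate assumption the log would keep well over 99% of its carbon per century, more than the 97% projected for the newly buried wood. That suggests either that decay is faster early on or that conditions differ between the two burials, so a single rate is only a rough summary.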

Digging holes

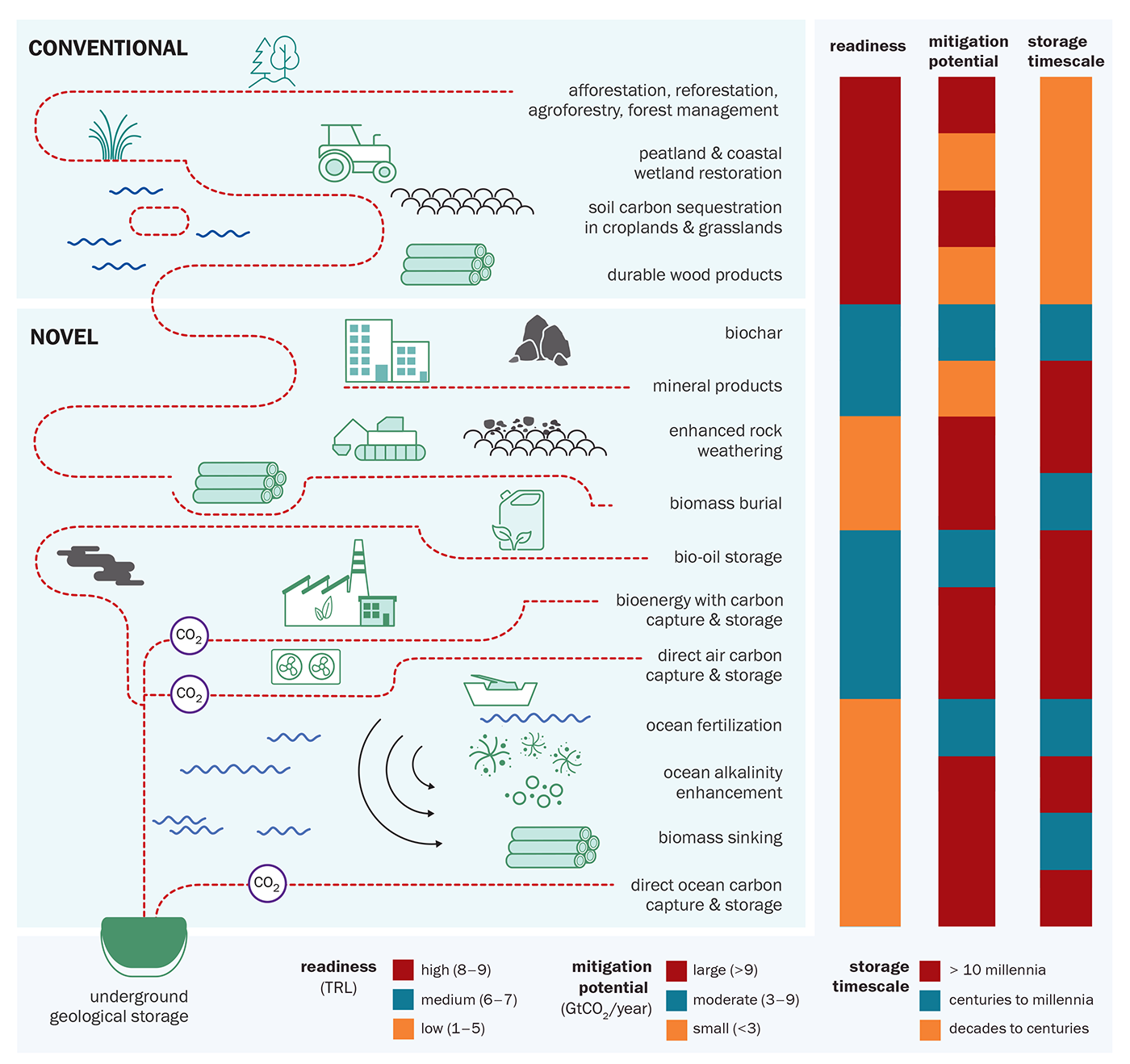

To combat climate change, there is often much discussion about how to remove carbon from the atmosphere. As well as conventional techniques like restoring peatland and replanting forests, there are a variety of more technical methods being developed (figure 1). These include direct air capture (DAC) and ocean alkalinity enhancement, which involves tweaking the chemistry of oceans so that they absorb more CO2. But some scientists – like Sinéad Crotty, a managing director at the Carbon Containment Lab in Connecticut, US – think that biomass burial could be a simpler and cheaper way to sequester carbon.

Figure 1: Ready or not

There are multiple methods being developed for capturing, converting and storing carbon dioxide (CO2), each at different stages of readiness for deployment, with varying removal capabilities and storage durability timescales.

This figure – adapted from the State of Carbon Dioxide Removal report – shows methods that are already deployed or analysed in the research literature. They are categorized as either “conventional” (processes that are widely established and deployed at scale) or “novel” (those at a lower level of readiness and therefore only used on smaller scales). The figure also rates their Technology Readiness Level (TRL), maximum mitigation potential (how many gigatonnes (10⁹ tonnes) of CO2 can be sequestered per year) and storage timescale.

The report defines each technique as follows:

- Afforestation – Conversion to forest of land that was previously not forest.

- Reforestation – Conversion to forest of land that was previously deforested.

- Agroforestry – Growing trees on agricultural land while maintaining agricultural production.

- Forest management – Stewardship and use of existing forests. To count as carbon dioxide removal (CDR), forest management practices must enhance the long-term average carbon stock in the forest system.

- Peatland and coastal wetland restoration – Assisted recovery of inland ecosystems that are permanently or seasonally flooded or saturated by water (such as peatlands) and of coastal ecosystems (such as tidal marshes, mangroves and seagrass meadows). To count as CDR, this recovery must lead to a durable increase in the carbon content of these systems.

- Durable wood products – Wood products which meet a given threshold of durability, typically used in construction. These can include sawn wood, wood panels and composite beams, but exclude less durable products such as paper.

- Biochar – Relatively stable, carbon-rich material produced by heating biomass in an oxygen-limited environment. Assumed to be applied as a soil amendment unless otherwise stated.

- Mineral products – Production of solid carbonate materials for use in products such as aggregates, asphalt, cement and concrete, using CO2 captured from the atmosphere.

- Enhanced rock weathering – Increasing the natural rate of removal of CO2 from the atmosphere by applying crushed rocks, rich in calcium and magnesium, to soil or beaches.

- Biomass burial – Burial of biomass in land sites such as soils or exhausted mines. Excludes storage in the typical geological formations associated with carbon capture and storage (CCS).

- Bio-oil storage – Oil made by biomass conversion and placed into geological storage.

- Bioenergy with carbon capture and storage – Process by which biogenic CO2 is captured from a bioenergy facility, with subsequent geological storage.

- Direct air carbon capture and storage – Chemical process by which CO2 is captured from the ambient air, with subsequent geological storage.

- Ocean fertilization – Enhancement of nutrient supply to the near-surface ocean with the aim of stimulating biological production and thereby sequestering additional CO2 from the atmosphere. Methods include direct addition of micro-nutrients or macro-nutrients. To count as CDR, the biomass must reach the deep ocean where the carbon has the potential to be sequestered durably.

- Ocean alkalinity enhancement – Spreading of alkaline materials on the ocean surface to increase the alkalinity of the water and thus increase ocean CO2 uptake.

- Biomass sinking – Sinking of terrestrial (e.g. straw) or marine (e.g. macroalgae) biomass in the marine environment. To count as CDR, the biomass must reach the deep ocean where the carbon has the potential to be sequestered durably.

- Direct ocean carbon capture and storage – Chemical process by which CO2 is captured directly from seawater, with subsequent geological storage. To count as CDR, this capture must lead to increased ocean CO2 uptake.

The 3775-year-old log shows that carbon can be stored for centuries underground, but the wood has to be buried under specific conditions. “People tend to think, ‘Who doesn’t know how to dig a hole and bury some wood?’” Zeng says. “But think about how many wooden coffins were buried in human history. How many of them survived? For a timescale of hundreds or thousands of years, we need the right conditions.”

The key for scientists seeking to test biomass burial is to create dry, low-oxygen environments, similar to those in the Quebec clay soil. Last year, for example, Crotty and her colleagues dug more than 100 pits at a site in Colorado, in the US, filled them with woody material and then covered them up again. In five years’ time they plan to dig the biomass back out of the pits to see how much it has decomposed.

The pits vary in depth and have been refilled and packed in different ways, to test how their construction affects carbon storage. The researchers will also be calculating the carbon emissions of processes such as transporting and burying the biomass – including the amount of carbon released from the soil when the pits are dug. “What we are trying to do here is build an understanding of what works and what doesn’t, but also how we can measure, report and verify that what we are doing is truly carbon negative,” Crotty says.
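
The accounting Crotty describes amounts to subtracting every emission attributable to the project from the carbon locked away. Here is a minimal sketch, assuming all quantities are already expressed in tonnes of CO2 equivalent. The class, field names and numbers are hypothetical, not the Carbon Containment Lab's methodology.

```python
from dataclasses import dataclass


@dataclass
class BurialBatch:
    """Hypothetical life-cycle inventory for one burial batch, in tonnes CO2e."""
    co2_stored: float                     # CO2 equivalent of the carbon in the buried biomass
    transport_emissions: float            # e.g. trucking residues to the site
    burial_emissions: float               # e.g. fuel burned by excavators
    soil_emissions: float                 # soil carbon released when the pit is dug
    decomposition_emissions: float = 0.0  # any leakage measured after burial

    def total_emissions(self) -> float:
        return (self.transport_emissions + self.burial_emissions
                + self.soil_emissions + self.decomposition_emissions)

    def net_removal(self) -> float:
        """Stored CO2 minus all emissions attributed to the project."""
        return self.co2_stored - self.total_emissions()

    def is_carbon_negative(self) -> bool:
        return self.net_removal() > 0


batch = BurialBatch(co2_stored=100.0, transport_emissions=4.0,
                    burial_emissions=2.5, soil_emissions=6.0,
                    decomposition_emissions=1.5)
print(batch.net_removal())  # 86.0
```

Keeping each emission source as a separate field makes it easy to see which step (transport, digging, soil disturbance or later leakage) erodes the net removal most.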

Over the next five years the team will continuously measure surface CO2 and methane fluxes from several of the pits, while every pit will have its CO2 and methane emissions measured monthly. There are also moisture sensors and oxygen probes buried in the pits, plus a full weather station on the site.
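
Periodic flux readings like these must be turned into cumulative totals before they can enter a carbon balance. One common approach, assumed here rather than taken from the study, is trapezoidal integration of the sampled fluxes, with methane converted to CO2 equivalent using a 100-year global warming potential. The value of about 27 used below is the IPCC Sixth Assessment Report figure for non-fossil methane; treat it as an assumption. The readings are invented.

```python
# Assumed 100-year global warming potential of non-fossil methane (IPCC AR6).
GWP100_CH4 = 27.0


def cumulative_emission(days, flux_per_day):
    """Trapezoidal integral of a flux (e.g. kg per day) sampled on the given days."""
    total = 0.0
    for i in range(1, len(days)):
        width = days[i] - days[i - 1]
        total += 0.5 * (flux_per_day[i - 1] + flux_per_day[i]) * width
    return total


def co2_equivalent(co2_kg, ch4_kg, gwp_ch4=GWP100_CH4):
    """Combine CO2 and methane emissions into kg CO2e."""
    return co2_kg + gwp_ch4 * ch4_kg


# Hypothetical monthly readings from one pit (kg per day).
days = [0, 30, 61, 91]
co2_flux = [0.8, 1.0, 0.9, 0.7]
ch4_flux = [0.00, 0.01, 0.02, 0.01]

total_co2e = co2_equivalent(cumulative_emission(days, co2_flux),
                            cumulative_emission(days, ch4_flux))
print(f"{total_co2e:.1f} kg CO2e over {days[-1]} days")
```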

Crotty says that all this data will allow them to assess how different depths, packing styles and the local environment alter conditions in the chambers. When the samples are excavated in five years, the researchers will also explore what types of decomposition the burial did and did not suppress. This will include tests to identify different fungal and bacterial signatures, to uncover the micro-organisms involved in any decay.

The big questions

Experiments like Crotty’s will help answer one of the key concerns about terrestrial storage of biomass: how long can the carbon be stored?

In 2023 a team led by Lawrence Livermore National Laboratory (LLNL) did a large-scale analysis of the potential for CO2 removal in the US. The resulting Road to Removal report outlined how CO2 removal could be used to help the US achieve its net zero goals (these have since been revoked by the Trump administration), focusing on techniques like direct air capture (DAC), increasing carbon uptake in forests and agricultural lands, and converting waste biomass into fuels and CO2.

The report did not, however, look at biomass burial. One of the report authors, Sarah Baker – an expert in decarbonization and CO2 removal at LLNL – told Physics World that this was because of a lack of evidence around the durability of the carbon stored. The report’s minimum requirement for carbon storage was at least 100 years, and there were not enough data available to show how much carbon stored in biomass would remain after that period, Baker explains.

The US Department of Energy is also working to address this question. It has funded a set of projects, which Baker is involved with, to bridge some of the knowledge gaps on carbon-removal pathways. This includes one led by the National Renewable Energy Lab, measuring how long carbon in buried biomass remains stored under different conditions.

Bury the problem

Crotty’s Colorado experiment is also addressing another question: are all forms of biomass equally appropriate for burial? To test this, Crotty’s team filled the pits with a range of woody materials, including different types of wood and wood chip as well as compressed wood, and “slash” – small branches, leaves, bark and other debris created by logging and other forestry work.

Indeed, Crotty and her colleagues see biomass storage as crucial for those managing our forests. The western US states, in particular, have seen an increased risk of wildfires through a mix of climate change and aggressive fire-suppression policies, which prevent smaller fires from burning and have thereby left forests overgrown. “This has led to a build-up of fuels across the landscape,” Crotty says. “So, in a forest that would typically have a high number of low-severity fires, it’s changed the fire regime into a very high-intensity one.”

These concerns led the US Forest Service to announce a 10-year wildfire crisis plan in 2022 that seeks to reduce the risk of fires by thinning and clearing 50 million acres of forest land, in addition to 20 million acres already slated for treatment. But this creates a new problem.

“There are currently very few markets for the types of residues that need to come out of these forests – it is usually small-diameter, low-value timber,” explains Crotty. “They typically can’t pay their way out of the forests, so business as usual in many areas is to simply put them in a pile and burn them.”

A recent study Crotty co-authored suggests that every year “pile burning” in US National Forests emits greenhouse gases equivalent to almost two million tonnes of CO2, and more than 11 million tonnes of fine particulate matter – air pollution that is linked to a range of health problems. Conservative estimates by the Carbon Containment Lab indicate that the material scheduled for clearance under the Forest Service’s 10-year crisis plan will contain around two gigatonnes (Gt) of CO2 equivalents. This is around 5% of current annual global CO2 emissions.

There are also cost implications. Crotty’s recent analysis found that piling and burning forest residue costs around $700 to $1300 per acre. By adding value to the carbon in the forest residues and keeping it out of the atmosphere, biomass storage may offer a solution to these issues, Crotty says.

As an incentive to remove carbon from the atmosphere, trading mechanisms exist whereby individuals, companies and governments can buy and sell carbon emissions. In essence, carbon has a price attached to it, meaning that someone who has emitted too much, say, can pay someone else to capture and store the equivalent amount of emissions, with an often-touted figure being $100 per tonne of CO2 stored. For a long time, this has been seen as the price at which carbon capture becomes affordable, enabling scale-up to the volumes needed to tackle climate change.

“There is only so much capital that we will ever deploy towards [carbon removal] and thus the cheaper the solution, the more credits we’ll be able to generate, the more carbon we will be able to remove from the atmosphere,” explains Justin Freiberg, a managing director of the Carbon Containment Lab. “$100 is relatively arbitrary, but it is important to have a target and aim low on pricing for high quality credits.”

DAC has not managed to reach this magical price point. Indeed, the Swiss firm Climeworks – which is one of the biggest DAC companies – has stated that its costs might be around $300 per tonne by 2030.

A tomb in a mine

Another carbon-removal company, however, claims it has hit this benchmark using biomass burial. “We’re selling our first credits at $100 per tonne,” says Hannah Murnen, chief technology officer at Graphyte – a US firm backed by Bill Gates.

Graphyte is confident that there is significant potential in biomass burial. Based in Pine Bluff, Arkansas, the firm dries and compresses waste biomass into blocks before storage. “We dry it to below a level at which life can exist,” says Murnen, which effectively halts decomposition.

The company claims that it will soon be storing 50,000 tonnes of CO2 per year and is aiming for five million tonnes per year by 2030. Murnen acknowledges that these are “really significant figures”, particularly compared with what has been achieved in carbon capture so far. Nevertheless, she adds, if you look at the targets around carbon capture “this is the type of scale we need to get to”.

The need for carbon capture

The Intergovernmental Panel on Climate Change says that carbon capture is essential to limit global warming to 1.5 °C above pre-industrial levels.

To stay within the Paris Agreement’s climate targets, the 2024 State of Carbon Dioxide Removal report estimated that 7–9 gigatonnes (Gt) of CO2 removal will be needed annually by 2050. According to the report – which was put together by multiple institutions, led by the University of Oxford – currently two billion tonnes of CO2 are being removed per year, mostly through “conventional” methods like tree planting and wetland restoration. “Novel” methods – such as direct air capture (DAC), bioenergy with carbon capture, and ocean alkalinity enhancement – contribute 1.3 million tonnes of CO₂ removal per year, less than 0.1% of the total.
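
A quick check of the report's proportions, using only the two figures quoted above:

```python
total_removal_t = 2e9    # about 2 billion tonnes of CO2 removed per year, all methods
novel_removal_t = 1.3e6  # about 1.3 million tonnes per year from "novel" methods

share = novel_removal_t / total_removal_t
print(f"novel methods' share: {share:.3%}")  # novel methods' share: 0.065%
```

At roughly 0.065%, this is consistent with the report's "less than 0.1%".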

Graphyte is currently working with sawmill residue and rice hulls, but in the future Murnen says it plans to accept all sorts of biomass waste. “One of the great things about biomass for the purpose of carbon removal is that, because we are not doing any sort of chemical transformation on the biomass, we’re very flexible to the type of biomass,” Murnen adds.

And there appears to be plenty available. Estimates by researchers in the UK and India (NPJ Climate and Atmospheric Science 2 35) suggest that every year around 140 Gt of biomass waste is generated globally from forestry and agriculture. Around two-thirds of the agricultural residues are from cereals, like wheat, rice, barley and oats, while sugarcane stems and leaves are the second largest contributors. The rest is made up of things like leaves, roots, peels and shells from other crops. Like forest residues, much of this waste ends up being burnt or left to rot, releasing its carbon.

Currently, Graphyte has one storage site about 30 km from Pine Bluff, where its compressed biomass blocks are stored underground, enclosed in an impermeable layer that prevents water ingress. “We took what used to be an old gravel mine – so basically a big hole in the ground – and we’ve created a lined storage tomb where we are placing the biomass and then sealing it closed,” says Murnen.

Once sealed, Graphyte monitors the CO2 and methane concentrations in the headspace of the vaults, to check for any decomposition of the biomass. The company also analyses biomass as it enters the facility, to track how much carbon it is storing. Wood residues, like sawmill waste, are generally around 50% carbon, says Murnen, but rice hulls are closer to 35% carbon.
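
Tracking stored carbon from feedstock composition comes down to simple stoichiometry. Each tonne of carbon corresponds to about 3.66 tonnes of CO2, the ratio of their molar masses (44.01/12.011). The sketch below applies the carbon fractions Murnen quotes. It assumes those fractions refer to dry mass, and the function and dictionary names are hypothetical rather than Graphyte's actual method.

```python
# Tonnes of CO2 per tonne of carbon: ratio of molar masses (g/mol).
CO2_PER_TONNE_C = 44.01 / 12.011

# Approximate carbon fractions quoted by Murnen (assumed here to be on a dry-mass basis).
CARBON_FRACTION = {
    "sawmill_residue": 0.50,
    "rice_hulls": 0.35,
}


def co2_stored(dry_tonnes: float, feedstock: str) -> float:
    """Tonnes of CO2 whose carbon is locked in `dry_tonnes` of the given feedstock."""
    return dry_tonnes * CARBON_FRACTION[feedstock] * CO2_PER_TONNE_C


for name in CARBON_FRACTION:
    print(f"1 t of {name}: {co2_stored(1.0, name):.2f} t CO2")
# 1 t of sawmill_residue: 1.83 t CO2
# 1 t of rice_hulls: 1.28 t CO2
```

On these figures, a tonne of rice hulls locks away about 30% less CO2 than a tonne of sawmill residue, which is why the incoming feedstock has to be analysed rather than counted by mass alone.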

Graphyte is confident that its storage is physically robust and could avoid any re-emission for what Murnen calls “a very long period of time”. However, it is also exploring how to prevent accidental disturbance of the biomass in the future – possibly long after the company ceases to exist. One option is to add a conservation easement to the site, a well-established US legal mechanism for adding long-term protection to land.

“We feel pretty strongly that the way we are approaching [carbon removal] is one of the most scalable ways,” Murnen says. “In as far as impediments or barriers to scale, we have a much easier permitting pathway, we don’t need pipelines, we are pretty flexible on the type of land that we can use for our storage sites, and we have a huge variety of feedstocks that we can take into the process.”

A simple solution

Back at LLNL, Baker says that although she hasn’t “run the numbers”, and there are a lot of caveats, she suspects that biomass burial is “true carbon removal because it is so simple”.

Once associated upstream and downstream emissions are taken into account, many techniques that people call carbon removal are probably not, she says, because they emit more fossil CO2 than they store.

Biomass burial is also cheap. As the Road to Removal analysis found, “thermal chemical” techniques, like pyrolysis, have great potential for removing and storing carbon while converting biomass into hydrogen and sustainable aviation fuel. But they require huge investment, with larger facilities potentially costing hundreds of millions of dollars. Biomass burial could even act as temporary storage until facilities are ready to convert the carbon into sustainable fuels. “Buy ourselves time and then use it later,” says Baker.

Either way, biomass burial has great potential for the future of carbon storage, and therefore our environment. “The sooner we can start doing these things the greater the climate impact,” Baker says.

We just need to know that the storage is durable – and if that 3775-year-old log is any indication, there’s the potential to store biomass for hundreds, maybe thousands of years.

The post Bury it, don’t burn it: turning biomass waste into a carbon solution appeared first on Physics World.

Astronomers Have Detected a Galaxy Millions of Years Older Than Any Previously Observed

Wireless e-tattoos help manage mental workload

Managing one’s mental workload is a tricky balancing act that can affect cognitive performance and decision-making abilities. Too little engagement with an ongoing task can lead to boredom and mistakes; too much could cause a person to become overwhelmed.

For those performing safety-critical tasks, such as air traffic controllers or truck drivers for example, monitoring how hard their brain is working is even more important – lapses in focus could have serious consequences. But how can a person’s mental workload be assessed? A team at the University of Texas at Austin proposes the use of temporary face tattoos that can track when a person’s brain is working too hard.

“Technology is developing faster than human evolution. Our brain capacity cannot keep up and can easily get overloaded,” says lead author Nanshu Lu in a press statement. “There is an optimal mental workload for optimal performance, which differs from person to person.”

The traditional approach for monitoring mental workload is electroencephalography (EEG), which analyses the brain’s electrical activity. But EEG devices are wired, bulky and uncomfortable, making them impractical for real-world situations. Measurements of eye movements using electrooculography (EOG) are another option for assessing mental workload.

Lu and colleagues have developed an ultrathin wireless e-tattoo that records high-fidelity EEG and EOG signals from the forehead. The e-tattoo combines a disposable sticker-like electrode layer and a reusable battery-powered flexible printed circuit (FPC) for data acquisition and wireless transmission.

The serpentine-shaped electrodes and interconnects are made from low-cost, conductive graphite-deposited polyurethane, coated with an adhesive polymer composite to reduce contact impedance and improve skin attachment. The e-tattoo stretches and conforms to the skin, providing reliable signal acquisition, even during dynamic activities such as walking and running.

To assess the e-tattoo’s ability to record basic neural activities, the team used it to measure alpha brainwaves as a volunteer opened and closed their eyes. The e-tattoo captured neural spectra equivalent to those recorded by a commercial gel-electrode-based EEG system, with comparable signal fidelity.

The researchers next tested the e-tattoo on six participants while they performed a visuospatial memory task that gradually increased in difficulty. They analysed the signals collected by the e-tattoo during the tasks, extracting EEG band powers for delta, theta, alpha, beta and gamma brainwaves, plus various EOG features.
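
Band-power extraction of this kind can be sketched with a plain discrete Fourier transform. The band edges below are conventional values assumed for illustration, since the study's exact choices are not given here. A real pipeline would typically add windowing, Welch averaging and artefact rejection; this is a minimal, dependency-free version run on a synthetic signal.

```python
import cmath
import math

# Conventional EEG band edges in Hz (assumed; not taken from the study).
BANDS = {
    "delta": (1.0, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 45.0),
}


def power_spectrum(signal, fs):
    """One-sided periodogram via a naive DFT; returns (frequencies, power) lists."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def band_powers(signal, fs):
    """Sum the (unnormalized) spectral power falling inside each band [low, high)."""
    freqs, power = power_spectrum(signal, fs)
    return {
        name: sum(p for f, p in zip(freqs, power) if low <= f < high)
        for name, (low, high) in BANDS.items()
    }


fs = 128                              # sampling rate in Hz (hypothetical)
t = [i / fs for i in range(2 * fs)]   # two seconds of samples
# Synthetic "EEG": strong 10 Hz alpha rhythm plus a weaker 6 Hz theta component.
sig = [math.sin(2 * math.pi * 10 * s) + 0.2 * math.sin(2 * math.pi * 6 * s) for s in t]
powers = band_powers(sig, fs)
print(max(powers, key=powers.get))  # alpha
```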

As the task got more difficult, the participants showed higher activity in the theta and delta bands, a feature associated with increased cognitive demand. Meanwhile, activity in the alpha and beta bands decreased, indicating mental fatigue.

The researchers built a machine learning model to predict the level of mental workload experienced during the tasks, training it on forehead EEG and EOG features recorded by the e-tattoo. The model could reliably estimate mental workload in each of the six subjects, demonstrating the feasibility of real-time cognitive state decoding.
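
The article does not say which kind of model the team trained. As a stand-in, a nearest-centroid classifier shows the basic idea of mapping band-power features to workload levels. The features (relative theta and alpha power, following the trends described above) and the training values are hypothetical.

```python
import math
from collections import defaultdict


def fit_centroids(features, labels):
    """Average the feature vectors belonging to each workload level."""
    sums = {}
    counts = defaultdict(int)
    for x, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(x)
        sums[label] = [s + v for s, v in zip(sums[label], x)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, x):
    """Assign x to the level whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], x))


# Hypothetical training data: [relative theta power, relative alpha power]
X = [[0.20, 0.40], [0.22, 0.38], [0.35, 0.25], [0.33, 0.27]]
levels = ["low", "low", "high", "high"]

centroids = fit_centroids(X, levels)
print(predict(centroids, [0.34, 0.26]))  # high
```

A practical system would also need per-person calibration and validation on held-out data.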

“Our key innovation lies in the successful decoding of mental workload using a wireless, low-power, low-noise and ultrathin EEG/EOG e-tattoo device,” the researchers write. “It addresses the unique challenges of monitoring forehead EEG and EOG, where wearability, non-obstructiveness and signal stability are critical to assessing mental workload in the real world.”

They suggest that future applications could include real-time cognitive load monitoring in pilots, operators and healthcare professionals. “We’ve long monitored workers’ physical health, tracking injuries and muscle strain,” says co-author Luis Sentis. “Now we have the ability to monitor mental strain, which hasn’t been tracked. This could fundamentally change how organizations ensure the overall well-being of their workforce.”

The e-tattoo is described in Device.

The post Wireless e-tattoos help manage mental workload appeared first on Physics World.

U.S. Space Force awards BAE Systems $1.2 billion contract for missile-tracking satellites

Space Systems Command expands medium Earth orbit constellation to counter hypersonic threats

The post U.S. Space Force awards BAE Systems $1.2 billion contract for missile-tracking satellites appeared first on SpaceNews.

National Academies, staggering from Trump cuts, on brink of dramatic downsizing

Trump’s proposed budget details drastic cuts to biomedical research and global health

This octopus grew a ninth arm—which soon developed a mind of its own

Ancient poop yields world’s oldest butterfly fossils

Explosive mpox outbreak in Sierra Leone overwhelms health systems

Golden Dome: It’s all about the data

Golden Dome’s ground control and software layers may be as vital — and competitive — as its satellites.

The post Golden Dome: It’s all about the data appeared first on SpaceNews.

South Korea’s Venus-focused cubesat advances as larger missions face NASA cuts

South Korea’s state-backed Institute for Basic Science has ordered the first of five cubesats to study Venus from LEO starting next year, bolstering sustained planetary research as flagship missions face budget uncertainty.

The post South Korea’s Venus-focused cubesat advances as larger missions face NASA cuts appeared first on SpaceNews.

Lower Alzheimer's Risk With the MIND Diet, a Combo of the DASH and Mediterranean Diets

South America’s First Hunter-Gatherers Appeared, Then Unusually Disappeared 2,000 Years Ago

The Smallest Dinosaur Ever Was Just 11 Inches Long, and Had Beautiful Tail Feathers

EchoStar bets on TV amid FCC mobile scrutiny

EchoStar has ordered another geostationary satellite for its Dish Network TV broadcast business, even as the company signals the possibility of seeking bankruptcy protection amid a regulatory probe into its mobile spectrum licenses.

The post EchoStar bets on TV amid FCC mobile scrutiny appeared first on SpaceNews.

8 Ways to Boost the Effects of Ozempic and Other GLP-1 Drug Treatments

Andromeda galaxy may not collide with the Milky Way after all

Since 1912, we’ve known that the Andromeda galaxy is racing towards our own Milky Way at about 110 kilometres per second. A century later, in 2012, astrophysicists at the Space Telescope Science Institute (STScI) in Maryland, US, came to a striking conclusion. In four billion years, they predicted, a collision between the two galaxies was a sure thing.

Now, it’s not looking so sure.

Using the latest data from the European Space Agency’s Gaia astrometric mission, astrophysicists led by Till Sawala of the University of Helsinki, Finland, re-modelled the impending crash, and found that it’s 50/50 as to whether a collision happens or not.

This new result differs from the 2012 one because it considers the gravitational effect of an additional galaxy, the Large Magellanic Cloud (LMC), alongside the Milky Way, Andromeda and the nearby Triangulum spiral galaxy, M33. While M33’s gravity, in effect, adds to Andromeda’s motion towards us, Sawala and colleagues found that the LMC’s gravity tends to pull the Milky Way out of Andromeda’s path.

“We’re not predicting that the merger is not going to happen within 10 billion years, we’re just saying that from the data we have now, we can’t be certain of it,” Sawala tells Physics World.

“A step in the right direction”

While the LMC contains only around 10% of the Milky Way’s mass, Sawala and colleagues’ work indicates that it may nevertheless be massive enough to turn a head-on collision into a near-miss. Incorporating its gravitational effects into simulations is therefore “a step in the right direction”, says Sangmo Tony Sohn, a support scientist at the STScI and a co-author of the 2012 paper that predicted a collision.

Even with more detailed simulations, though, uncertainties in the motion and masses of the galaxies leave room for a range of possible outcomes. According to Sawala, the uncertainty with the greatest effect on merger probability lies in the so-called “proper motion” of Andromeda, which is its motion as it appears on our night sky. This motion is a mixture of Andromeda’s radial motion towards the centre of the Milky Way and the two galaxies’ transverse motion perpendicular to one another.

If the combined transverse motion is large enough, Andromeda will pass the Milky Way at a distance greater than 200 kiloparsecs (652,000 light years). This would avert a collision in the next 10 billion years, because even when the two galaxies loop back on each other, their next pass would still be too distant, according to the models.

Conversely, a smaller transverse motion would limit the distance at closest approach to less than 200 kiloparsecs. If that happens, Sawala says the two galaxies are “almost certain to merge” because of the dynamical friction effect, which arises from the diffuse halo of old stars and dark matter around galaxies. When two galaxies get close enough, these haloes begin interacting with each other, generating tidal and frictional heating that robs the galaxies of orbital energy and makes them fall ever closer.
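
The 200-kiloparsec dividing line lends itself to a simple tally. The sketch below converts the threshold to light years and, assuming a merger probability can be estimated as the fraction of simulated scenarios whose closest approach falls inside the threshold, computes that fraction. The sample distances are invented, and a real study follows full orbits, including dynamical friction, rather than applying a single cut.

```python
LY_PER_PARSEC = 3.26156  # light years per parsec


def kpc_to_light_years(kpc: float) -> float:
    """Convert kiloparsecs to light years."""
    return kpc * 1_000 * LY_PER_PARSEC


def merger_fraction(closest_approaches_kpc, threshold_kpc=200.0):
    """Share of simulated orbits whose closest approach is inside the threshold."""
    inside = sum(1 for d in closest_approaches_kpc if d < threshold_kpc)
    return inside / len(closest_approaches_kpc)


print(f"{kpc_to_light_years(200):,.0f} light years")  # 652,312 light years

# Hypothetical closest-approach distances (kpc) from six simulated scenarios.
samples = [120, 180, 250, 310, 90, 400]
print(merger_fraction(samples))  # 0.5
```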

The LMC itself is an excellent example of how this works. “The LMC is already so close to the Milky Way that it is losing its orbital energy, and unlike [Andromeda], it is guaranteed to merge with the Milky Way,” Sawala says, adding that, similarly, M33 stands a good chance of merging with Andromeda.

“A very delicate task”

Because Andromeda is 2.5 million light years away, its proper motion is very hard to measure. Indeed, no-one had ever done it until the STScI team spent 10 years monitoring the galaxy, which is also known as M31, with the Hubble Space Telescope – something Sohn describes as “a very delicate task” that continues to this day.

Another area where there is some ambiguity is in the mass estimate of the LMC. “If the LMC is a little more massive [than we think], then it pulls the Milky Way off the collision course with M31 a little more strongly, reducing the possibility of a merger between the Milky Way and M31,” Sawala explains.

The good news is that these ambiguities won’t be around forever. Sohn and his team are currently analysing new Hubble data to provide fresh constraints on the Milky Way’s orbital trajectory, and he says their results have been consistent with the Gaia analyses so far. Sawala agrees that new data will help reduce uncertainties. “There’s a good chance that we’ll know more about what is going to happen fairly soon, within five years,” he says.

Even if the Milky Way and Andromeda don’t collide in the next 10 billion years, though, that won’t be the end of the story. “I would expect that there is a very high probability that they will eventually merge, but that could take tens of billions of years,” Sawala says.

The research is published in Nature Astronomy.

The post Andromeda galaxy may not collide with the Milky Way after all appeared first on Physics World.

EYCORE – Emerging Polish Space Defence Company Becomes Key Player in Developing National Earth Observation Constellation

During the recent 3rd ESA Security Conference held in Warsaw, the winning consortium for the implementation of Poland’s National Earth Observation Program CAMILA (Country Awareness Mission in Land Analysis) was […]

The post EYCORE – Emerging Polish Space Defence Company Becomes Key Player in Developing National Earth Observation Constellation appeared first on SpaceNews.

Space assets could be held ransom. Will we have any choice but to pay?

Ransomware exploits value. Attackers force victims to choose between paying in the hope of getting their systems back and losing them. For victims, it is hard to justify […]

The post Space assets could be held ransom. Will we have any choice but to pay? appeared first on SpaceNews.

SpaceNews Appoints Laurie Diamond as VP of Business Development to Accelerate Revenue Growth and Strategic Expansion

Washington, D.C. — SpaceNews, the trusted source for space industry news and analysis for more than 35 years, announces the appointment of Laurie Diamond as Vice President of Business Development. […]

The post SpaceNews Appoints Laurie Diamond as VP of Business Development to Accelerate Revenue Growth and Strategic Expansion appeared first on SpaceNews.

Thinking of switching research fields? Beware the citation ‘pivot penalty’ revealed by new study

Scientists who switch research fields suffer a drop in the impact of their new work – a so-called “pivot penalty”. That is according to a new analysis of scientific papers and patents, which finds that the pivot penalty increases the further away a researcher shifts from their previous topic of research.

The analysis has been carried out by a team led by Dashun Wang and Benjamin Jones of Northwestern University in Illinois. They analysed more than 25 million scientific papers published between 1970 and 2015 across 154 fields, as well as 1.7 million US patents granted between 1985 and 2020 across 127 technology classes.

To identify pivots and quantify how far a scientist moves from their existing work, the team looked at the scientific journals referenced in a paper and compared them with those cited in the researcher’s previous work. The more the set of journals referenced in the new work diverged from those the researcher usually cited, the larger the pivot. For patents, the researchers used “technological field codes” to measure pivots.
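The journal-based pivot measure can be pictured as a distance between two citation profiles. The sketch below assumes, as one plausible reading of the description, that each profile is a count of how often each journal is referenced and that pivot size is 1 minus the cosine similarity of the two profiles. The function name `pivot_size` and the toy journal lists are hypothetical illustrations, not the study's code or data.

```python
from collections import Counter
import math


def pivot_size(new_refs: list[str], prior_refs: list[str]) -> float:
    """Hypothetical pivot score: 1 - cosine similarity of journal-citation profiles.

    0.0 means the new work cites journals in the same proportions as the prior
    work; 1.0 means the two bodies of work cite no journals in common.
    """
    new_counts = Counter(new_refs)      # journal -> references in the new work
    prior_counts = Counter(prior_refs)  # journal -> references in prior work
    if not new_counts or not prior_counts:
        raise ValueError("both reference lists must be non-empty")
    # Counter returns 0 for journals absent from prior_counts
    dot = sum(new_counts[j] * prior_counts[j] for j in new_counts)
    sq_new = sum(c * c for c in new_counts.values())
    sq_prior = sum(c * c for c in prior_counts.values())
    return 1.0 - dot / math.sqrt(sq_new * sq_prior)


prior = ["Phys. Rev. B", "Phys. Rev. B", "Nature Physics", "Phys. Rev. Lett."]
print(pivot_size(prior, prior))                    # 0.0 (same profile)
print(pivot_size(["Phys. Rev. B", "Cell"], prior))  # about 0.423 (partial overlap)
print(pivot_size(["Cell", "Immunity"], prior))     # 1.0 (no shared journals)
```

Cosine similarity is used here because it compares the *proportions* of cited journals rather than the raw number of references, so a long paper and a short paper drawing on the same journals score as a small pivot.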

Larger pivots are associated with fewer citations and a lower propensity for high-impact papers, defined as those in the top 5% of citations received in their field and publication year. Low-pivot work – moving only slightly away from the typical field of research – led to a high-impact paper 7.4% of the time, yet the highest-pivot shift resulted in a high-impact paper only 2.2% of the time. A similar trend was seen for patents.
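The "high-impact" label is a rank cutoff applied separately within each field and publication year. A minimal sketch of that definition follows; the dictionary keys and the function name `flag_high_impact` are hypothetical, and how the study handled ties at the cutoff is not stated, so this version simply breaks ties by input order.

```python
from collections import defaultdict


def flag_high_impact(papers, top_percent=5):
    """Return ids of papers in the top `top_percent`% of citations for their (field, year).

    `papers` is a list of dicts with hypothetical keys "id", "field", "year", "citations".
    """
    groups = defaultdict(list)
    for p in papers:
        groups[(p["field"], p["year"])].append(p)

    high_impact = set()
    for group in groups.values():
        ranked = sorted(group, key=lambda p: p["citations"], reverse=True)
        # integer ceiling of top_percent% of the group size, with at least one paper
        n_top = max(1, (len(ranked) * top_percent + 99) // 100)
        high_impact.update(p["id"] for p in ranked[:n_top])
    return high_impact
```

Grouping by field and year before ranking matters because citation rates differ widely between disciplines and older papers have had longer to accumulate citations.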

Within an individual researcher’s output, low-pivot work was 2.1% more likely to result in a high-impact paper, while high-pivot work was 1.8% less likely to do so. The study found the pivot penalty to be almost universal across scientific fields, and it persists regardless of a scientist’s career stage, productivity and collaborations.

COVID impact

The researchers also studied the impact of COVID-19, when many scientists pivoted to pandemic-related research. After analysing 83 000 COVID-19 papers and 2.63 million non-COVID papers published in 2020, they found that COVID-19 research was not immune to the pivot penalty. Such research had a higher impact than average, but the further a scientist shifted from their previous work to study COVID-19, the less impact the research had.

“Shifting research directions appears both difficult and costly, at least initially, for individual researchers,” Wang told Physics World. He thinks, however, that researchers should not avoid change but rather “approach it strategically”. Researchers should, for example, try anchoring their new work in the conventions of their prior field or the one they are entering.

To help researchers pivot, Wang says research institutions should “acknowledge the friction” and not “assume that a promising researcher will thrive automatically after a pivot”. Instead, he says, institutions need to design support systems, such as funding or protected time to explore new ideas, or pairing researchers with established scholars in the new field.

The post Thinking of switching research fields? Beware the citation ‘pivot penalty’ revealed by new study appeared first on Physics World.

Ask me anything: Tom Woodroof – ‘Curiosity, self-education and carefully-chosen guidance can get you surprisingly far’

What skills do you use every day in your job?

I co-founded Mutual Credit Services in 2020 to help small businesses thrive independently of the banking sector. As a financial technology start-up, we’re essentially trying to create a “commons” economy, where power lies in the hands of people, not big institutions, thereby making us more resilient to the climate crisis.

Those goals are probably as insanely ambitious as they sound, which is why my day-to-day work is a mix of complexity economics, monetary theory and economic anthropology. I spend a lot of time thinking hard about how these ideas fit together, before building new tech platforms, apps and services, which requires analytical and design thinking.

There are still many open questions about business, finance and economics that I’d like to do research on, and ultimately develop into new services. I’m constantly learning through trial projects and building a pipeline of ideas for future exploration.

Developing the business involves a lot of decision-making, project management and team-building. In fact, I’m spending more and more of my time on commercialization – working out how to bring new services to market, nurturing partnerships and talking to potential early adopters. It’s vital that I can explain novel financial ideas to small businesses in a way they can understand and have confidence in. So I’m always looking for simpler and more compelling ways to describe what we do.

What do you like best and least about your job?

What I like best is the variety and creativity. I’m a generalist by nature, and love using insights from a variety of disciplines. The practical application of these ideas to create a better economy feels profoundly meaningful, and something that I’d be unlikely to get in any other job. I also love the autonomy of running a business. With a small but hugely talented and enthusiastic team, we’ve so far managed to avoid the company becoming rigid and institutionalized. It’s great to work with people on our team and beyond who are excited by what we’re doing, and want to be involved.

The hardest thing is facing the omnicrisis of climate breakdown and likely societal collapse that makes this work necessary in the first place. As with all start-ups, the risk of failure is huge, no matter how good the ideas are, and it’s frustrating to spend so much time on tasks that just keep things afloat, rather than move the mission forward. I work long hours and the job can be stressful.

What do you know today, that you wish you knew when you were starting out in your career?

I spent a lot of time during my PhD at Liverpool worrying that I’d get trapped in one narrow field, or drift into one of the many default career options. I wish I’d known how many opportunities there are to do original, meaningful and self-directed work – especially if you’re open to unconventional paths, such as the one I’ve followed, and can find the right people to do it with.

It’s also easy to assume that certain skills or fields are out of reach, whereas I’ve found again and again that a mix of curiosity, self-education and carefully-chosen guidance can get you surprisingly far. Many things that once seemed intimidating now feel totally manageable. That said, I’ve also learned that everything takes at least three times longer than expected – especially when you’re building something new. Progress often looks like small compounding steps, rather than a handful of breakthroughs.

The post Ask me anything: Tom Woodroof – ‘Curiosity, self-education and carefully-chosen guidance can get you surprisingly far’ appeared first on Physics World.

Were Hominins in Europe 6 Million Years Ago? Footprint Find Sparks Debate

A Neuralink Rival Just Tested a Brain Implant in a Person

Laboratory-scale three-dimensional X-ray diffraction makes its debut

Trips to synchrotron facilities could become a thing of the past for some researchers thanks to a new laboratory-scale three-dimensional X-ray diffraction microscope designed by a team from the University of Michigan, US. The device, which is the first of its kind, uses a liquid-metal-jet anode to produce high-energy X-rays and can probe almost everything a traditional synchrotron can. It could therefore give a wider community of academic and industrial researchers access to synchrotron-style capabilities.

Synchrotrons are particle accelerators that produce intense, highly collimated beams of electromagnetic radiation at wavelengths ranging from the infrared to hard X-rays. They use powerful magnets to steer high-energy electrons around a storage ring, taking advantage of the fact that accelerated electrons, including those whose paths are bent by a magnetic field, emit electromagnetic radiation.

One application for this synchrotron radiation is a technique called three-dimensional X-ray diffraction (3DXRD) microscopy. This powerful technique enables scientists to study the mechanical behaviour of polycrystalline materials, and it works by constructing three-dimensional images of a sample from X-ray images taken at multiple angles, much as a CT scan images the human body. Instead of the imaging device rotating around a patient, however, it is the sample that rotates in the focus of the powerful X-ray beam.

At present, 3DXRD can only be performed at synchrotrons. These are national and international facilities, and scientists must apply for beamtime months or even years in advance. If successful, they receive a block of time lasting six days at the most, during which they must complete all their experiments.

A liquid-metal-jet anode

Previous attempts to make 3DXRD more accessible by downscaling it have largely been unsuccessful. In particular, efforts to produce high-energy X-rays in the laboratory have foundered because X-ray anodes are traditionally made of solid metal, which cannot withstand the very high electron-beam power needed to produce such X-rays.

The new lab-scale device developed by mechanical engineer Ashley Bucsek and colleagues overcomes this problem thanks to a liquid-metal-jet anode that can absorb more power and therefore produce more X-ray photons per unit of anode area. The sample volume is illuminated by a monochromatic box- or line-focused X-ray beam while diffraction patterns are recorded in sequence as the sample rotates through a full circle. “The technique is capable of measuring the volume, position, orientation and strain of thousands of polycrystalline grains simultaneously,” Bucsek says.
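One standard way a diffraction measurement yields strain is through Bragg's law, λ = 2d sin θ: a small shift in a grain's diffraction angle reveals a change in its lattice spacing d relative to an unstrained reference d₀. The sketch below is a generic textbook calculation, not the Michigan team's analysis pipeline; the 24 keV beam energy and the 2.24 Å reference spacing are hypothetical example values.

```python
import math

HC_KEV_ANGSTROM = 12.398419843  # h*c in keV·Å, so wavelength[Å] = HC / energy[keV]


def wavelength_angstrom(energy_kev: float) -> float:
    return HC_KEV_ANGSTROM / energy_kev


def d_spacing(two_theta_deg: float, energy_kev: float) -> float:
    """Lattice-plane spacing from Bragg's law, lambda = 2 d sin(theta)."""
    theta = math.radians(two_theta_deg) / 2.0
    return wavelength_angstrom(energy_kev) / (2.0 * math.sin(theta))


def lattice_strain(two_theta_deg: float, energy_kev: float, d0_angstrom: float) -> float:
    """Normal strain along the scattering vector: (d - d0) / d0."""
    return (d_spacing(two_theta_deg, energy_kev) - d0_angstrom) / d0_angstrom


# Hypothetical example values: 24 keV X-rays, unstrained spacing 2.24 Å
energy = 24.0
d0 = 2.24
# Diffraction angle 2θ at which an unstrained grain would diffract
two_theta_ref = 2 * math.degrees(math.asin(wavelength_angstrom(energy) / (2 * d0)))

# A diffraction angle 0.01° smaller implies a larger d, i.e. tensile strain
print(lattice_strain(two_theta_ref - 0.01, energy, d0))
```

Because strains in engineering alloys are typically of order 10⁻³, the corresponding angular shifts are only hundredths of a degree, which is why precise, repeated measurements over a full rotation are needed.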

When members of the Michigan team tested the device by imaging titanium alloy samples, they found it was as accurate as synchrotron-based 3DXRD, making it a practical alternative. “I conducted my PhD doing 3DXRD experiments at synchrotron user facilities, so having full-time access to a personal 3DXRD microscope was always a dream,” Bucsek says. “My colleagues and I hope that the adaptation of this technology from the synchrotron to the laboratory scale will make it more accessible.”

The design for the device, which is described in Nature Communications, was developed in collaboration with a US-based instrumentation firm, PROTO Manufacturing. Bucsek says she is excited by the possibility that commercialization will make 3DXRD more “turn-key” and thus reduce the need for specialized knowledge in the field.

The Michigan researchers now hope to use their instrument to perform experiments that must be carried out over long periods of time. “Conducting such prolonged experiments at synchrotron user facilities would be difficult, if not impossible, due to the high demand, so lab-3DXRD can fill a critical capability gap in this respect,” Bucsek tells Physics World.

The post Laboratory-scale three-dimensional X-ray diffraction makes its debut appeared first on Physics World.

How to Make AI Faster and Smarter—With a Little Help From Physics

White House to withdraw Isaacman nomination to lead NASA

The White House is withdrawing the nomination of Jared Isaacman to be administrator of NASA, throwing an agency already reeling from proposed massive budget cuts into further disarray.

The post White House to withdraw Isaacman nomination to lead NASA appeared first on SpaceNews.

Blue Origin performs 12th crewed New Shepard suborbital flight

Blue Origin sent six people to space on a suborbital spaceflight May 31 that the company’s chief executive says is both a good business and a way to test technology.

The post Blue Origin performs 12th crewed New Shepard suborbital flight appeared first on SpaceNews.

Black Death Bacterium Evolved to be Less Aggressive to Kill Victims Slowly

Analysts Say Trump Trade Wars Would Harm the Entire US Energy Sector, From Oil to Solar

Majorana bound states spotted in system of three quantum dots

Firm evidence of Majorana bound states in quantum dots has been reported by researchers in the Netherlands. Majorana modes appeared at both edges of a quantum dot chain when an energy gap suppressed them in the centre, and the experiment could allow researchers to investigate the unique properties of these quasiparticles in unprecedented detail. This could bring topologically protected quantum bits (qubits) for quantum computing one step closer.

Majorana fermions were first proposed in 1937 by the Italian physicist Ettore Majorana. They were imagined as elementary particles that would be their own antiparticles. However, such elementary particles have never been definitively observed. Instead, physicists have worked to create Majorana quasiparticles (particle-like collective excitations) in condensed matter systems.

In 2001, the theoretical physicist Alexei Kitaev at Microsoft Research proposed that “Majorana bound states” could be produced in nanowires comprising topological superconductors. A single Majorana quasiparticle would exist as a nonlocal mode split between the two ends of a wire, with zero amplitude in the centre. Both ends would be constrained by the laws of physics to remain identical despite being spatially separated. This phenomenon could produce “topological qubits” robust to local disturbance.

Microsoft and others are still researching Majorana modes on this platform. Multiple groups claim to have observed them, but this remains controversial. “It’s still a matter of debate in these extended 1D systems: have people seen them? Have they not seen them?” says Srijit Goswami of QuTech in Delft.

Controlling disorder

In 2012, theoretical physicists Jay Sau, then of Harvard University, and Sankar Das Sarma of the University of Maryland proposed looking for Majorana bound states in quantum dots. “We looked at [the nanowires] and thought ‘OK, this is going to be a while given the amount of disorder that system has – what are the ways this disorder could be controlled?’ and this is exactly one of the ways we thought it could work,” explains Sau. However, Sau says the proposal was not taken seriously at the time, partly because people underestimated the problem of disorder.

Goswami and others have previously observed “poor man’s Majoranas” (PMMs) in two quantum dots. While they share some properties with Majorana modes, PMMs lack topological protection. Last year, the group coupled two spin-polarized quantum dots through a semiconductor–superconductor hybrid material. At specific tuning points, the researchers found zero-bias conductance peaks.

“Kitaev says that if you tune things exactly right you have one Majorana on one dot and another Majorana on another dot,” says Sau. “But if you’re slightly off then they’re talking to each other. So it’s an uncomfortable notion that they’re spatially separated if you just have two dots next to each other.”

Recently, a group that included Goswami’s colleagues at QuTech found that the introduction of a third quantum dot stabilized the Majorana modes. However, they were unable to measure the energy levels in the quantum dots.

Zero energy

In new work, Goswami’s team used systems of three electrostatically gated, spin-polarized quantum dots in a 2D electron gas, joined by hybrid semiconductor–superconductor regions. The quantum dots had to be tuned to zero energy. The dots exchanged charge in two ways: by standard electron hopping through the semiconductor and by Cooper-pair-mediated coupling through the superconductor.

“You have to change the energy level of the superconductor–semiconductor hybrid region so that these two processes have equal probability,” explains Goswami. “Once you satisfy these conditions, then you get Majoranas at the ends.”
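The tuning condition Goswami describes resembles the "sweet spot" of Kitaev's minimal chain model: each dot at zero energy, and normal hopping equal in strength to Cooper-pair-mediated coupling. The sketch below diagonalizes a textbook three-site Kitaev chain under that idealization. It is a toy model (spinless, noise-free, with hypothetical parameter values in arbitrary energy units), not the QuTech team's device model. At the sweet spot, two eigenvalues sit at zero (to numerical precision), corresponding to Majorana modes at the two ends, separated from the other states by a gap; shifting the dot energy away from zero splits them.

```python
import numpy as np


def kitaev_bdg(n_sites: int, mu: float, t: float, delta: float) -> np.ndarray:
    """Bogoliubov-de Gennes matrix of an open Kitaev chain of spinless fermions.

    Basis: (c_1, ..., c_N, c_1^dagger, ..., c_N^dagger).
    mu:    on-site energy of each dot (0 = dots tuned to zero energy)
    t:     normal electron hopping between neighbouring dots
    delta: Cooper-pair-mediated coupling between neighbouring dots
    """
    h = -mu * np.eye(n_sites)
    d = np.zeros((n_sites, n_sites))
    for j in range(n_sites - 1):
        h[j, j + 1] = h[j + 1, j] = -t
        d[j, j + 1] = delta    # pairing matrix is antisymmetric
        d[j + 1, j] = -delta
    # For real parameters the BdG matrix is [[h, d], [-d, -h]], which is symmetric
    return np.block([[h, d], [-d, -h]])


def spectrum(mu: float, t: float, delta: float, n_sites: int = 3) -> np.ndarray:
    return np.sort(np.linalg.eigvalsh(kitaev_bdg(n_sites, mu, t, delta)))


# Sweet spot: dots at zero energy, both coupling processes equally strong
print(np.round(spectrum(mu=0.0, t=1.0, delta=1.0), 6))
# Detuned: dot energies shifted away from zero, so the end modes hybridize
print(np.round(spectrum(mu=0.5, t=1.0, delta=1.0), 6))
```

In this idealized model the sweet-spot spectrum is {−2t, −2t, 0, 0, 2t, 2t}: the zero-energy pair is the Majorana mode shared between the end dots, and the states at ±2t play the role of the gapped bulk.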

As well as providing more topological protection, adding a third quantum dot gave the team crucial physical insight. “Topology is actually a property of a bulk system,” he explains. “Something special happens in the bulk which gives rise to things happening at the edges. Majoranas are something that emerge on the edges because of something happening in the bulk.” With three quantum dots, there is a well-defined bulk and edge that can be probed separately: “We see that when you have what is called a gap in the bulk your Majoranas are protected, but if you don’t have that gap your Majoranas are not protected,” Goswami says.

Producing a qubit will require further work to achieve controllable coupling of four Majorana bound states and to integrate a readout circuit that can detect this coupling. In the near term, the researchers are investigating other phenomena, such as the potential to swap Majorana bound states.

Sau is now at the University of Maryland and says that an important benefit of the experimental platform is that it can be determined unambiguously whether or not Majorana bound states have been observed. “You can literally put a theory simulation next to the experiment and they look very similar.”

The research is published in Nature.

The post Majorana bound states spotted in system of three quantum dots appeared first on Physics World.