Wood Pellet Mills Are Prone to Catching Fire. Why Build Them in California?

The Helgoland 2025 meeting, marking 100 years of quantum mechanics, has featured a lot of mind-bending fundamental physics, quite a bit of which has left me scratching my head.

So it was great to hear a brilliant talk by David Moore of Yale University about some amazing practical experiments using levitated, trapped microspheres as quantum sensors to detect what he calls the “invisible” universe.

If the work sounds familiar to you, that’s because Moore’s team won a Physics World Top 10 Breakthrough of the Year award in 2024 for using their technique to detect the alpha decay of individual lead-212 atoms.

Speaking in the Nordseehalle on the island of Helgoland, Moore explained the next stage of the experiment, which could see it detect neutrinos “in a couple of months” at the earliest – and “at least within a year” at the latest.

Of course, physicists have already detected neutrinos, but it’s a complicated business, generally involving huge devices in deep underground locations where background signals are minimized. Yale’s set-up is much cheaper, smaller and more convenient, involving no more than a couple of lab benches.

As Moore explained, he and his colleagues first trap silica spheres at low pressure, before removing excess electrons to electrically neutralize them. They then stabilize the spheres’ rotation before cooling them to microkelvin temperatures.

In the work that won the Physics World award last year, the team used samples of radon-220, which decays first into polonium-216 and then lead-212. These nuclei embed themselves in the silica spheres, which recoil when the lead-212 decays by releasing an alpha particle (Phys. Rev. Lett. 133 023602).

Moore’s team is able to measure the tiny recoil by watching how light scatters off the spheres. “We can see the force imparted by a subatomic particle on a heavier object,” he told the audience at Helgoland. “We can see single nuclear decays.”

Now the plan is to extend the experiment to detect neutrinos. These won’t (at least initially) be the neutrinos that stream through the Earth from the Sun or even those from a nuclear reactor.

Instead, the idea will be to embed the spheres with nuclei that undergo beta decay, releasing a much lighter neutrino in the process. Moore says the team will do this within a year and, one day, potentially even use it to spot dark matter.

“We are reaching the quantum measurement regime,” he said. It’s a simple concept, even if the name – “Search for new Interactions in a Microsphere Precision Levitation Experiment” (SIMPLE) – isn’t.

This article forms part of Physics World’s contribution to the 2025 International Year of Quantum Science and Technology (IYQ), which aims to raise global awareness of quantum physics and its applications.

Stay tuned to Physics World and our international partners throughout the next 12 months for more coverage of the IYQ.

Find out more on our quantum channel.

The post Yale researcher says levitated spheres could spot neutrinos ‘within months’ appeared first on Physics World.

Strategic Investment to Support Critical U.S. and Allied Solid Rocket Motor and Space Launch Programs Cedar City, Utah – June 11, 2025 American Pacific Corporation (AMPAC), a leading supplier of […]

The post American Pacific Corporation Finalizes $100 Million Capacity Expansion with Parent Company Approval appeared first on SpaceNews.

AST SpaceMobile has reached a deal enabling bankrupt satellite operator Ligado Networks to pay the more than $500 million it owes Viasat, in exchange for L-band spectrum to boost its planned direct-to-smartphone services.

The post AST SpaceMobile reaches deal to bankroll Ligado’s Viasat settlement appeared first on SpaceNews.

NGSO solutions positioned to capture 97% market share by 2034, driven by Starlink growth

The post “The Starlink Effect”: NGSO Services to Dominate Maritime Satellite Communications Market appeared first on SpaceNews.

There is huge promise in AI to transform spacecraft design. From near real-time performance calculations that enable broader design space exploration, to generative algorithms using mission requirements as inputs to […]

The post Is AI the next frontier in spacecraft design, or just a shiny buzzword? appeared first on SpaceNews.

China’s CAS Space completed a hot fire test of its Kinetica-2 rocket’s first stage, targeting a maiden orbital launch later this year.

The post CAS Space performs Kinetica-2 first stage hot fire test ahead of first launch and cargo demo appeared first on SpaceNews.

The animal world – including some of its ickiest parts – never ceases to amaze. According to researchers in Canada and Singapore, velvet worm slime contains an ingredient that could revolutionize the design of high-performance polymers, making them far more sustainable than current versions.

“We have been investigating velvet worm slime as a model system for inspiring new adhesives and recyclable plastics because of its ability to reversibly form strong fibres,” explains Matthew Harrington, the McGill University chemist who co-led the research with Ali Miserez of Nanyang Technological University (NTU). “We needed to understand the mechanism that drives this reversible fibre formation, and we discovered a hitherto unknown feature of the proteins in the slime that might provide a very important clue in this context.”

The velvet worm (phylum Onychophora) is a small, caterpillar-like creature that lives in humid forests. Although several organisms, including spiders and mussels, produce protein-based slimy material outside their bodies, the slime of the velvet worm is unique. Produced from specialized papillae on each side of the worm’s head, and squirted out in jets whenever the worm needs to capture prey or defend itself, it quickly transforms from a sticky, viscoelastic gel into stiff, glassy fibres as strong as nylon.

When dissolved in water, these stiff fibres return to their biomolecular precursors. Remarkably, new fibres can then be drawn from the solution – implying that the instructions for fibre self-assembly are “encoded” within the precursors themselves, Harrington says.

Previously, the molecular mechanisms behind this reversibility were little understood. In the present study, however, the researchers used protein sequencing and the AI-guided protein structure prediction algorithm AlphaFold to identify a specific high-molecular-weight protein in the slime. Known as a leucine-rich repeat, this protein has a structure similar to that of a cell surface receptor protein called a Toll-like receptor (TLR).

In biology, Miserez explains, this type of receptor is involved in immune system response. It also plays a role in embryonic or neural development. In the worm slime, however, that’s not the case.

“We have now unveiled a very different role for TLR proteins,” says Miserez, who works in NTU’s materials science and engineering department. “They play a structural, mechanical role and can be seen as a kind of ‘glue protein’ at the molecular level that brings together many other slime proteins to form the macroscopic fibres.”

Miserez adds that the team found this same protein in different species of velvet worms that diverged from a common ancestor nearly 400 million years ago. “This means that this different biological function is very ancient from an evolutionary perspective,” he explains.

“It was very unusual to find such a protein in the context of a biological material,” Harrington adds. “By predicting the protein’s structure and its ability to bind to other slime proteins, we were able to hypothesize its important role in the reversible fibre formation behaviour of the slime.”

The team’s hypothesis is that the reversibility of fibre formation is based on receptor-ligand interactions between several slime proteins. While Harrington acknowledges that much work remains to be done to verify this, he notes that such binding is a well-described principle in many groups of organisms, including bacteria, plants and animals. It is also crucial for cell adhesion, development and innate immunity. “If we can confirm this, it could provide inspiration for making high-performance non-toxic (bio)polymeric materials that are also recyclable,” he tells Physics World.

The study, which is detailed in PNAS, was mainly based on computational modelling and protein structure prediction. The next step, say the McGill researchers, is to purify or recombinantly express the proteins of interest and test their interactions in vitro.

The post Worm slime could inspire recyclable polymer design appeared first on Physics World.

Look Up, a French space situational awareness company, has raised nearly 50 million euros ($57.6 million) to continue development of a radar network to track space objects.

The post French SSA company Look Up raises 50 million euros appeared first on SpaceNews.

The Space Force would receive $29 billion under the HAC-D bill

The post House appropriators advance defense bill, slam White House for budget delay appeared first on SpaceNews.

Logos Space Services has raised $50 million to advance engineering plans for more than 4,000 broadband satellites, the startup founded by a former Google executive and NASA project manager announced June 12.

The post Logos nets $50 million to advance plans for more than 4,000 broadband satellites appeared first on SpaceNews.

NASA and Axiom Space are indefinitely delaying a private astronaut mission to the International Space Station because of an air leak in a Russian module.

The post NASA indefinitely delays private astronaut mission, citing air leak in Russian module appeared first on SpaceNews.

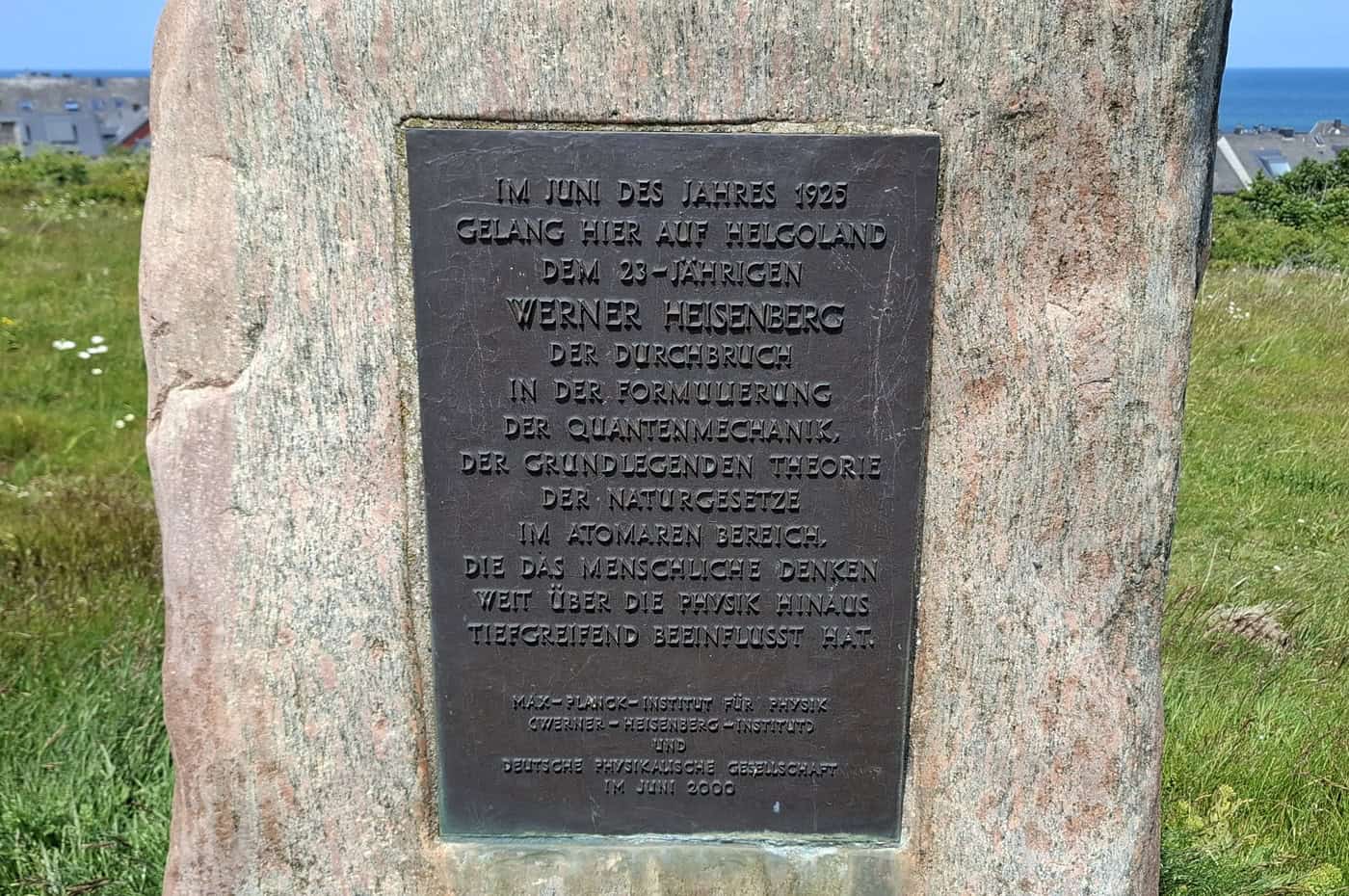

I’ve been immersed in quantum physics this week at the Helgoland 2025 meeting, which is being held to mark Werner Heisenberg’s seminal development of quantum mechanics on the island 100 years ago.

But when it comes to science, Helgoland isn’t only about quantum physics. It’s also home to an outpost of the Alfred Wegener Institute, which is part of the Helmholtz Centre for Polar and Marine Research and named after the German scientist who was the brains behind continental drift.

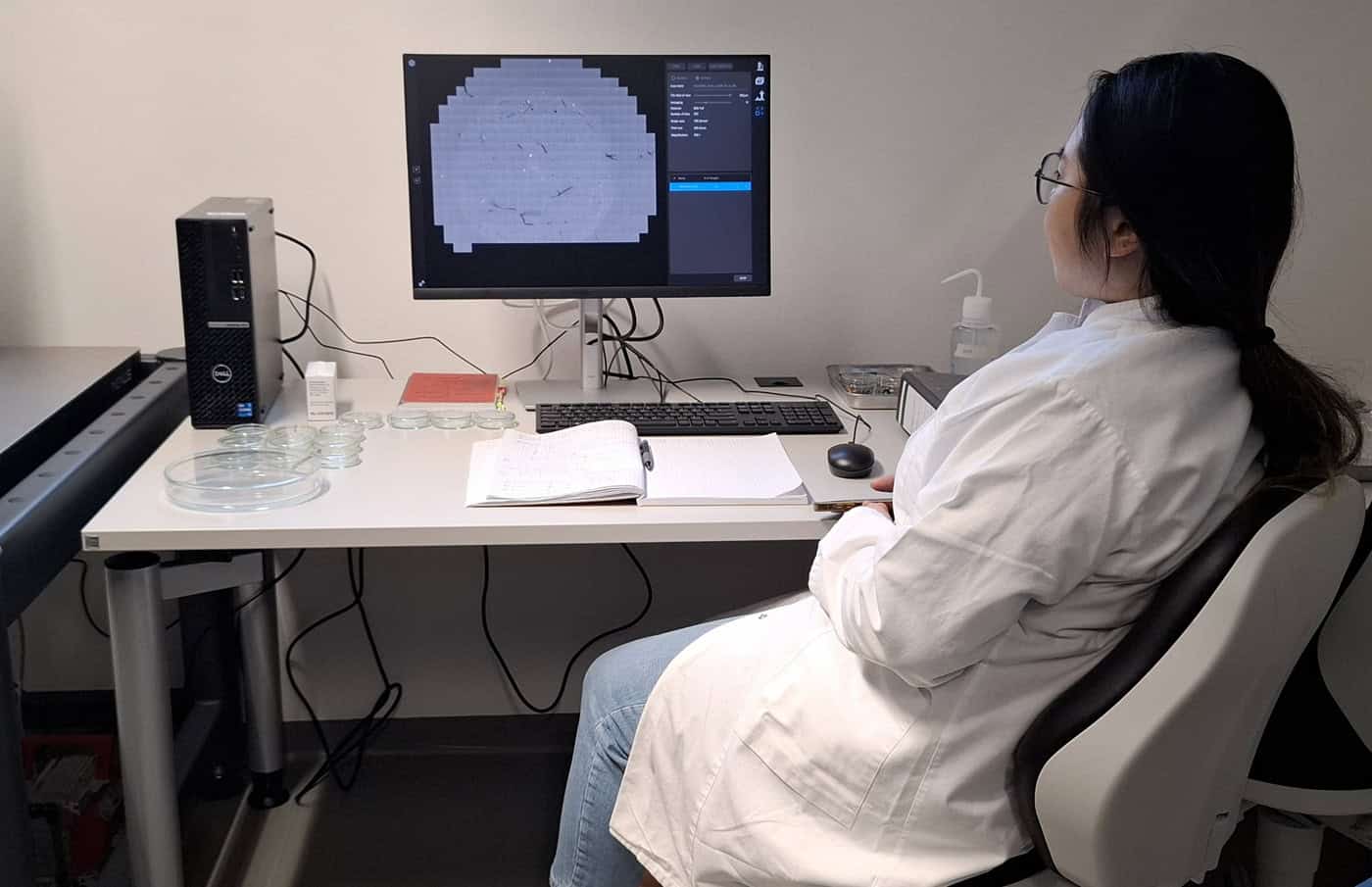

Dating back to 1892, the Biological Institute Helgoland (BAH) has about 80 permanent staff. They include Sebastian Primpke, a polymer scientist who studies the growing danger that microplastics and microfibres pose to the oceans.

Microplastics, which are any kind of small plastic materials, generally range in size from one micron to about 5 mm. They are a big danger for fish and other forms of marine life, as Marric Stephens reported in this recent feature.

Primpke studies microplastics using biofilms attached to a grid immersed in a tank containing water piped continuously in from the North Sea. The tank is covered with a lid to keep samples in the dark, mimicking underwater conditions.

He and his team periodically remove samples from the films and study them in the lab using infrared and Raman microscopes. They’re able to obtain information such as the length, width, area and perimeter of individual microplastic particles, as well as how convex or concave they are.

Other researchers at the Helgoland lab study microfibres, which can come from cellulose and artificial plastics, using electron microscopy. You can find out more information about the lab’s work here.

Primpke, who is a part-time firefighter, has lived and worked on Helgoland for a decade. He says it’s a small community, where everyone knows everyone else, which has its good and bad sides.

With only 1500 residents on the island, which lies 50 km from the mainland, finding good accommodation can be tricky. But with so many tourists, there are more amenities than you’d expect of somewhere of that size.

The post Helgoland researchers seek microplastics and microfibres in the sea appeared first on Physics World.

In this week's episode of Space Minds, we explore how microgravity accelerates aging—and guest Dr. Nadia Maroouf shares her insights on the phenomenon and what she’s doing to help protect astronauts.

The post Why space wrecks the human body appeared first on SpaceNews.

In this episode of the Physics World Weekly podcast we explore the career opportunities open to physicists and engineers looking to work within healthcare – as medical physicists or clinical engineers.

Physics World’s Tami Freeman is in conversation with two early-career physicists working in the UK’s National Health Service (NHS). They are Rachel Allcock, a trainee clinical scientist at University Hospitals Coventry and Warwickshire NHS Trust, and George Bruce, a clinical scientist at NHS Greater Glasgow and Clyde. We also hear from Chris Watt, head of communications and public affairs at IPEM, about the new IPEM careers guide.

This episode is supported by Radformation, which is redefining automation in radiation oncology with a full suite of tools designed to streamline clinical workflows and boost efficiency. At the centre of it all is AutoContour, a powerful AI-driven autocontouring solution trusted by centres worldwide.

The post Exploring careers in healthcare for physicists and engineers appeared first on Physics World.

Dawn Aerospace has announced the first order for its Aurora suborbital spaceplane, signing a deal to fly the vehicle from Oklahoma.

The post Dawn Aerospace sells Aurora suborbital spaceplane to Oklahoma appeared first on SpaceNews.

Four-year-old small satellite maker Muon Space announced $89.5 million in new funding June 12 to scale production and acquire propulsion startup Starlight Engines, bringing a potential supply chain bottleneck in-house.

The post Muon Space raises $90 million to scale satellite production and acquire propulsion startup appeared first on SpaceNews.

Shares in Voyager Technologies soared in their public debut June 11 as the company plans to use the proceeds to support work in defense and space.

The post Voyager looks to expanded defense and space opportunities as a public company appeared first on SpaceNews.

Jack Harris, a quantum physicist at Yale University in the US, has a fascination with islands. He grew up on Martha’s Vineyard, an island just south of Cape Cod on the east coast of America, and believes that islands shape a person’s thinking. “Your world view has a border – you’re on or you’re off,” Harris said on a recent episode of the Physics World Stories podcast.

It’s perhaps not surprising, then, that Harris is one of the main organizers of a five-day conference taking place this week on Helgoland, where Werner Heisenberg discovered quantum mechanics exactly a century ago. Heisenberg had come to the tiny, windy, pollen-free island, which lies 50 km off the coast of Germany, in June 1925, to seek respite from the hay fever he was suffering from in Göttingen.

According to Heisenberg’s 1971 book Physics and Beyond, he supposedly made his breakthrough early one morning that month. Unable to sleep, Heisenberg left his guest house just before daybreak and climbed a tower at the top of the island’s southern headland. As the Sun rose, Heisenberg pieced together the curious observations of frequencies of light that materials had been seen to absorb and emit.

While admitting that the real history of the episode isn’t as simple as Heisenberg made out, Harris believes it’s nevertheless a “very compelling” story. “It has a place and a time: an actual, clearly defined, quantized discrete place – an island,” Harris says. “This is a cool story to have as part of the fabric of [the physics] community.” Hardly surprising, then, that more than 300 physicists, myself included, have travelled from across the world to the Helgoland 2025 meeting.

Much time has been spent so far at the event discussing the fundamentals of quantum mechanics, which might seem a touch self-indulgent and esoteric given the burgeoning (and financially lucrative) applications of the subject. Do we really need to concern ourselves with, say, non-locality, the meaning of measurement, or the nature of particles, information and randomness?

Why did we need to hear from Juan Maldacena from the Institute for Advanced Study in Princeton getting so excited talking about information loss and black holes? (Fun fact: a “white” black hole the size of a bacterium would, he claimed, be as hot as the Sun and emit so much light we could see it with the naked eye.)

But the fundamental questions are fascinating in their own right. What’s more, if we want to build, say, a quantum computer, it’s not just a technical and engineering endeavour. “To make it work you have to absorb a lot of the foundational topics of quantum mechanics,” says Harris, pointing to challenges such as knowing what kinds of information alter how a system behaves. “We’re at a point where real-world practical things like quantum computing, code breaking and signal detection hinge on our ability to understand the foundational questions of quantum mechanics.”

The post Quantum island: why Helgoland is a great spot for fundamental thinking appeared first on Physics World.

The Colorado-based company announced the formal launch of Sierra Space Defense

The post Sierra Space doubles down on defense appeared first on SpaceNews.