Superconducting innovation: SQMS shapes up for scalable success in quantum computing

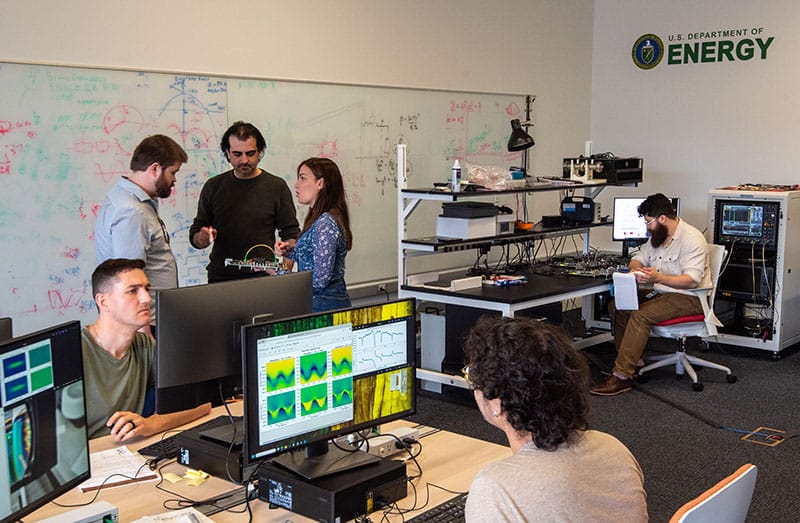

Developing quantum computing systems with high operational fidelity, enhanced processing capabilities plus inherent (and rapid) scalability is high on the list of fundamental problems preoccupying researchers within the quantum science community. One promising R&D pathway in this regard is being pursued by the Superconducting Quantum Materials and Systems (SQMS) National Quantum Information Science Research Center at the US Department of Energy’s Fermi National Accelerator Laboratory, the pre-eminent US particle physics facility on the outskirts of Chicago, Illinois.

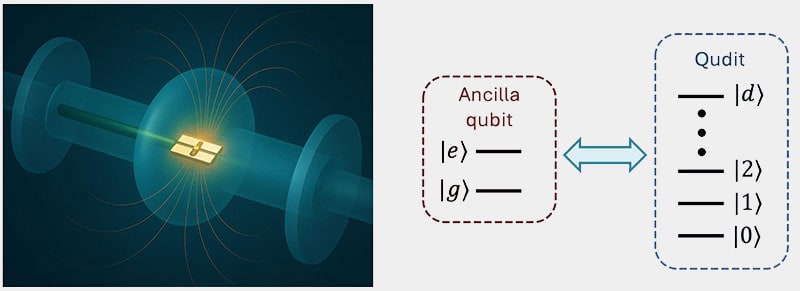

The SQMS approach involves placing a superconducting qubit chip (held at temperatures as low as 10–20 mK) inside a three-dimensional superconducting radiofrequency (3D SRF) cavity – a workhorse technology for particle accelerators employed in high-energy physics (HEP), nuclear physics and materials science. In this set-up, it becomes possible to preserve and manipulate quantum states by encoding them in microwave photons (modes) stored within the SRF cavity (which is also cooled to the millikelvin regime).

Put another way: by pairing superconducting circuits and SRF cavities at cryogenic temperatures, SQMS researchers create environments where microwave photons can have long lifetimes and be protected from external perturbations – conditions that, in turn, make it possible to generate quantum states, manipulate them and read them out. The endgame is clear: reproducible and scalable realization of such highly coherent superconducting qubits opens the way to more complex and scalable quantum computing operations – capabilities that, over time, will be used within Fermilab’s core research programme in particle physics and fundamental physics more generally.

Fermilab is in a unique position to turn this quantum technology vision into reality, given its decades of expertise in developing high-coherence SRF cavities. In 2020, for example, Fermilab researchers demonstrated record coherence lifetimes (of up to two seconds) for quantum states stored in an SRF cavity.

“It’s no accident that Fermilab is a pioneer of SRF cavity technology for accelerator science,” explains Sir Peter Knight, senior research investigator in physics at Imperial College London and an SQMS advisory board member. “The laboratory is home to a world-leading team of RF engineers whose niobium superconducting cavities routinely achieve very high quality factors (Q) from 10¹⁰ to above 10¹¹ – figures of merit that can lead to dramatic increases in coherence time.”
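As a rough illustration of why the quality factor matters, the energy stored in a resonator mode decays with a time constant τ = Q/(2πf), where f is the mode frequency. The sketch below evaluates that textbook relation for the range of Q that Knight quotes. The 1.3 GHz frequency is an assumption made purely for illustration (the article does not state one), and the function name is hypothetical.

```python
import math


def photon_lifetime_s(quality_factor: float, frequency_hz: float) -> float:
    """Energy decay time tau = Q / (2*pi*f) of a single resonator mode."""
    if quality_factor <= 0 or frequency_hz <= 0:
        raise ValueError("quality factor and frequency must be positive")
    return quality_factor / (2.0 * math.pi * frequency_hz)


# ASSUMPTION: the mode frequency is not given in the article; 1.3 GHz is
# used here only to show the scaling of lifetime with Q.
ASSUMED_FREQUENCY_HZ = 1.3e9

for q in (1e10, 1e11):
    tau = photon_lifetime_s(q, ASSUMED_FREQUENCY_HZ)
    print(f"Q = {q:.0e} -> photon lifetime of roughly {tau:.2f} s")
```

At the assumed frequency, Q = 10¹⁰ corresponds to a lifetime of a little over a second. Because the relation is linear, a tenfold gain in Q buys a tenfold gain in storage time, all else being equal.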

Moreover, Fermilab offers plenty of intriguing HEP use-cases where quantum computing platforms could yield significant research dividends. In theoretical studies, for example, the main opportunities relate to the evolution of quantum states, lattice-gauge theory, neutrino oscillations and quantum field theories in general. On the experimental side, quantum computing efforts are being lined up for jet and track reconstruction during high-energy particle collisions; also for the extraction of rare signals and for exploring exotic physics beyond the Standard Model.

Cavities and qubits

SQMS has already notched up some notable breakthroughs on its quantum computing roadmap, not least the demonstration of chip-based transmon qubits (a type of charge qubit circuit exhibiting decreased sensitivity to noise) showing systematic and reproducible improvements in coherence, record-breaking lifetimes of over a millisecond, and reductions in performance variation.

Key to success here is an extensive collaborative effort in materials science and the development of novel chip fabrication processes, with the resulting transmon qubit ancillas shaping up as the “nerve centre” of the 3D SRF cavity-based quantum computing platform championed by SQMS. What’s in the works is essentially a unique quantum analogue of a classical computing architecture: the transmon chip providing a central logic-capable quantum information processor and microwave photons (modes) in the 3D SRF cavity acting as the random-access quantum memory.

As for the underlying physics, the coupling between the transmon qubit and discrete photon modes in the SRF cavity allows for the exchange of coherent quantum information, as well as enabling quantum entanglement between the two. “The pay-off is scalability,” says Alexander Romanenko, a senior scientist at Fermilab who leads the SQMS quantum technology thrust. “A single logic-capable processor qubit, such as the transmon, can couple to many cavity modes acting as memory qubits.”

In principle, a single transmon chip could manipulate more than 10 qubits encoded inside a single-cell SRF cavity, substantially streamlining the number of microwave channels required for system control and manipulation as the number of qubits increases. “What’s more,” adds Romanenko, “instead of using quantum states in the transmon [coherence times just crossed into milliseconds], we can use quantum states in the SRF cavities, which have higher quality factors and longer coherence times [up to two seconds].”
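The exchange of quantum information between a qubit and a cavity mode can be pictured with the simplest textbook model: a two-level system resonantly coupled to one mode, sharing a single excitation. In that model the excitation oscillates between the two at a rate set by the coupling strength g. The sketch below uses the closed-form solution of that model. It is not a description of the SQMS control scheme, and the coupling value and function names are hypothetical.

```python
import math


def cavity_population(g_rad_per_s: float, t_s: float) -> float:
    """Probability that the excitation sits in the cavity mode at time t.

    Resonant two-level-plus-one-mode model, single excitation, no loss.
    Starting with the excitation in the qubit, the amplitudes are
    cos(g*t) for the qubit and -i*sin(g*t) for the cavity.
    """
    return math.sin(g_rad_per_s * t_s) ** 2


def swap_time_s(g_rad_per_s: float) -> float:
    """Time for a complete transfer from qubit to cavity: g*t = pi/2."""
    return math.pi / (2.0 * g_rad_per_s)


# ASSUMPTION: coupling of 1 MHz (times 2*pi), chosen only for illustration.
g = 2.0 * math.pi * 1.0e6

t_swap = swap_time_s(g)
print(f"full swap after {t_swap * 1e6:.2f} us")
print(f"cavity population at full swap: {cavity_population(g, t_swap):.3f}")
# Stopping halfway leaves the excitation shared equally between qubit and
# cavity, which in this model is a maximally entangled state of the two.
print(f"cavity population at half swap: {cavity_population(g, t_swap / 2):.3f}")
```

The same picture extends to many modes: one processor qubit can, in this idealized model, be brought into exchange with each memory mode in turn.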

In terms of next steps, continuous improvement of the ancilla transmon coherence times will be critical to ensure high-fidelity operation of the combined system – with materials breakthroughs likely to be a key rate-determining step. “One of the unique differentiators of the SQMS programme is this ‘all-in’ effort to understand and get to grips with the fundamental materials properties that lead to losses and noise in superconducting qubits,” notes Knight. “There are no short-cuts: wide-ranging experimental and theoretical investigations of materials physics – per the programme implemented by SQMS – are mandatory for scaling superconducting qubits into industrial and scientifically useful quantum computing architectures.”

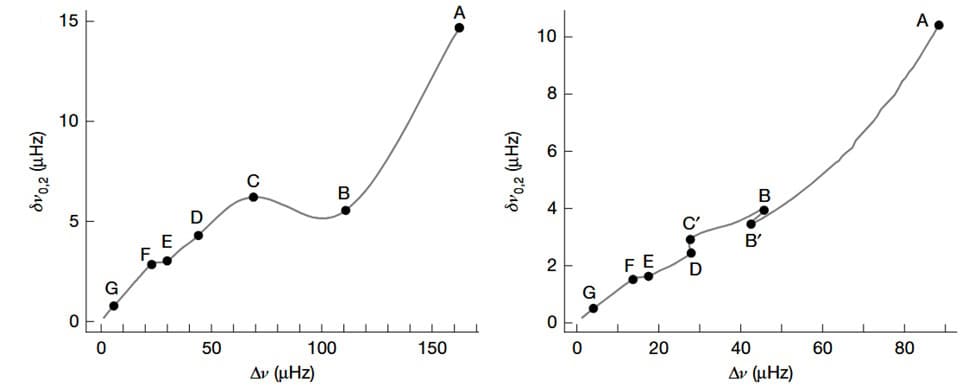

Laying down a marker, SQMS researchers recently achieved a major milestone in superconducting quantum technology by developing the longest-lived multimode superconducting quantum processor unit (QPU) ever built (coherence lifetime >20 ms). Their processor is based on a two-cell SRF cavity and leverages its exceptionally high quality factor (~10¹⁰) to preserve quantum information far longer than conventional superconducting platforms (typically 1 or 2 ms for rival best-in-class implementations).

Coupled with a superconducting transmon, the two-cell SRF module enables precise manipulation of cavity quantum states (photons) using ultrafast control/readout schemes (allowing for approximately 10⁴ high-fidelity operations within the qubit lifetime). “This represents a significant achievement for SQMS,” claims Yao Lu, an associate scientist at Fermilab and co-lead for QPU connectivity and transduction in SQMS. “We have demonstrated the creation of high-fidelity [>95%] quantum states with large photon numbers [20 photons] and achieved ultra-high-fidelity single-photon entangling operations between modes [>99.9%]. It’s work that will ultimately pave the way to scalable, error-resilient quantum computing.”
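The two headline figures, a coherence lifetime above 20 ms and roughly 10⁴ operations within that lifetime, together imply a time budget per operation. A back-of-the-envelope sketch follows. It assumes the operations run back to back and that 20 ms is the relevant lifetime, and the function name is hypothetical.

```python
def time_per_operation_s(lifetime_s: float, n_operations: float) -> float:
    """Average time available per operation if all fit within one lifetime."""
    if lifetime_s <= 0 or n_operations <= 0:
        raise ValueError("lifetime and operation count must be positive")
    return lifetime_s / n_operations


lifetime_s = 20e-3    # lower bound quoted for the multimode QPU
n_operations = 1e4    # approximate operation count quoted in the article

budget_s = time_per_operation_s(lifetime_s, n_operations)
print(f"about {budget_s * 1e6:.1f} microseconds per operation")

# For comparison, the same operation time against the 1-2 ms lifetimes
# quoted for rival platforms would allow far fewer operations.
for rival_lifetime_s in (1e-3, 2e-3):
    print(f"{rival_lifetime_s * 1e3:.0f} ms lifetime -> "
          f"{rival_lifetime_s / budget_s:.0f} operations")
```

On these assumptions each operation takes around two microseconds. The comparison at the end is only a scaling argument: it holds the operation time fixed, which real rival platforms need not share.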

Fast scaling with qudits

There’s no shortage of momentum either, with these latest breakthroughs laying the foundations for SQMS “qudit-based” quantum computing and communication architectures. A qudit is a multilevel quantum unit that can occupy more than two states and, in turn, hold a larger information density – i.e. instead of working with a large number of qubits to scale information processing capability, it may be more efficient to maintain a smaller number of qudits (with each holding a greater range of values for optimized computations).
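The information-density argument can be made concrete with a little counting. A qudit with d levels spans the same state space as log₂(d) qubits, so m qudits cover n qubits once dᵐ ≥ 2ⁿ. The sketch below works through that arithmetic. The function names and the example level counts are illustrative assumptions, not SQMS design figures.

```python
import math


def qubits_equivalent(levels: int) -> float:
    """Number of qubits whose state space matches one d-level qudit."""
    if levels < 2:
        raise ValueError("a qudit needs at least two levels")
    return math.log2(levels)


def qudits_needed(n_qubits: int, levels: int) -> int:
    """Smallest m such that levels**m >= 2**n_qubits (integer arithmetic)."""
    if levels < 2 or n_qubits < 0:
        raise ValueError("need levels >= 2 and n_qubits >= 0")
    target = 2 ** n_qubits
    count, dimension = 0, 1
    while dimension < target:
        dimension *= levels
        count += 1
    return count


# Illustrative level counts only.
for d in (2, 4, 8, 32):
    print(f"d = {d:2d}: one qudit ~ {qubits_equivalent(d):.0f} qubits; "
          f"{qudits_needed(10, d)} qudits cover 10 qubits")
```

Counting state-space dimension says nothing about how hard each qudit is to control or read out, which is where the hardware effort described in the article comes in.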

Scale-up to a multiqudit QPU system is already underway at SQMS via several parallel routes (and all with a modular computing architecture in mind). In one approach, coupler elements and low-loss interconnects integrate a nine-cell multimode SRF cavity (the memory) to a two-cell SRF cavity quantum processor. Another iteration uses only two-cell modules, while yet another option exploits custom-designed multimodal cavities (10+ modes) as building blocks.

One thing is clear: with the first QPU prototypes now being tested, verified and optimized, SQMS will soon move to a phase in which many of these modules will be assembled and operated together. By extension, the SQMS effort also encompasses crucial developments in control systems and microwave equipment, where many devices must be synchronized optimally to encode and analyse quantum information in the QPUs.

On the algorithmic side, qudit encodings mean that complex algorithms can benefit from fewer required gates and reduced circuit depth. What’s more, for many simulation problems in HEP and other fields, it’s evident that multilevel systems (qudits) – rather than qubits – provide a more natural representation of the physics in play, making simulation tasks significantly more accessible. The work of encoding several such problems into qudits – including lattice-gauge-theory calculations and others – is similarly ongoing within SQMS.

Taken together, this massive R&D undertaking – spanning quantum hardware and quantum algorithms – can only succeed with a “co-design” approach across strategy and implementation: from identifying applications of interest to the wider HEP community to full deployment of QPU prototypes. Co-design is especially suited to these efforts as it demands sustained alignment of scientific goals with technological implementation to drive innovation and societal impact.

In addition to their quantum computing promise, these cavity-based quantum systems will play a central role as both the “adapters” and the low-loss channels at elevated temperatures for interconnecting chip or cavity-based QPUs hosted in different refrigerators. These interconnects will provide an essential building block for the efficient scale-up of superconducting quantum processors into larger quantum data centres.

“The SQMS collaboration is ploughing its own furrow – in a way that nobody else in the quantum sector really is,” says Knight. “Crucially, the SQMS partners can build stuff at scale by tapping into the phenomenal engineering strengths of the National Laboratory system. Designing, commissioning and implementing big machines has been part of the ‘day job’ at Fermilab for decades. In contrast, many quantum computing start-ups must scale their R&D infrastructure and engineering capability from a far-less-developed baseline.”

The last word, however, goes to Romanenko. “Watch this space,” he concludes, “because SQMS is on a roll. We don’t know which quantum computing architecture will ultimately win out, but we will ensure that our cavity-based quantum systems will play an enabling role.”


The post Superconducting innovation: SQMS shapes up for scalable success in quantum computing appeared first on Physics World.