A Secretive US Space Plane Will Soon Test Quantum Navigation Technology

Chinese launch startup iSpace successfully sent a satellite into orbit early Tuesday on a solid-fueled rocket, a year after its previous launch failed.

The post China’s iSpace returns to flight with successful orbital solid rocket launch appeared first on SpaceNews.

The Canadian Space Agency awarded initial study contracts July 29 for a lunar utility rover as part of the country’s push to deepen its role in the U.S.-led Artemis program.

The post Canada awards study contracts for lunar utility rover appeared first on SpaceNews.

The technology can simulate adversary spacecraft behaviors in physics-accurate orbital environments

The post Slingshot unveils AI agent for Space Force training appeared first on SpaceNews.

NASA has paused plans to procure a set of ground stations intended to reduce the burden on the agency’s Deep Space Network, citing uncertain budgets.

The post NASA pauses acquisition of lunar communications ground stations appeared first on SpaceNews.

To paraphrase Jane Austen, it is a truth universally acknowledged that a research project in possession of large datasets must be in want of artificial intelligence (AI).

The first time I really became aware of AI’s potential was in the early 2000s. I was one of many particle physicists working at the Collider Detector at Fermilab (CDF) – one of two experiments at the Tevatron, which was the world’s largest and highest energy particle collider at the time. I spent my days laboriously sifting through data looking for signs of new particles and gossiping about all things particle physics.

CDF was a large international collaboration, involving around 60 institutions from 15 countries. One of the groups involved was at the University of Karlsruhe (now the Karlsruhe Institute of Technology) in Germany, and they were trying to identify the matter and antimatter versions of a beauty quark from the collider’s data. This was notoriously difficult – backgrounds were high, signals were small, and data volumes were massive. It was also the sort of dataset where for many variables, there was only a small difference between signal and background.

In the face of such data, Michael Feindt, a professor in the group, developed a neural-network algorithm to tackle the problem. This type of algorithm is modelled on the way the brain learns by combining information from many neurons, and it can be trained to recognize patterns in data. Feindt’s neural network, trained on suitable samples of signal and background, was able to more easily distinguish between the two for the data’s variables, and combine them in the most effective way to identify matter and antimatter beauty quarks.
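To illustrate why combining many weakly discriminating variables helps, here is a minimal sketch. It is not Feindt's algorithm: it uses a plain logistic regression on toy Gaussian data as a stand-in, and the number of variables, the size of the signal shift and the training settings are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N_VARS, SHIFT = 20, 0.3  # hypothetical: 20 variables, each separating only weakly

def make_sample(n_events):
    """Toy events: label 1 = signal, 0 = background; signal is shifted slightly."""
    labels = rng.integers(0, 2, n_events)
    features = rng.normal(size=(n_events, N_VARS)) + SHIFT * labels[:, None]
    return features, labels

def train_logistic(x, y, steps=500, lr=0.1):
    """Gradient-descent logistic regression (a stand-in for a neural network)."""
    w, b = np.zeros(x.shape[1]), 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(x @ w + b)))
        w -= lr * x.T @ (p - y) / len(y)
        b -= lr * np.mean(p - y)
    return w, b

x_train, y_train = make_sample(4000)
x_test, y_test = make_sample(4000)
w, b = train_logistic(x_train, y_train)

# Accuracy when all variables are combined by the trained model
combined_acc = np.mean(((x_test @ w + b) > 0).astype(int) == y_test)
# Best accuracy achievable by cutting on any one variable alone
single_acc = max(
    np.mean((x_test[:, j] > SHIFT / 2).astype(int) == y_test) for j in range(N_VARS)
)
print(f"best single variable: {single_acc:.2f}, combined: {combined_acc:.2f}")
```

In this toy setting no single variable separates the two classes much better than a coin toss, but the weighted combination does noticeably better, which is the basic effect the paragraph above describes.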

At the time, this work was interesting simply because it was a new way of trying to extract a small signal from a very large background. But the neural network turned out to be a key development that underpinned many of CDF’s physics results, including the landmark observation of a Bs meson (a particle formed of an antimatter beauty quark and a strange quark) oscillating between its matter and antimatter forms.

Versions of the algorithm have since been used elsewhere, including by physicists on three of the four main experiments at CERN’s Large Hadron Collider (LHC). In every case, the approach allowed researchers to extract more information from less data, and in doing so, accelerated the pace of scientific advancement.

What was even more interesting is that the neural-network approach didn’t just benefit particle physics. There was a brief foray into applying the network to hedge fund management and predicting car insurance rates. A company, Phi-T (later renamed Blue Yonder), was spun out from the University of Karlsruhe and applied the algorithm to optimizing supply-chain logistics. After a few acquisitions, the company is now award-winning and global. The neural network, however, remained free for particle physicists to use.

Many types of neural networks and other AI approaches are now routinely used to acquire and analyse particle physics data. In fact, our datasets are so large that we absolutely need their computational help, and their deployment has moved from novelty to necessity.

To give you a sense of just how much information we are talking about, during the next run period of the LHC, its experiments are expected to produce about 2000 petabytes (2 × 10^18 bytes) of real and simulated data per year that researchers will need to analyse. This dataset is almost 10 times larger than a year’s worth of videos uploaded to YouTube, 30 times larger than Google’s annual webpage datasets, and over a third as big as a year’s worth of Outlook e-mail traffic. These are dataset sizes very much in want of AI to analyse.

Particle physics may have been an early adopter, but AI has now spread throughout physics. This shouldn’t be too surprising. Physics is data-heavy and computationally intensive, so it benefits from the step up in speed and computational complexity to analyse datasets, simulate physical systems, and automate the control of complicated experiments.

For example, AI has been used to classify gravitational-lensing images in astronomical surveys. It has also helped researchers interpret the inferred distributions of matter in terms of different models of dark energy. Indeed, in 2024 it improved Dark Energy Survey results by an amount equivalent to quadrupling their data sample (see box “An AI universe”).

AI has even helped design new materials. In 2023 Google DeepMind discovered millions of new crystals that could power future technologies, a feat estimated to be equivalent to 800 years of research. And there are many other advances – AI is a formidable tool for accelerating scientific progress.

But AI is not limited to complex experiments. In fact, we all use it every day. AI powers our Internet searches, helps us understand concepts, and even leads us to misunderstand things by feeding us false facts. Nowadays, AI pervades every aspect of our lives and presents us with challenges and opportunities whenever it appears.

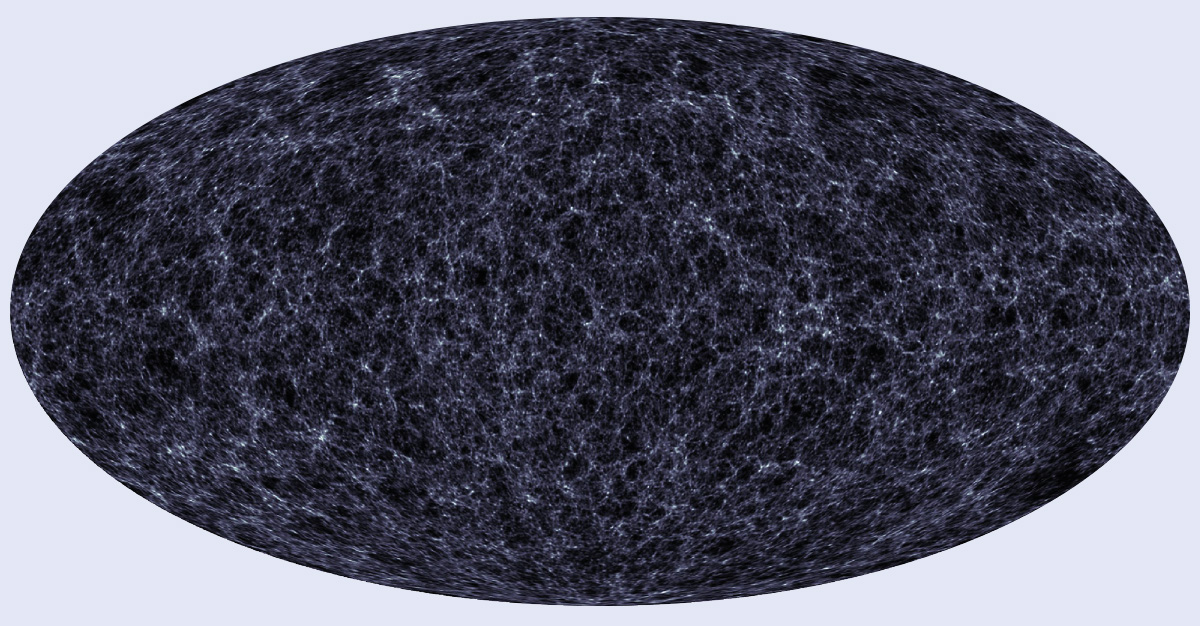

AI approaches have been used by the Dark Energy Survey (DES) collaboration to investigate dark energy, the mysterious phenomenon thought to drive the expansion of the universe.

DES researchers had previously mapped the distribution of matter in the universe by relating distortions in light from galaxies to the gravitational attraction of matter the light passes through before being measured. The distribution depends on visible and dark matter (which draws galaxies closer), and dark energy (which drives galaxies apart).

In a 2024 study researchers used AI techniques to simulate a series of matter distributions – each based on a different value for variables describing dark matter, dark energy and other cosmological parameters that describe the universe. They then compared these simulated findings with the real matter distribution. By determining which simulated distributions were consistent with the data, values for the corresponding dark energy parameters could be extracted. Because the AI techniques allowed more information to be used to make the comparison than would otherwise be possible, the results are more precise. Researchers were able to improve the precision by a factor of two, a feat equivalent to using four times as much data with previous methods.
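The link between "a factor of two in precision" and "four times as much data" follows from the usual statistical scaling, in which the uncertainty shrinks as one over the square root of the sample size. The sketch below assumes that purely statistical scaling (no systematic floor); the function names are illustrative only.

```python
import math

def relative_uncertainty(n_events: float) -> float:
    """Statistical uncertainty, up to a constant, assuming 1/sqrt(N) scaling."""
    return 1.0 / math.sqrt(n_events)

def equivalent_data_factor(precision_gain: float) -> float:
    """How much more data would give the same gain in precision."""
    return precision_gain ** 2

# Halving the uncertainty corresponds to quadrupling the sample
print(equivalent_data_factor(2.0))
print(relative_uncertainty(1000) / relative_uncertainty(4000))
```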

It’s this mix of challenge and opportunity that makes now the right time to examine the relationship between physics and AI, and what each can do for the other. In fact, the Institute of Physics (IOP) has recently published a “pathfinder” study on this very subject, on which I acted as an adviser. Pathfinder studies explore the landscape of a topic, identifying the directions that a subsequent, deeper and more detailed “impact” study should explore.

This current pathfinder study – Physics and AI: a Physics Community Perspective – is based on an IOP member survey that examined attitudes towards AI and its uses, and an expert workshop that discussed future potential for innovation. The resulting report, which came out in April 2025, revealed just how widespread the use of AI is in physics.

About two thirds of the 700 people who replied to the survey said they had used AI to some degree, and every physics area contained a good fraction of respondents who had at least some level of familiarity with it. Most often this experience involved different machine-learning approaches or generative AI, but respondents had also worked with AI ethics and policy, computer vision and natural language processing. This is a testament to the many uses we can find for AI, from very specific pattern recognition and image classification tasks, to understanding its wider implications and regulatory needs.

Although it is clear that AI can really accelerate our research, we have to be careful. As many respondents to the survey pointed out, AI is a powerful aid, but simply using it as a black box and imagining it does the right thing is dangerous. AI tools and the challenges we put them to are complex – we need to ensure we understand what they are doing and how well they are doing it to have confidence in their answers.

There are any number of cautionary tales about the consequences of using AI badly and obtaining a distorted outcome. A 2017 master’s thesis by Joy Adowaa Buolamwini from the Massachusetts Institute of Technology (MIT) famously analysed three commercially available facial-recognition technologies, and uncovered gender and racial bias in the algorithms due to incomplete training sets. The programmes had been trained on images predominantly consisting of white men, which led to women of colour being misidentified nearly 35% of the time, while white men were correctly classified 99% of the time. Buolamwini’s findings prompted IBM and Microsoft to revise and correct their algorithms.

Even estimating the uncertainty associated with the use of machine learning is fraught with complication. Training data are never perfect. For instance, simulated data may not perfectly describe equipment response in an experiment, or – as with the example above – crucial processes occurring in real data may be missed if the training dataset is incomplete. And the performance of an algorithm is never perfect; there may be uncertainties associated with the way the algorithm was trained and its parameters chosen.

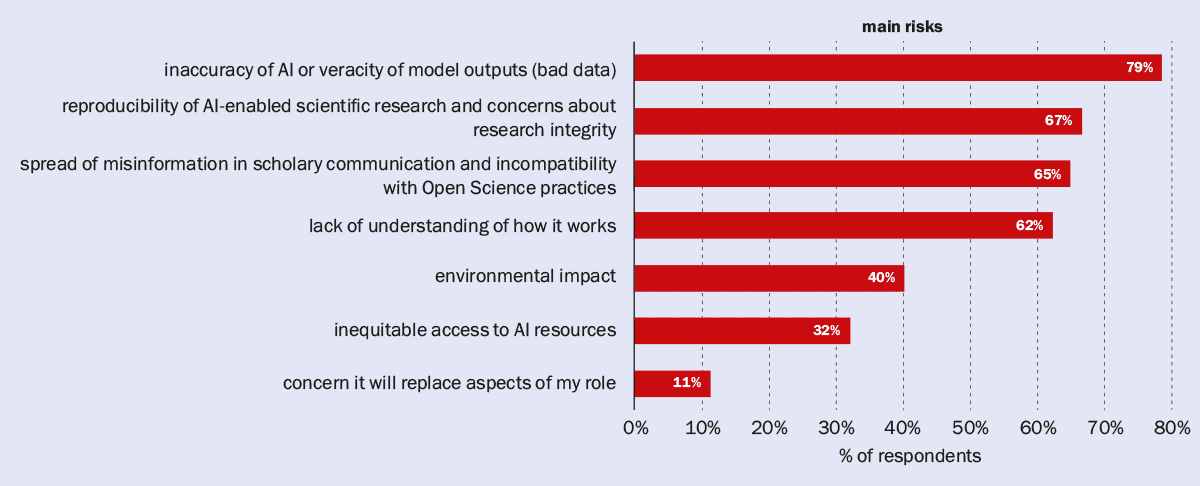

Indeed, 69% of respondents to the pathfinder survey felt that AI poses multiple risks to physics, and one of the main concerns was inaccuracy due to poor or bad training data (figure 1). It’s bad enough getting a physics result wrong and discovering a particle that isn’t really there, or missing a new particle that is. Imagine the risks if poorly understood AI approaches are applied to healthcare decisions when interpreting medical images, or in finance where investments are made on the back of AI-driven model suggestions. Yet despite the potential consequences, the AI approaches in these real-world cases are not always well calibrated and can have ill-defined uncertainties.

The Institute of Physics pathfinder survey asked its members, “Which are your potential greatest concerns regarding AI in physics research and innovation?” Respondents were allowed to select multiple answers, and the prevailing worry was about the inaccuracy of AI.

New approaches are being considered in physics that try to separate out the uncertainties associated with simulated training data from those related to the performance of the algorithm. However, even this is not straightforward. A 2022 paper by Aishik Ghosh and Benjamin Nachman from Lawrence Berkeley National Laboratory in the US (Eur. Phys. J. C 82 46) notes that devising a procedure to be insensitive to the uncertainties you think are present in training data is not the same as having a procedure that is insensitive to the actual uncertainties that are really there. If that’s true, not only is measurement uncertainty underestimated but, depending on the differences between training data and reality, false results can be obtained.

The moral is that AI can and does advance physics, but we need to invest the time to use it well so that our results are robust. And if we do that, others can benefit from our work too.

Physics is a field where accuracy is crucial, and we are as rigorous as we can be about understanding bias and uncertainty in our results. In fact, the pathfinder report highlights that our methodologies to quantify uncertainty can be used to advance and strengthen AI methods too. This is critical for future innovation and to improve trust in AI use.

Advances are already under way. One development, first introduced in 2017, is physics-informed neural networks. These impose consistency with physical laws in addition to using training data relevant to their particular applications. Imposing physical laws can help compensate for limited training data and prevents unphysical solutions, which in turn improves accuracy. Although relatively new, it’s a rapidly developing field, finding applications in sectors as diverse as computational fluid dynamics, heat transfer, structural mechanics, option pricing and blood pressure estimation.
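The idea of adding a physics term to the fit can be sketched in a few lines. This is not a neural network: as a deliberately simple stand-in, it fits a polynomial by least squares, and the "physical law" is an assumed decay equation dy/dt = -K*y. The decay rate, polynomial degree, data points and penalty weight are all hypothetical choices for illustration.

```python
import numpy as np

K = 1.0      # decay rate in dy/dt = -K*y (illustrative assumption)
DEGREE = 6   # polynomial model standing in for a neural network

def basis(t):
    """Rows [1, t, t^2, ...], so basis(t) @ coeffs evaluates the model."""
    return np.vander(t, DEGREE + 1, increasing=True)

def basis_derivative(t):
    """Rows that evaluate the model's first derivative."""
    d = np.zeros((t.size, DEGREE + 1))
    for j in range(1, DEGREE + 1):
        d[:, j] = j * t ** (j - 1)
    return d

def fit(t_data, y_data, t_physics=None, weight=1.0):
    """Least-squares fit; optionally penalize the residual of dy/dt + K*y = 0."""
    rows, targets = [basis(t_data)], [y_data]
    if t_physics is not None:
        residual = basis_derivative(t_physics) + K * basis(t_physics)
        rows.append(np.sqrt(weight) * residual)
        targets.append(np.zeros(t_physics.size))
    coeffs, *_ = np.linalg.lstsq(np.vstack(rows), np.concatenate(targets), rcond=None)
    return coeffs

# Only three training points, all at early times
t_data = np.array([0.0, 0.25, 0.5])
y_data = np.exp(-K * t_data)
t_grid = np.linspace(0.0, 2.0, 201)
truth = np.exp(-K * t_grid)

data_only = fit(t_data, y_data)
physics_informed = fit(t_data, y_data, t_physics=np.linspace(0.0, 2.0, 20))

err_data_only = np.max(np.abs(basis(t_grid) @ data_only - truth))
err_physics = np.max(np.abs(basis(t_grid) @ physics_informed - truth))
print(f"max error, data only: {err_data_only:.3g}")
print(f"max error, with physics penalty: {err_physics:.3g}")
```

With so little training data the unconstrained fit is free to drift away from the true curve outside the sampled region, while the physics penalty keeps the model close to a valid solution across the whole interval.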

Another development is in the use of Bayesian neural networks, which incorporate uncertainty estimates into their predictions to make results more robust and meaningful. The approach is being trialled in decision-critical fields such as medical diagnosis and stock market prediction.

But this is not new to physics. The neural network developed at CDF in the 2000s was an early Bayesian neural network, developed to be robust against outliers in data, avoid issues in training caused by statistical fluctuations, and to have a sound probabilistic basis to interpret results. All the features, in fact, that make the approach invaluable for analysing many other systems outside physics.

So physics benefits from AI and can drive advances in it too. This is a unique relationship that needs wider recognition, and this is a good moment to bring it to the fore. The UK government has said it sees AI as “the defining opportunity of our generation”, driving growth and innovation, and that it wants the UK to become a global AI superpower. Action plans and strategies are already being implemented. Physics has a unique perspective to offer help and make this happen. It’s time for us to include it in the conversation.

In the words of the pathfinder report, we need to articulate and showcase what AI can do for physics and what physics can do for AI. Let’s make this the start of putting physics on the AI map for everyone.

Artificial intelligence (AI): Intelligent behaviour exhibited by machines. But the definition of intelligence is controversial so a more general description of AI that would satisfy most is: the behaviour of a system that adapts its actions in response to its environment and prior experience.

Machine learning: As a group of approaches to endow a machine with artificial intelligence, machine learning is itself a broad category. In essence, it is the process by which a system learns from a training set so that it can deliver autonomously an appropriate response to new data.

Artificial neural networks: A subset of machine learning in which the learning mechanism is modelled on the behaviour of a biological brain. Input signals are modified as they pass through networked layers of neurons before emerging as an output. Experience is encoded by varying the strength of interactions between neurons in the network.

Training data: A set of real or simulated data used to train a machine-learning algorithm to recognize patterns in data indicative of signal or background.

Generative AI: A type of machine-learning algorithm that creates new content, such as images or text, based on the data the algorithm was trained on.

Computer vision: A branch of AI that analyses, interprets and extracts meaningful data from images to identify and classify objects and patterns.

Natural language processing: A branch of AI that analyses, interprets and generates human language.

The post How AI can help (and hopefully not hinder) physics appeared first on Physics World.

A new photodetector made up of vertically stacked perovskite-based light absorbers can produce real photographic images, potentially challenging the dominance of silicon-based technologies in this sector. The detector is the first to exploit the concept of active optical filtering, and its developers at ETH Zurich and Empa in Switzerland say it could be used to produce highly sensitive, artefact-free images with much improved colour fidelity compared to conventional sensors.

The human eye uses individual cone cells in the retina to distinguish between red, green and blue (RGB) colours. Imaging devices such as those found in smartphones and digital cameras are designed to mimic this capability. However, because their silicon-based sensors absorb light over the entire visible spectrum, they must split the light into its RGB components. Usually, they do this using colour-filter arrays (CFAs) positioned on top of a monochrome light sensor. Then, once the device has collected the raw data, complex algorithms are used to reconstruct a colour image.

Although this approach is generally effective, it is far from ideal. One drawback is the presence of “de-mosaicing” artefacts from the reconstruction process. Another is large optical losses, as pixels for red light contain filters that block green and blue light, while those for green block red and blue, and so on. This means that each pixel in the image sensor only receives about a third of the incident light spectrum, greatly reducing the efficacy of light capture.

A team led by ETH Zurich materials scientist Maksym Kovalenko has now developed an alternative image sensor based on lead halide perovskites. These crystalline semiconductor materials have the chemical formula APbX3, where A is a formamidinium, methylammonium or caesium cation and X is a halide such as chlorine, bromine or iodine.

Crucially, the composition of these materials determines which wavelengths of light they will absorb. For example, when they contain more iodide ions, they absorb red light, while materials containing more bromide or chloride ions absorb green or blue light, respectively. Stacks of these materials can thus be used to absorb these wavelengths selectively without the need for filters, since each material layer remains transparent to the other colours.

The idea of vertically stacked detectors that filter each other optically has been discussed since at least 2017, including in early work from the ETH-Empa group, says team member Sergey Tsarev. “The benefits of doing this were clear, but the technical complexity discouraged many researchers,” Tsarev says.

To build their sensor, the ETH-Empa researchers had to fabricate around 30 functional thin-film layers on top of each other, without damaging prior layers. “It’s a long and often unrewarding process, especially in today’s fast-paced research environment where quicker results are often prioritized,” Tsarev explains. “This project took us nearly three years to complete, but we chose to pursue it because we believe challenging problems with long-term potential deserve our attention. They can push boundaries and bring meaningful innovation to society.”

The team’s measurements show that the new, stacked sensors reproduce RGB colours more precisely than conventional silicon technologies. The sensors also boast high external quantum efficiencies (defined, for a photodetector, as the number of electrons collected per incident photon) of 50%, 47% and 53% for the red, green and blue channels respectively.
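For a photodetector, external quantum efficiency is conventionally the ratio of electrons collected to photons arriving at the device. The sketch below shows how that ratio relates photocurrent to optical power. The wavelength and optical power used in the example are hypothetical and are not taken from the study.

```python
H = 6.62607015e-34   # Planck constant, J s
C = 299792458.0      # speed of light, m/s
Q = 1.602176634e-19  # elementary charge, C

def external_quantum_efficiency(photocurrent_a, optical_power_w, wavelength_m):
    """Electrons collected per incident photon."""
    electrons_per_second = photocurrent_a / Q
    photons_per_second = optical_power_w * wavelength_m / (H * C)
    return electrons_per_second / photons_per_second

def photocurrent_for_eqe(eqe, optical_power_w, wavelength_m):
    """Photocurrent expected for a given efficiency (inverse of the above)."""
    return eqe * Q * optical_power_w * wavelength_m / (H * C)

# Hypothetical example: 1 microwatt of 650 nm light on a channel with 50% efficiency
current = photocurrent_for_eqe(0.50, 1e-6, 650e-9)
print(f"photocurrent: {current:.3e} A")
print(f"recovered efficiency: {external_quantum_efficiency(current, 1e-6, 650e-9):.2f}")
```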

Kovalenko says that in purely technical terms, the most obvious application for this sensor would be in consumer-grade colour cameras. However, he says that this path to commercialization would be very difficult due to competition from highly optimized and cost-effective conventional technologies already on the market. “A more likely and exciting direction,” he tells Physics World, “is in machine vision and in so-called hyperspectral imaging – that is, imaging at wavelengths other than red, green and blue.”

Perovskite sensors are particularly interesting in this context, explains team member Sergi Yakunin, because the wavelength range they absorb over can be precisely controlled by defining a larger number of colour channels that are clearly separated from each other. In contrast, silicon’s broad absorption spectrum means that silicon-based hyperspectral imaging devices require numerous filters and complex computer algorithms.

“This is very impractical even with a relatively small number of colours,” Kovalenko says. “Hyperspectral image sensors based on perovskite could be used in medical analysis or in automated monitoring of agriculture and the environment, for example, or in other specialized imaging systems that can isolate and enhance particular wavelengths with high colour fidelity.”

The researchers now aim to devise a strategy for making their sensor compatible with standard CMOS technology. “This might include vertical interconnects and miniaturized detector pixels,” says Tsarev, “and would enable seamless transfer of our multilayer detector concept onto commercial silicon readout chips, bringing the technology closer to real-world applications and large-scale deployment.”

The study is detailed in Nature.

The post Stacked perovskite photodetector outperforms conventional silicon image sensors appeared first on Physics World.

Leanspace, a European satellite operations technology provider, today announced that Qwaltec, a US-based defense contractor and leading provider of turnkey solutions and engineering services, has joined its growing partner ecosystem […]

The post Qwaltec joins Leanspace Partner Ecosystem to Deliver Next-Gen Spacecraft Operations Solutions for the US Market appeared first on SpaceNews.

SAN FRANCISCO – More than 4,000 people from 45 countries have signed up to attend the Small Satellite Conference at the Salt Palace Convention Center in Salt Lake City Aug. 10-13. After 38 years in Logan, Utah, SmallSat is moving to Salt Lake City because the convention center and surrounding hotels can accommodate thousands of […]

The post SmallSat heads to Salt Lake City as audience expands appeared first on SpaceNews.

A House appropriations bill provides funding for a civil space traffic coordination system but wants changes to increase its reliance on the Defense Department.

The post House appropriators want TraCSS to rely more on Defense Department appeared first on SpaceNews.

OTV-8 will test technologies for GPS-denied navigation and space network integration

The post U.S. military X-37B spaceplane prepares for eighth mission appeared first on SpaceNews.

SAN FRANCISCO – EraDrive, a Stanford spinoff, won a $1 million NASA contract to detect, identify and track space objects. It was the first contract for the Palo Alto, California, startup founded earlier this year by Space Rendezvous Laboratory (SLAB) director Simone D’Amico, Justin Kruger, SLAB postdoctoral fellow, and Sumant Sharma, a SLAB alum and […]

The post Stanford spinoff EraDrive claims $1 million NASA contract appeared first on SpaceNews.

In atom-based quantum technologies, motion is seen as a nuisance. The tiniest atomic jiggle or vibration can scramble the delicate quantum information stored in internal states such as the atom’s electronic or nuclear spin, especially during operations when those states get read out or changed.

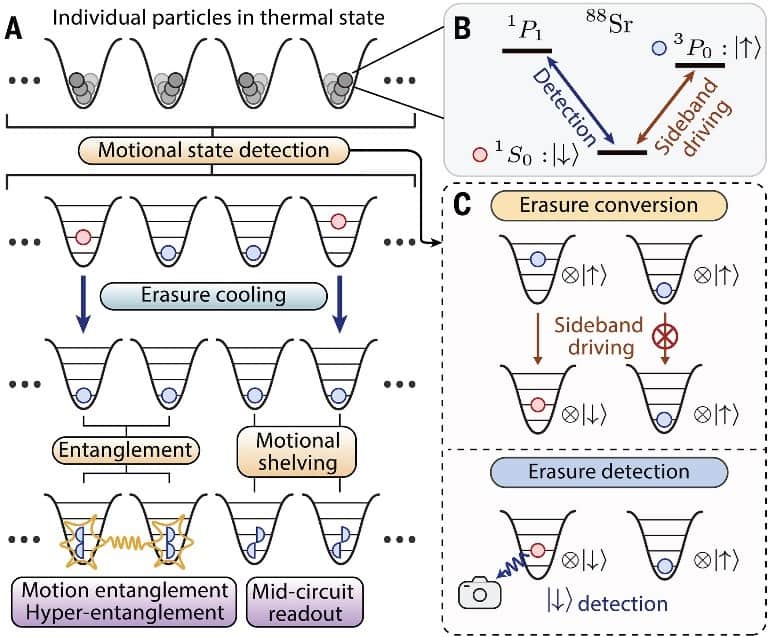

Now, however, Manuel Endres and colleagues at the California Institute of Technology (Caltech), US, have found a way to turn this long-standing nuisance into a useful feature. Writing in Science, they describe a technique called erasure correction cooling (ECC) that detects and corrects motional errors without disturbing atoms that are already in their ground state (the ideal state for many quantum applications). This technique not only cools atoms; it does so better than some of the best conventional methods. Further, by controlling motion deliberately, the Caltech team turned it into a carrier of quantum information and even created hyper-entangled states that link the atoms’ motion with their internal spin states.

“Our goal was to turn atomic motion from a source of error into a useful feature,” says the paper’s lead author Adam Shaw, who is now a postdoctoral researcher at Stanford University. “First, we developed new cooling methods to remove unwanted motion, like building an enclosure around a swing to block a chaotic wind. Once the motion is stable, we can start injecting it programmatically, like gently pushing the swing ourselves. This controlled motion can then carry quantum information and perform computational tasks.”

Atoms confined in optical traps – the basic building blocks of atom-based quantum platforms – behave like quantum oscillators, occupying different vibrational energy levels depending on their temperature. Atoms in the lowest vibrational level, the motional ground state, are especially desirable because they exhibit minimal thermal motion, enabling long coherence times and high-fidelity control over quantum states.
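The dependence of the ground-state population on temperature can be made concrete for an idealized case. The sketch below assumes a one-dimensional harmonic trap in thermal equilibrium (a Boltzmann distribution over vibrational levels); the trap frequency and temperatures are hypothetical values, not figures from the Caltech experiment.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def mean_occupation(trap_freq_hz, temperature_k):
    """Mean vibrational quantum number of a thermal harmonic oscillator."""
    x = H * trap_freq_hz / (K_B * temperature_k)
    return 1.0 / math.expm1(x)

def ground_state_fraction(trap_freq_hz, temperature_k):
    """Probability of finding the atom in the lowest vibrational level."""
    x = H * trap_freq_hz / (K_B * temperature_k)
    return -math.expm1(-x)  # equals 1 - exp(-x)

# Hypothetical 100 kHz trap at two temperatures
for temperature in (5e-6, 1e-6):
    p0 = ground_state_fraction(1e5, temperature)
    n_bar = mean_occupation(1e5, temperature)
    print(f"T = {temperature:.0e} K: ground-state fraction {p0:.3f}, mean occupation {n_bar:.3f}")
```

Under this thermal assumption the two quantities are tied together by ground-state fraction = 1 / (1 + mean occupation), so a colder atom or a tighter trap both raise the fraction in the motional ground state.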

Over the past few decades, scientists have developed various methods, including Sisyphus cooling and Raman sideband cooling, to persuade atoms into this state. However, these techniques face limitations, especially in shallow traps where motional states are harder to resolve, or in large-scale systems where uniform and precise cooling is required.

ECC builds on standard cooling methods to overcome these challenges. After an initial round of Sisyphus cooling, the researchers use spin-motion coupling and selective fluorescence imaging to pinpoint atoms still in excited motional states without disturbing the atoms already in the motional ground state. They do this by linking an atom’s motion to its internal electronic spin state, then shining a laser that only causes the “hot” (motionally excited) atoms to change the spin state and light up, while the “cold” ones in the motional ground state remain dark. The “hot” atoms are then either re-cooled or replaced with ones already in the motional ground state.

This approach pushed the fraction of atoms in the motional ground state from 77% (after Sisyphus cooling alone) to over 98%, and up to 99.5% when only the error-free atoms were selected for further use. Thanks to this high-fidelity preparation, the Caltech physicists further demonstrated their control over motion at the quantum level by creating a motional qubit consisting of atoms in a superposition of the ground and first excited motional states.

Unlike electronic superpositions, these motional qubits are insensitive to laser phase noise, highlighting their robustness for quantum information processing. Further, the researchers used the motional superposition to implement mid-circuit readout, showing that quantum information can be temporarily stored in motion, protected during measurement, and recovered afterwards. This paves the way for advanced quantum error correction, and potentially other applications as well.

“Whenever you find ways to better control a physical system, it opens up new opportunities,” Shaw observes. Motional qubits, he adds, are already being explored as a means of simulating systems in high-energy physics.

A further highlight of this work is the demonstration of hyperentanglement, or entanglement across both internal (electronic) and external (motional) degrees of freedom. While most quantum systems rely on a single type of entanglement, this work shows that motion and internal states in neutral atoms can be coherently linked, paving the way for more versatile quantum architectures.

The post Physicists turn atomic motion from a nuisance to a resource appeared first on Physics World.